A systematic literature review to identify and classify software requirement errors

Gursimran Singh Walia a, Jeffrey C. Carver b,*

a Mississippi State University, Department of Computer Science and Engineering, 300 Butler Hall, Mississippi State, MS 39762, United States

b University of Alabama, Computer Science, Box 870290, 101 Houser Hall, Tuscaloosa, AL 35487-0290, United States

article info

Article history:

Received 7 December 2007

Received in revised form 27 January 2009

Accepted 29 January 2009

Available online 5 March 2009

Keywords:

Systematic literature review

Human errors

Software quality

abstract

Most software quality research has focused on identifying faults (i.e., information that is incorrectly recorded in an artifact). Because software still exhibits incorrect behavior, a different approach is needed. This paper presents a systematic literature review to develop a taxonomy of errors (i.e., the sources of faults) that may occur during the requirements phase of the software lifecycle. This taxonomy is designed to aid developers during the requirement inspection process and to improve overall software quality. The review identified 149 papers from the software engineering, psychology and human cognition literature that provide information about the sources of requirements faults. A major result of this paper is a categorization of the sources of faults into a formal taxonomy that provides a starting point for future research into error-based approaches to improving software quality.

© 2009 Elsevier B.V. All rights reserved.

Contents

1. Introduction

2. Background

2.1. Existing quality improvement approaches

2.2. Background on error abstraction

3. Research method

3.1. Research questions

3.2. Source selection and search

3.3. Data extraction and synthesis

4. Reporting the review

4.1. Question 1: Is there any evidence that using error information can improve software quality?

4.2. Question 1.1: Are there any processes or methods reported in literature that use error information to improve software quality?

4.3. Question 1.2: Do any of these processes address the limitations and gaps identified in Section 2 of this paper?

4.4. Question 2: What types of requirement errors have been identified in the software engineering literature?

4.5. Question 2.1: What types of errors can occur during the requirement stage?

4.6. Question 2.2: What errors can occur in other phases of the software lifecycle that are related to errors that can occur during the requirements phase?

4.7. Question 2.3: What requirement errors can be identified by analyzing the source of actual faults?

4.8. Question 3: Is there any research from human cognition or psychology that can propose requirement errors?

4.9. Question 3.1: What information can be found about human errors and their classification?

4.10. Question 3.2: Which of the human errors identified in question 3.1 can have corresponding errors in software requirements?

4.11. Question 4: How can the information gathered in response to questions 1–3 be organized into an error taxonomy?

5. Process of developing the requirement error taxonomy

5.1. Developing the requirement error classes

5.2. Development of requirement error types

6. Discussion

6.1. Principal findings

6.2. Strengths and weaknesses

6.3. Contribution to research and practice communities

6.4. Conclusion and future work

Acknowledgements

Appendix A

Appendix B

Appendix C

References

0950-5849/$ - see front matter © 2009 Elsevier B.V. All rights reserved.

doi:10.1016/j.infsof.2009.01.004

* Corresponding author. Tel.: +1 205 348 9829; fax: +1 205 348 0219.

Information and Software Technology 51 (2009) 1087–1109

journal homepage: www.elsevier.com/locate/infsof

1. Introduction

The software development process involves the translation of

information from one form to another (i.e., from customer needs

to requirements to architecture to design to code). Because this

process is human-based, mistakes are likely to occur during the

translation steps. To ensure high-quality software (i.e., software

with few faults), mechanisms are needed to first prevent these

mistakes and then to identify them when they do occur. Successful

software organizations focus attention on software quality, espe-

cially during the early phases of the development process. By iden-

tifying problems early, organizations reduce the likelihood that

they will propagate to subsequent phases. In addition, finding

and fixing problems earlier rather than later is easier, less expen-

sive, and reduces avoidable rework [19,28].

The discussion of software quality centers on the use of a

few important terms: error, fault, and failure. Unfortunately, some

of these terms have competing, and often contradictory, definitions

in the literature. To alleviate confusion, we begin by providing a

definition for each term that will be used throughout the remain-

der of this paper. These definitions are quoted from Lanubile

et al. [53], and are consistent with software engineering textbooks

[32,67,77] and an IEEE Standard [1].

Error – defect in the human thought process made while trying to

understand given information, solve problems, or to use methods

and tools. In the context of software requirements specifications,

an error is a basic misconception of the actual needs of a user or

customer.

Fault – concrete manifestation of an error within the software. One

error may cause several faults, and various errors may cause iden-

tical faults.

Failure – departure of the operational software system behavior

from user expected requirements. A particular failure may be

caused by several faults and some faults may never cause a failure.
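The many-to-many relationships in these definitions can be made concrete with a small data model. The following is a minimal sketch, not part of the cited definitions: the class names mirror the three terms, while the `caused_by` fields, the `faults_by_error` helper, and all example records are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Error:
    """A mistake in human thinking (illustrative record, not from the paper)."""
    description: str


@dataclass
class Fault:
    """A concrete manifestation of one or more errors in an artifact."""
    description: str
    caused_by: List[Error] = field(default_factory=list)


@dataclass
class Failure:
    """An observed departure from expected behavior, traced to its faults."""
    description: str
    caused_by: List[Fault] = field(default_factory=list)


def faults_by_error(faults: List[Fault]) -> Dict[str, List[str]]:
    """Group fault descriptions under each error that caused them."""
    groups: Dict[str, List[str]] = {}
    for fault in faults:
        for error in fault.caused_by:
            groups.setdefault(error.description, []).append(fault.description)
    return groups


# Hypothetical example data: one error causes several faults,
# and one fault is caused by more than one error.
misread_need = Error("misunderstood how often the customer needs reports")
forgot_actor = Error("overlooked a stakeholder")

wrong_frequency = Fault("report frequency specified as weekly", [misread_need])
missing_trigger = Fault("no on-demand report requirement", [misread_need])
missing_recipient = Fault("auditor not listed as report recipient",
                          [misread_need, forgot_actor])
dormant_fault = Fault("unused glossary term defined incorrectly", [forgot_actor])

# A failure caused by several faults; dormant_fault never causes a failure.
late_report = Failure("auditor never receives the report",
                      [wrong_frequency, missing_recipient])

grouped = faults_by_error(
    [wrong_frequency, missing_trigger, missing_recipient, dormant_fault]
)
```

Grouping faults under their causing errors, as `faults_by_error` does, is the view this paper argues for: it shifts attention from individual faults to the errors that produced them.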

We realize that the term error has multiple definitions. In fact, IEEE Standard 610 provides four definitions ranging from an incorrect program condition (sometimes referred to as a program error) to a mistake in the human thought process (sometimes referred to as a human error) [1]. The definition used in this paper corresponds more closely to a human error than to a program error.

Most previous quality research has focused on the detection

and removal of faults (both early and late in the software lifecycle).

This research has examined the cause–effect relationships among

faults to develop fault classification taxonomies, which are used

in many quality improvement approaches (more details in Section

2.1). Despite these research advancements, empirical evidence

suggests that quality is still a problem because developers lack

an understanding of the source of problems, have an inability to

learn from mistakes, lack effective tools, and do not have a com-

plete verification process [19,28,86]. While fault classification

taxonomies have proven beneficial, faults still occur. Therefore,

to provide more insight into the faults, research needs to focus

on understanding the sources of the faults rather than just the

faults themselves. In other words, research should focus on the errors that caused the faults.

As a first step, we performed a systematic literature review to identify and classify the errors reported by other quality researchers. A systematic literature review is a formalized, repeatable process in which researchers systematically search a body of literature to document the state of knowledge on a particular subject. The benefit of performing a systematic review, as opposed to using the more common ad hoc approach, is that it gives researchers more confidence that they have located as much relevant information as possible. This approach is more commonly used in other fields, such as medicine, to document high-level conclusions that can be drawn from a series of detailed studies [48,79,92]. To be effective, a systematic review must be driven by an overall goal. In this review, the high-level goal is to:

Identify and classify types of requirement errors into a taxonomy to

support the prevention and detection of errors.

The remainder of this paper is organized as follows. Section 2 describes existing quality improvement approaches and previous

uses of errors. Section 3 gives details about the systematic review

process and its application. Sections 4 and 5 report the results of

the review. Finally, the conclusions and future work are presented

in Section 6.

2. Background

To provide context for the review, Section 2.1 first describes

successful quality improvement approaches that focus on faults,

along with their limitations. These limitations motivate the need

to focus on the source of faults (rather than faults alone) as an in-

put to the software quality process. Then, Section 2.2 introduces

the concept of error abstraction and describes the literature from

other fields that is relevant to this review.

2.1. Existing quality improvement approaches

The NASA Software Engineering Laboratory’s (SEL) process improvement mechanism focuses on packaging software process,

product and measurement experience to facilitate faster learning

[2,9]. This approach classifies faults from different phases into a

taxonomy to support risk, cost and cycle time reduction. Similarly,

the Software Engineering Institute (SEI) uses a measurement

framework to improve quality by understanding process and prod-

uct quality [36]. This approach also uses fault taxonomies as a basis

for building a checklist that facilitates the collection and analysis of

faults and improves quality and learning. The SEL and SEI ap-

proaches are two successful examples that represent many fault-

based approaches. Even with such approaches, faults still exist.

Therefore, a singular focus on faults does not ultimately lead to

the elimination of all faults. Faults are only a concrete manifesta-

tion, or symptom, of the real problem; and without identifying

the source, some faults will be overlooked.

One important quality improvement approach that does focus

on errors is root cause analysis. However, due to its complexity

and expense, it has not found widespread success [55]. Building

on root cause analysis, the orthogonal defect classification (ODC)

was developed to provide in-process feedback to developers and


help them learn from their mistakes. The ODC also uses an under-

standing of the faults to identify cause–effect relationships [16,26].

In addition, fault classification taxonomies have been developed

and evaluated to aid in quality improvement throughout the devel-

opment process [37,43,59].

Empirical evidence suggests that ‘‘quantifying, classifying and

locating individual faults is a subjective and intricate notion, espe-

cially during the requirement phase” [38,53]. In addition, Lawrence

and Kosuke have criticized various fault classification taxonomies

because of their inability to satisfy certain attributes (e.g., simplic-

ity, comprehensiveness, exclusiveness, and intuitiveness) [54].

These fault classification taxonomies have been the basis for most

of the software inspection techniques (e.g., checklist-based, fault-

based, and perspective-based) used by developers

[10,22,25,38,58,75]. However, when using these fault-based tech-

niques inspectors do not identify all of the faults present. To com-

pensate for undetected faults, various supporting mechanisms that

have been added to the quality improvement process (e.g., re-

inspections and defect estimation techniques [5,11,34,84,85]) have

made significant contributions to fault reduction. However,

requirements faults still slip to later development phases. This

fault slippage motivates a need for additional improvements to

the fault detection process. The use of error information is a prom-

ising approach for significantly increasing the effectiveness of

inspectors.

Table 1 summarizes the limitations of the quality improvement

approaches described in this section. To overcome these limita-

tions, the objective of this review is to identify as many errors as

possible, as described by researchers in the literature, and classify

those errors so that they are useful to developers. A discussion of

how the errors can be used by developers is provided in Section

6 of this paper.

2.2. Background on error abstraction

Lanubile et al. first investigated the feasibility of using error

information to support the analysis of a requirements document.

They defined error abstraction as the analysis of a group of related

faults to identify the error that led to their occurrence. After an er-

ror was identified, it was then used to locate other related faults.

One drawback to this process is that the effectiveness depends

heavily on the error abstraction ability of the inspectors [53]. To

address this problem, our work augments Lanubile et al.’s work

by systematically identifying and classifying errors to provide sup-

port to the error abstraction process.

In addition to research reported in the software engineering literature, it is likely that errors can be identified by reviewing research from other fields. Given that software development is a human-based activity, phenomena associated with the human mental process and its fallibilities can also cause requirements errors. For example, two case studies report that human reasons, i.e., reasons not directly related to software engineering, can contribute to fault and error injection [55,86]. Studies of these human reasons can be found in research from the fields of human cognition and psychology. Therefore, literature from both software engineering and psychology is needed to provide a more comprehensive list of errors that may occur.

3. Research method

Following published guidelines [18,30,48], this review included

the following steps:

(1) Formulate a review protocol.

(2) Conduct the review (identify primary studies, evaluate those

studies, extract data, and synthesize data to produce a con-

crete result).

(3) Analyze the results.

(4) Report the results.

(5) Discuss the findings.

The review protocol specified the questions to be addressed, the

databases to be searched and the methods to be used to identify,

assemble, and assess the evidence. To reduce researcher bias, the

protocol, described in the remainder of this section, was developed

by one author, reviewed by the other author and then finalized

through discussion, review, and iteration among the authors and

their research group. An overview of the review protocol is pro-

vided here, with a complete, detailed description appearing in a

technical report [91].

3.1. Research questions

The main goal of this systematic review was to identify and

classify different types of requirement errors. To properly focus the review, a set of research questions was needed. With the underlying goal of providing support to the software quality improvement process, the high-level question addressed by this review was:

What types of requirements errors can be identified from the liter-

ature and how can they be classified?

Motivated by the background research and to complement the

types of errors identified in the software engineering literature,

this review makes use of cognitive psychology research into under-

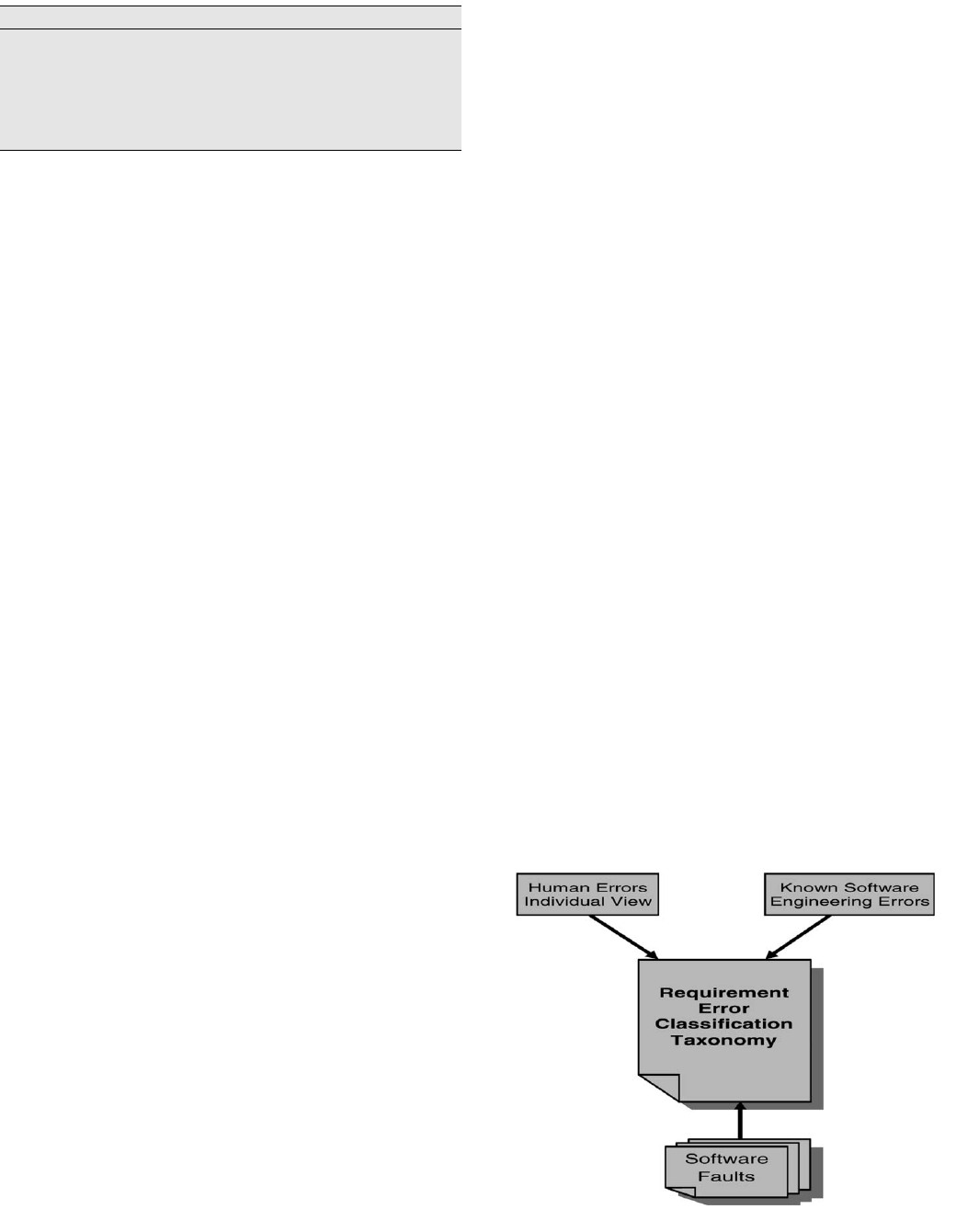

standing human errors. Fig. 1 shows that the results of a literature

search in software engineering about faults and their causes are

Table 1

Limitations of existing quality improvement approaches.

Focus on faults rather than errors

Inability to counteract the errors at their origin and uncover all faults

Lack of methods to help developers learn from mistakes and gain insights into

major problem areas

Inability of defect taxonomies to satisfy certain attributes, e.g., simplicity,

comprehensiveness, exclusiveness, and intuitiveness

Lack of a process to help developers identify and classify errors

Lack of a complete verification process

Fig. 1. Types of literature searched to identify the requirement errors.

G.S. Walia, J.C. Carver / Information and Software Technology 51 (2009) 1087–1109

1089

combined with the results of a literature search of the more gen-

eral psychological accounts of human errors to develop the

requirement error taxonomy.

The high-level research question was decomposed into three

specific research questions and sub-questions shown in Table 2,

which guided the literature review. The first question required

searching the software engineering literature to identify any qual-

ity improvement approaches that focus on errors. These ap-

proaches were analyzed to identify any shortcomings and record

information about errors. The second question also required

searching the software engineering literature with the purpose of

explicitly identifying requirement errors and errors from other life-

cycle phases (i.e., the design or coding phase) that are related to

requirement errors. In addition, this question also required analy-

sis of faults to identify the underlying errors. As a result, this ques-

tion identified a list of requirement errors from software

engineering literature. To answer the third question, we searched

the human error literature from the human cognition and psychol-

ogy fields to identify other types of errors that may occur while

developing a requirement artifact. This question involved analyz-

ing the models of human reasoning, planning, and problem solving

and their fallibilities to identify errors that can occur during the

requirements development stage. Finally, the fourth research ques-

tion, which is really a meta-question using the information from

questions 1 to 3, combines the errors identified from the software

engineering literature and the human cognition and psychology lit-

erature into a requirement error taxonomy.

3.2. Source selection and search

The initial list of source databases was developed using detailed source selection criteria, as described below:

• The databases were chosen such that they included journals and conferences focusing on: software quality, software engineering, empirical studies, human cognition, and psychology.

• Multiple databases were selected for each research area: software engineering, human cognition, and psychology.

• The databases had to have a search engine with an advanced search mechanism that allowed keyword searches.

• Full text documents must be accessible through the database or through other means.

• Any other sources known to be relevant but not included in one of the above databases were searched separately (e.g., Empirical Software Engineering: An International Journal, text books, and SEI technical reports).

• The list of databases was reduced where possible to minimize the redundancy of journals and proceedings across databases.

This list was then reviewed by a software engineering expert

and a cognitive psychology expert who added two databases, a text

book and one journal. The final source list appears in Table 3.

To search these databases, a set of search strings was created for

each of the three research questions based on keywords extracted

from the research questions and augmented with synonyms.

Appendix A provides a detailed discussion of the search strings

used for each research question.
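One common way to turn keywords and their synonyms into a database query is to join synonyms with OR and join the resulting concept groups with AND. The sketch below illustrates that construction only. The keywords shown are hypothetical placeholders rather than the strings actually used in this review (those are given in Appendix A), and the AND/OR syntax is an assumption that would need adapting to each database's search engine.

```python
from typing import List


def build_search_string(concepts: List[List[str]]) -> str:
    """OR together the synonyms of each concept, then AND the concepts."""
    groups = []
    for synonyms in concepts:
        # Quote multi-word terms so they are searched as phrases.
        quoted = [f'"{term}"' if " " in term else term for term in synonyms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Hypothetical keywords for illustration; not the review's actual search terms.
example_concepts = [
    ["software", "requirement"],
    ["error", "mistake", "root cause"],
]

query = build_search_string(example_concepts)
print(query)
```

Keeping each concept as a separate list makes it straightforward to add a synonym or a whole concept without rewriting the query by hand.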

The database searches resulted in an extensive list of potential

papers. To ensure that all papers included in the review were

clearly related to the research questions, detailed inclusion and

exclusion criteria were defined. Table 4 shows the inclusion and

exclusion criteria for this review. The inclusion criteria are specific
to each research question, while the exclusion criteria are common

for all questions. The process followed for paring down the search

results was:

(1) Use the title to eliminate any papers clearly not related to

the research focus;

(2) Use the abstract and keywords to exclude papers not related

to the research focus; and

(3) Read the remaining papers and eliminate any that do not

fulfill the criteria described in Table 4.
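The three steps above form a staged filter in which cheaper checks run first and only the survivors reach the next stage. The following is a minimal sketch of that structure. In the review itself each decision was a human judgment against Table 4; the keyword predicates, the `Paper` fields, and the sample records here are hypothetical stand-ins used only to make the pipeline runnable.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Paper:
    title: str
    abstract: str
    full_text: str


def screen(papers: List[Paper],
           stages: List[Callable[[Paper], bool]]) -> Tuple[List[Paper], List[int]]:
    """Apply each stage in order; return survivors and the count after each stage."""
    remaining = list(papers)
    counts = []
    for keep in stages:
        remaining = [paper for paper in remaining if keep(paper)]
        counts.append(len(remaining))
    return remaining, counts


# Hypothetical stand-ins for the reviewers' judgment at each step.
def title_relevant(paper: Paper) -> bool:
    return "error" in paper.title.lower()


def abstract_relevant(paper: Paper) -> bool:
    return "tutorial" not in paper.abstract.lower()


def meets_criteria(paper: Paper) -> bool:
    return "empirical" in paper.full_text.lower()


candidates = [
    Paper("Requirement errors in practice",
          "A study of human error in requirements.",
          "We report an empirical study of requirement errors."),
    Paper("Requirement error tutorial",
          "A tutorial overview.",
          "This tutorial introduces common mistakes."),
    Paper("Compiler optimization",
          "Loop unrolling heuristics.",
          "An empirical evaluation of unrolling."),
]

selected, counts_after_stage = screen(
    candidates, [title_relevant, abstract_relevant, meets_criteria]
)
```

Recording the count after every stage, rather than only the final list, is what allows a review to report how many papers each step eliminated.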

After selecting the relevant papers, a quality assessment was

performed in accordance with the guidelines published by the Centre for Reviews and Dissemination and the Cochrane Reviewers’

Handbook as cited by Kitchenham et al. (i.e., each study was as-

sessed for bias, internal validity, and external validity) [48]. First,

the study described in each paper was classified as either an exper-

iment or an observational study. Then, a set of quality criteria, fo-

cused on study design, bias, validity, and generalizability of results,

was used to evaluate quality of the study. The study quality assess-

ment checklist is shown in Table 5.

Using this process, the initial search returned over 25,000 pa-

pers, which were narrowed down to 7838 papers based on their ti-

tles, then to 482 papers based on their abstracts and keywords.

Table 2

Research questions and motivations.

| Research question | Motivation |
| --- | --- |
| 1. Is there any evidence that using error information can improve software quality? | Assess the usefulness of errors in existing approaches; identify shortcomings of the current approaches and avenues for improvement |
| 1.1. Are there any processes or methods reported in literature that use error information to improve software quality? | |
| 1.2. Do any of these processes address the limitations and gaps identified in Section 2 (Table 1) of this paper? | |
| 2. What types of requirement errors have been identified in the software engineering literature? | Identify types of errors in the software engineering literature as an input to an error classification taxonomy |
| 2.1. What types of errors can occur during the requirement stage? | |
| 2.2. What errors can occur in other phases of the software lifecycle that are related to requirement errors? | |
| 2.3. What requirement errors can be identified by analyzing the source of actual faults? | |
| 3. Is there any research from human cognition or psychology that can propose requirement errors? | Investigate the contribution of human errors from the fields of human cognition and psychology |
| 3.1. What information can be found about human errors and their classification? | |
| 3.2. Which of the human errors identified in question 3.1 can have corresponding errors in software requirements? | |
| 4. How can the information gathered in response to questions 1–3 be organized into an error taxonomy? | Organize the error information into a taxonomy |

Table 3

Source list.

Databases: IEEE Xplore, INSPEC, ACM Digital Library, SCIRUS (Elsevier), Google Scholar, PsycINFO (EBSCO), Science Citation Index

Other journals: Empirical Software Engineering – An International Journal, Requirements Engineering Journal

Additional sources: Reference lists from primary studies, books, Software Engineering Institute technical reports


Then, these 482 papers were read to select the final list of 149 pa-

pers based on the inclusion and exclusion criteria.
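The reduction at each screening step can be checked with a few lines of arithmetic. The sketch below uses only the counts reported above. Because the initial search result is given only as "over 25,000", that figure is treated here as a lower bound (an assumption), which makes the first retention rate an upper bound rather than an exact value.

```python
# Paper counts reported in the text after each screening step.
INITIAL_LOWER_BOUND = 25_000  # the text says "over 25,000", so this is a lower bound
AFTER_TITLE = 7838
AFTER_ABSTRACT = 482
FINAL = 149


def retention(before: int, after: int) -> float:
    """Percentage of papers kept by a screening step, rounded to one decimal."""
    return round(100 * after / before, 1)


# Upper bound, because the true initial count exceeds 25,000.
title_upper_bound = retention(INITIAL_LOWER_BOUND, AFTER_TITLE)
abstract_rate = retention(AFTER_TITLE, AFTER_ABSTRACT)
full_text_rate = retention(AFTER_ABSTRACT, FINAL)

print(f"title screening kept at most {title_upper_bound}%")
print(f"abstract and keyword screening kept {abstract_rate}%")
print(f"full-text reading kept {full_text_rate}%")
```

Under these counts, the abstract and keyword step is the most selective of the three, keeping roughly one paper in sixteen.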

Of these 149 papers, 108 were published in 13 leading journals

and six conferences in software engineering, and 41 were pub-

lished in nine psychology journals. The distribution of the selected

papers is shown in Table 6, including the number of papers from

each source along with the percentage of the overall total. In addition, three textbooks [62,69,73] provided additional information about human errors that were also found in the journal and conference proceedings included in Table 6. The list of all included literature (journals, conference proceedings, and textbooks) is provided in Appendix C.

Based on the results from the quality assessment criteria de-

scribed in Table 5 (and discussed in more detail in Section 6), all

of the identified papers were of high quality, providing

additional confidence in the overall quality of the set of selected

papers. In addition, all of the relevant papers that the authors were

aware of prior to the search [16,24,26,28,53,55,69] (i.e., papers that

were identified during the background investigation and before

commencing the systematic review) were located by the search

process during the systematic review, an indication of the com-

pleteness of the search. Also, all of the relevant papers from the ref-

erence lists of papers identified during the search were found

independently by the search process. Contrary to our expectations,

Table 6 shows that only a small number of papers appeared in the

Journal of Software Testing, Verification, and Reliability, in

the International Requirements Engineering Conference, and in

the Software Quality Journal. Because we expected these journals

to have a larger number of relevant papers, we wanted to ensure

there was not a problem with the database being used. Therefore,

we specifically searched these two journals again, but no addi-

tional relevant material was found.

Furthermore, in some cases a preliminary version of a paper

was published in a leading conference with a more complete jour-

nal paper following. In this case, only the journal paper was in-

cluded in the review thereby reducing the number of papers

from some conferences (e.g., preliminary work by Sutcliffe et al.

on the impact of human error on system requirements was pub-

lished in International Conference on Requirements Engineering,

but is not included in the review because of a later journal version

published in the International Journal of Human–Computer Inter-

action [80]).

3.3. Data extraction and synthesis

We used data extraction forms to ensure consistent and accu-

rate extraction of the important information from each paper re-

lated to the research questions. In developing the data extraction

forms, we determined that some of the information was needed

regardless of the research question, while other information was

specific to each research focus. Table 7 shows the data that was ex-

tracted from all papers. Table 8 shows the data that was extracted

for each specific research focus.

Table 5

Quality assessment.

| # | Experimental studies | Observational studies |
| --- | --- | --- |
| 1 | Does the evidence support the findings? | Do the observations support the conclusions or arguments? |
| 2 | Was the analysis appropriate? | Are comparisons clear and valid? |
| 3 | Does the study identify or try to minimize biases and other threats? | Does the study use methods to minimize biases and other threats? |
| 4 | Can this study be replicated? | Can this study be replicated? |

Table 6

Paper distribution.

Source Count %

IEEE Computer 19 12.8

Journal of System and Software 13 8.7

Journal of Accident Analysis and Prevention 11 7.4

ACM Transactions on Software Engineering 11 7.4

Communications of the ACM 8 5.4

IBM Systems Journal 8 5.4

IEEE Transaction in Software Engineering 8 5.6

ACM Transactions on Computer–Human Interaction 6 4

Applied Psychology: An International Review 6 4

SEI Technical Report Website 5 3.4

Journal of Information and Software Technology 5 3.4

IEEE Int’l Symposium on Software Reliability Engineering 4 2.7

Journal of Ergonomics 4 2.7

IEEE Trans. on Systems, Man, and Cybernetics (A): Systems and

Humans

4 2.7

IEEE International Symposium on Empirical Software Engineering 4 2.7

Requirements Engineering Journal 4 2.7

International Conference on Software Engineering 3 2

Journal of Computers in Human Behavior 3 2

Empirical Software Engineering: An International Journal 3 2

The International Journal on Aviation Psychology 2 1.3

IEEE Annual Human Factors Meeting 2 1.3

International Journal of Human–Computer Interaction 2 1.3

Software Process: Improvement and Practice 2 1.3

Journal of Software Testing, Verification and Reliability 1 0.6

Journal of Reliability Engineering and System Safety 1 0.6

IEEE International Software Metrics Symposium 1 0.6

Journal of Information and Management 1 0.6

Software Quality Journal 1 0.6

High Consequence System Surety Conference 1 0.6

Crosstalk: The Journal of Defense Software Engineering 1 0.6

Journal of IEEE Computer and Control Engineering 1 0.6

Total 149 100
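The percentage column of Table 6 is each source's share of the 149 included papers. A minimal sketch of that arithmetic follows; the helper name `share` is an assumption introduced for illustration, and only a few rows of the table are reproduced.

```python
# Counts copied from a few rows of Table 6. `share` is a hypothetical helper,
# not something defined in the paper.
TOTAL_PAPERS = 149

counts = {
    "IEEE Computer": 19,
    "Communications of the ACM": 8,
    "IBM Systems Journal": 8,
    "International Conference on Software Engineering": 3,
}


def share(count: int, total: int = TOTAL_PAPERS) -> float:
    """Return the percentage of `total` represented by `count`, to one decimal."""
    return round(100 * count / total, 1)


for source, count in counts.items():
    print(f"{source}: {count} papers, {share(count)}%")
```

Rounding to one decimal place is a presentation choice made here; the table itself shows some shares as whole numbers.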

Table 4

Inclusion and exclusion criteria.

| RQ | Inclusion criteria | Exclusion criteria |
|---|---|---|
| 1 | Papers that focus on analyzing/using the errors (source of faults) for improving software quality; empirical studies (qualitative or quantitative) of using the error information in software lifecycle | Papers that are based only on expert opinion; short-papers, introductions to special issues, tutorials, and mini-tracks; studies not related to any of the research questions; preliminary conference versions of included journal papers; studies not in English; studies whose findings are unclear and ambiguous (i.e., results are not supported by any evidence) |
| 2 | Papers that talk about errors, mistakes or problems in the software development process and requirements in particular; papers about error, fault, or defect classifications; empirical studies (qualitative or quantitative) on the cause of software development defects | |
| 3 | Papers from human cognition and psychology about human thought process, planning, or problem solving; empirical studies on human errors; papers that survey or describe the human error classifications | |

G.S. Walia, J.C. Carver / Information and Software Technology 51 (2009) 1087–1109


Consistent with the process followed in previous systematic reviews (e.g., [49]), the first author reviewed all papers and extracted data. Then, the second author independently reviewed and extracted data from a sample of the papers. We then compared the data extracted by each reviewer for consistency. We found that we had consistently extracted information from the sample of papers, suggesting that the second author did not need to review the remainder of papers in detail and that the information extracted by the first author was sufficient. The data extracted from all papers was synthesized to answer each question as described in Section 4.
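The consistency check between the two reviewers can be illustrated with a simple per-paper agreement score. This is only a sketch under stated assumptions: the text does not say which measure was used, so plain field-by-field agreement is assumed here, and the function name and recorded values are hypothetical (the data item names come from Table 7).

```python
def field_agreement(first: dict, second: dict) -> float:
    """Fraction of shared data items on which two reviewers recorded the same value."""
    shared = first.keys() & second.keys()
    if not shared:
        raise ValueError("the two extraction records have no data items in common")
    same = sum(1 for item in shared if first[item] == second[item])
    return same / len(shared)


# Hypothetical extraction records for one paper, keyed by Table 7 data items.
reviewer_1 = {
    "Type of article": "journal",
    "Data analysis": "quantitative",
    "Control group": "no",
}
reviewer_2 = {
    "Type of article": "journal",
    "Data analysis": "mixed",
    "Control group": "no",
}

print(f"agreement: {field_agreement(reviewer_1, reviewer_2):.2f}")
```

Free-text items such as study aims would need a judgment-based comparison rather than strict equality, so a score like this fits only the categorical data items.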

4. Reporting the review

4.1. Question 1: Is there any evidence that using error information can

improve software quality?

The idea of using the source of faults to improve software quality is not novel. Other researchers have used this information in different ways with varying levels of success. A review of the different methods indicates that knowledge of the source of faults is useful for process improvement, for defect prevention (by helping developers learn from their mistakes), and for detection of defects during inspections. A major drawback of these approaches is that they typically do not provide a formal process to assist developers in finding and fixing errors. In fact, only the approach by Lanubile et al. provided any systematic way to use error information to improve the quality of a requirements document [53]. Another drawback to these methods is that they rely only on a sample of faults to identify errors, therefore potentially overlooking some errors.

While these approaches have positive aspects and describe software errors (as discussed in the answers to 1.1), they also have limitations (as discussed in the answers to 1.2). A total of 13 papers addressed this question and its related sub-questions.

4.2. Question 1.1: Are there any processes or methods reported in

literature that use error information to improve software quality?

The review identified nine methods that stress the use of error information during software development. These methods are described in this section along with their use in software organizations, and the types of errors they helped identify.

Table 7

Data items extracted from all the papers.

| Data items | Description |
|---|---|
| Identifier | Unique identifier for the paper (same as the reference number) |
| Bibliographic | Author, year, title, source |
| Type of article | Journal/conference/technical report |
| Study aims | The aims or goals of the primary study |
| Context | Context relates to one/more search focus, i.e., research area(s) the paper focuses on |
| Study design | Type of study – industrial experiment, controlled experiment, survey, lessons learned, etc. |
| Level of analysis | Single/more researchers, project team, organization, department |
| Control group | Yes, no; if "Yes": number of groups and size per group |
| Data collection | How the data was collected, e.g., interviews, questionnaires, measurement forms, observations, discussion, and documents |
| Data analysis | How the data was analyzed; qualitative, quantitative or mixed |
| Concepts | The key concepts or major ideas in the primary studies |
| Higher-order interpretations | The second- (and higher-) order interpretations arising from the key concepts of the primary studies. This can include limitations, guidelines or any additional information arising from application of major ideas/concepts |
| Study findings | Major findings and conclusions from the primary studies |

Table 8

Data items extracted for each research focus.

| Search focus | Data item | Description |
|---|---|---|
| Quality improvement approach | Focus or process | Focus of the quality improvement approach and the process/method used to improve quality |
| | Benefits | Any benefits from applying the approach identified |
| | Limitations | Any limitations or problems identified in the approach |
| | Evidence | The empirical evidence indicating the benefits of using error information to improve software quality and any specific errors found |
| | Error focus | Yes or No; and if "Yes", how does it relate to our research |
| Requirement errors | Problems | Problems found in requirement stage |
| | Errors | Whether the problems constitute requirement stage errors |
| | Faults | Faults at requirement stage and their causes |
| | Mechanism | Process used to analyze or abstract requirement errors |
| Error–fault–defect taxonomies | Focus | The focus of the taxonomy (i.e., error, fault, or failure) |
| | Error focus | Yes or No; if "Yes", how does it relate to our research questions |
| | Requirement phase | Yes or No (whether it was applied in requirement phase) |
| | Benefits | Benefits of the taxonomy |
| | Limitation | Limitations of the taxonomy |
| | Evidence | The empirical evidence regarding the benefits of error/fault/defect taxonomy for software quality |
| Software inspections | Focus | The focus of inspection method (i.e., error, fault, or failure) |
| | Error focus | Yes or No; if "Yes", how does it relate to our research questions |
| | Requirement phase | Yes or No (Did it inspect requirement documents?) |
| | Benefits | Benefits of the inspection method |
| | Limitation | Limitations of the inspection method |
| Human errors | Human errors and classifications | Description of errors made by human beings and classes of their fallibilities during planning, decision making and problem solving |
| | Errors attributable | Software development errors that can be attributable to human errors |
| | Related faults | Software faults that can be caused by human errors |
| | Evidence | The empirical evidence regarding errors made by humans in different situations (e.g., aircraft control) that are related to requirement errors |

The defect causal analysis approach is a team-based quality improvement technique used to analyze samples of previous faults to determine their cause (the error) in order to suggest software process changes and prevent future faults. Empirical findings show that the use of the defect causal analysis method at IBM and at Computer Sciences Corporation (CSC) resulted in a 50% decrease in defects over each two-year period studied. Based on the results from these studies, Card suggests that all causes of software faults fall into one of four categories: (a) methods (incomplete, ambiguous, wrong, or enforced), (b) tools and environment (clumsy, unreliable, or defective), (c) people (who lack adequate training or understanding), and (d) input and requirements (incomplete, ambiguous, or defective) [24].

The software failure analysis approach is a team-based quality approach whose goal is to improve the software development and maintenance process. Using this approach, developers analyze a representative sample of defect data to understand the causes of particular classes of defects (i.e., specification, user-interface, etc.). This process was used by engineers at Hewlett–Packard to understand the cause of user-interface defects and develop better user interface design guidelines. During their next year-long project, using these guidelines, the user-interface defects found during test decreased by 50%. All the causes of user-interface defects were classified into four different categories: guidelines not followed, lack of feedback or guidelines, different perspectives, or oops! (forgotten) [41].

The defect causal analysis (using experts) approach accumulates expert knowledge to substitute for an in-depth, per-object defect causal analysis. The goal is to identify the causes of faults found during normal development, late in the development cycle and after deployment. Based on the results of a study, Jacobs et al. describe the causes of software defects, e.g., inadequate unjustified trust, inadequate communication, unclear responsibilities, no change control authority, usage of different implementations, etc. The study also showed that the largest number of defects was the result of communication problems [46].

The defect prevention process uses causal analysis to determine the source of a fault and to suggest preventive actions. Study results show that it helps in preventing commonly occurring errors, provides significant reductions in defect rates, leads to less test effort, and results in higher customer satisfaction. Based on the analysis of different software products over six years, Mays et al. classified defect causes as oversight causes (e.g., developer overlooked something, or something was not considered thoroughly), education causes (developer did not understand some aspect), communication causes (e.g., something was not communicated), or transcription causes (developer knew what to do but simply made a mistake) [60].

The software bug analysis process identifies the source of bugs. It also contains countermeasures (with implementation guidance) for preventing bugs. To assess the usefulness of this approach, a sample of 28 bugs found during the debug and test phases of authorization terminal software development was analyzed by group leaders, designers, and third party designers to determine their cause. A total of 23 different causes were identified, and more than half of these causes were related to designers' carelessness [61].

The root cause analysis method introduced the concept of a multi-dimensional defect trigger to help developers determine the root cause of a fault (the error) in order to identify areas for process improvement. Leszak et al. conducted a case study of the defect modification requests during the development of a transmission network element product. They found the root causes of defects to include: communication problems or lack of awareness of the need for communication, lack of domain/system/tools/process knowledge, lack of change coordination, and individual mistakes [55].

The error abstraction process analyzes groups of related faults to

determine their cause (i.e., the error). This error information is

then used to find other related faults in a software artifact [53].

The defect-based software process improvement approach analyzes faults through attribute focusing to provide insight into their potential causes and makes suggestions that can help a team adjust their process in real time [24,59].

The goal-oriented process improvement methodology also uses defect causal analysis for tailoring the software process to address specific project goals in a specific environment. Basili and Rombach describe an error scheme that classifies the cause of software faults into different problem domains. These problem domains include: application area (i.e., misunderstanding of application or problem domain), methodology to be used (not knowing, misunderstanding, or misuse of problem solution processes), and the environment of the software to be developed (misunderstanding or misuse of the hardware or software of a given project) [8].

Total quality management is a philosophy for achieving long-term success by linking quality with customer satisfaction. Key elements of this philosophy include total customer satisfaction, continuous process improvement, a focus on the human side of quality, and continuous improvement for all quality parameters. It involves identifying and evaluating various probable causes (errors) and testing the effects of change before making it [47]. This approach is based on the defect prevention process described earlier.

Each of the above methods uses error information to improve software quality. Each has shown some benefits and identified some important types of errors. This information served as an input to the requirement error taxonomy.
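Several of the methods above share one step: each fault in a sample is assigned a cause, and the causes are tallied to see which ones dominate. The sketch below illustrates that step with the four cause categories attributed to Mays et al. [60]. The fault identifiers, the sample itself, and the function names are hypothetical and are not data from any of the cited studies.

```python
from collections import Counter
from enum import Enum


class Cause(Enum):
    """Cause categories described for the defect prevention process [60]."""
    OVERSIGHT = "oversight"
    EDUCATION = "education"
    COMMUNICATION = "communication"
    TRANSCRIPTION = "transcription"


# Hypothetical fault sample: (fault id, cause assigned during causal analysis).
fault_sample = [
    ("F1", Cause.COMMUNICATION),
    ("F2", Cause.OVERSIGHT),
    ("F3", Cause.COMMUNICATION),
    ("F4", Cause.TRANSCRIPTION),
    ("F5", Cause.COMMUNICATION),
    ("F6", Cause.EDUCATION),
]


def tally_causes(faults):
    """Count how many faults in the sample were attributed to each cause."""
    return Counter(cause for _, cause in faults)


def dominant_cause(faults):
    """Return the most frequent cause and its count."""
    return tally_causes(faults).most_common(1)[0]


cause, count = dominant_cause(fault_sample)
print(f"most frequent cause: {cause.value} ({count} of {len(fault_sample)} faults)")
```

A tally like this only describes the sample that was analyzed, so errors that left no fault in the sample do not appear in it.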

4.3. Question 1.2: Do any of these processes address the limitations

and gaps identified in Section 2 of this paper?

Each of the methods described in the answer to question 1.1 addresses many of the limitations described in Table 1, but they still have some additional limitations. The limitations common to the different methods are summarized in Table 9.

While these methods do analyze the source of faults, they only use a small sample of faults, potentially overlooking some errors. As a result, the error categories are generic and appear to be incomplete. Moreover, some methods only describe the cause categories (and not actual errors), while others provide only a few examples for each category. None of the methods provides a list of all the errors that may occur during the requirements phase. Another common problem is that while many methods do emphasize the importance of human errors and provide a few examples (e.g., errors due to designers' carelessness and transcription errors), they do not go far enough. These approaches lack a strong cognitive theory to describe a more comprehensive list of the errors. Finally, no formal process is available that can guide developers in identifying the errors and resulting faults when they occur. The inability of the existing methods to overcome these limitations motivates the need for development of a more complete requirement error taxonomy.

4.4. Question 2: What types of requirement errors have been identified

in the software engineering literature?

The identification of requirement errors will support future research into software quality. To obtain an initial list of errors, the software engineering literature was reviewed to extract any errors that had been described by other researchers. This research question is addressed in detail by sub-questions 2.1 and 2.2. A total of 55 papers were analyzed to identify the types of requirement errors in software engineering literature, 30 to address question 2.1 and 24 to address question 2.2. The complete list of all the requirement errors that were identified in the software engineering literature is provided in Appendix B.

4.5. Question 2.1: What types of errors can occur during the

requirement stage?

The review uncovered a number of candidates for requirement errors. Table 10 lists potential requirement errors along with the relevant references.

The first row of Table 10 contains six different studies that analyzed faults found during software development using different causal analysis methods (as described in question 1.1) to identify requirement errors [16,24,41,46,60,61]. Examples of the errors identified by these studies include: lapses in communications among developers, inadequate training, not considering all the ways a system can be used, and misunderstanding certain aspects of the system functionality.

The second source of errors includes empirical studies that classify requirement problems experienced by developers, studies that classify difficulties in determining requirements, and other case studies that trace requirement errors to the problems faced during the requirements engineering process [13,21,42,77,81,82]. Examples of the errors from these studies include: lack of user participation, inadequate skills and resources, complexity of application, undefined requirement process, and cognitive biases.

The third source of errors includes studies that describe the root causes of troubled IT projects, some of which belong to the requirement stage [7,39,76]. Examples of the errors from these studies include: unrealistic business plan, use of immature technology, not involving user at all stages, lack of experienced or capable management, and improper work environment.

Table 10 also includes the findings from an empirical study of 13 software companies that identified 32 potential problem factors, and other similar empirical studies that identified requirement problem factors affecting software reliability [12,27,79,92]. Examples of the errors from these studies include: hostile team relationships, schedule pressure, human nature (mistakes and omissions), and flawed analysis method.

Table 10 also includes studies that determined the causes of requirement traceability problems and requirement inconsistencies, errors due to domain knowledge, requirement management errors, and errors committed among team members during software development [29,33,65,71]. Examples of the errors from these studies include: poor planning, insufficiently formalized change requests, impact analysis not systematically achieved, misunderstandings due to working with different systems, and team errors.

Finally, Table 10 contains an "other" category that includes studies describing the root causes of safety-related software errors and descriptions of requirement errors causing safety-related errors, as well as studies that describe requirement errors based on the contribution of human error from social and organizational literature [56,57,87]. Examples of the errors from these studies include misunderstanding of assumptions and interface requirements, misunderstanding of dependencies among requirements, mistakes during application of a well-defined process, and attention or memory slips. The complete list of requirement errors derived from the sources listed in Table 10 is shown in Appendix B.

4.6. Question 2.2: What errors can occur in other phases of the

software lifecycle that are related to errors that can occur during the

requirements phase?

In addition to the errors found specifically in the requirements phase, the review also uncovered errors that occur during the design and coding phases. These errors can also occur during the requirements phase, including:

Missing information: Miscommunication between designers in different teams; lack of domain, system, or environmental knowledge; or misunderstandings caused by working simultaneously with several different software systems and domains [17,41].

Slips in system design: Misunderstanding of the current situation while forming a goal (resulting in an inappropriate choice of actions), insufficient specification of actions to follow for achieving goal (resulting in failure to complete the chosen actions), using an analogy to derive a sequence of actions from another similar situation (resulting in the choice of a sequence of actions that is different from what was intended), or the sequence of actions is forgotten because of an interruption [63,64].

System programs: Technological, organizational, historical, individual, or other causes [31].

Cognitive breakdown: Inattention, over attention, choosing the wrong plan due to information overload, wrong action, incorrect model of problem space, task complexity, or inappropriate level of detail in the task specification [50,51].

Table 9

Limitations of methods in question 1.1.

| Limitations | Methods |
|---|---|
| Requires extensive documentation of problem reports and inspection results; and over-reliance on historical data | [24,50,47,55,59,60] |
| Cost of performing the causal analysis through implementing actions ranges from 0.5% to 1.5% of the software budget; and requires a startup investment | [24,41,59,60] |
| It is cost-intensive, people-intensive and useful for analyzing only a small sample of faults, or group of related faults | [8,24,41,46,59–61] |
| Requires experienced developers, extensive meetings among experts, and interviewing the actual developers to analyze the probable causes of faults | [46] |
| Results in a large number of actions, and has to be applied over a period of time to test the suggested actions/improvements to software process | [55,59–61] |
| It can only detect the process inadequacy/process improvement actions and not reveal the actual error | [47,59–61] |
| It analyzes the faults found late in the software lifecycle | [46,59–61] |
| Does not guide the inspectors, and relies heavily on the creativity of inspectors in abstracting errors from faults during software inspections | [53] |

Table 10

Sources used to identify requirement errors in the literature.

| Sources of errors | References |
|---|---|
| Root causes, cause categories, bug causes, defect–fault causes | [16,24,41,46,60,61] |
| Requirement engineering problem classification | [13,21,42,77,81,82] |
| Empirical studies on root causes of troubled projects or errors | [7,39,76] |
| Influencing factors in reference stage and software development | [12,27,79,92] |
| Causes of requirement traceability and requirement inconsistency | [29,33,65,71] |
| Domain knowledge problems | [65] |
| Management problems | [29] |
| Situation awareness/decision making errors | [33] |
| Team errors | [71] |
| Other | [40,56,57,87] |

4.7. Question 2.3: What requirement errors can be identified by

analyzing the source of actual faults?

In addition to identifying requirement errors directly reported in the literature, this review also identified a list of faults that could be traced back to their underlying error [3,4,6,14,15,26,37,44,68,70,72]. For example, in the case where a requirements document is created by multiple sub-teams, a particular functionality is missing because each sub-team thought the other one would include it. The error that caused this problem is the lack of communication among the sub-teams. Another example is the case where two requirements are incomplete because they are both missing important information about the same aspect of system functionality. The error that caused this problem is the fact that developers did not completely understand that aspect of the system and it was manifested in multiple places. These errors were added to the list of errors that served as input to question 4. These errors include:

Misunderstanding or mistakes in resolving conflicts (e.g., there

are unresolved requirements or incorrect requirements that

were agreed on by all parties).

Mistakes or misunderstandings in mapping inputs to outputs,

input space to processes, or processes to output.

Misunderstanding of some aspect of the overall functionality of

the system.

Mistakes while analyzing requirement use cases or different scenarios in which the system can be used.

Unresolved issues about complex system interfaces or unanticipated dependencies.

4.8. Question 3: Is there any research from human cognition or

psychology that can propose requirement errors?

To address the fact that requirements engineering is a human-based activity and prone to errors, the review also examined human cognition and psychology literature. The contributions of this literature to requirements errors are addressed by sub-questions 3.1 and 3.2. A total of 32 papers that analyzed human errors and fallibilities were used to identify errors that may occur during the software requirements phase.

4.9. Question 3.1: What information can be found about human errors

and their classification?

The major types of human errors and classifications identified

in human cognition and psychology include:

Reason’s classification of mistakes, lapses, and slips: Errors are classified as either mistakes (i.e., the wrong plan is chosen to accomplish a particular task), lapses (i.e., the correct plan is chosen, but a portion is forgotten during execution), or slips (i.e., the plan is correct and fully remembered, but during its execution something is done incorrectly) [23,31,64].

Rasmussen’s skill, rule and knowledge based human error taxonomy: Skill based slips and lapses are caused by mistakes while executing a task even though the correct task was chosen. Rule and knowledge based mistakes occur due to errors in intentions, including choosing the wrong plan, violating a rule, or making a mistake in an unfamiliar situation [23,40,63].

Reason’s general error modeling system (GEMS): A model of

human error in terms of unsafe acts that can be intentional

or unintentional. Unintentional acts include slips and lapses

while intentional acts include mistakes and violations

[23,35,63,78].

Senders and Moray’s classification of phenomenological taxonomies, cognitive taxonomies, and deep rooted tendency taxonomies: Description of the how, what and why concerns of an error, including omissions, substitutions, unnecessary repetitions, errors based on the stages of human information processing (e.g., perception, memory, and attention), and errors based on biases [23,78].

Swain and Guttman’s classification of individual discrete actions:

Omission errors (something is left out), commission errors

(something is done incorrectly), sequence errors (something is

done out of order), and timing errors (something is done too

early or too late) [83].

Fitts and Jones' control error taxonomy: Based on a study of "pilot errors" that occur while operating aircraft controls. The errors include: substitution (choosing the wrong control), adjustment (moving the control to the wrong position), forgetting the control position, unintentional activation of the control, and inability to reach the control in time [35].

Cacciabue’s taxonomy of erroneous behavior: Includes system and personnel related causes. Errors are described in relation to execution, planning, interpretation, and observation along with the correlation between the cause and effects of erroneous behavior [23].

Galliers, Minocha, and Sutcliffe’s taxonomy of influencing factors for

occurrence of errors: Environmental conditions, management and

organizational factors, task/domain factors, and user/personnel

qualities including the slip and mistake types of errors described

earlier [40].

Sutcliffe and Rugg’s error categories: Operational description, cognitive causal categories, social and organizational causes, and design errors [81].

Norman’s classification of human errors: Formation of intention, activation, and triggering. Important errors include errors in classifying a situation, errors that result from ambiguous or incompletely specified intentions, slips from faulty activation of schemas, and errors due to faulty triggering [62–64,66].

Human error identification (HEI) tool: Describes the SHERPA tool that classifies errors as action, checking, retrieval, communication, or selection [74,78].

Human error reduction (HERA): Technique that analyzes and describes human errors in the air traffic control domain. HERA contains error taxonomies for five cognitive domains: perception and vigilance; working memory; long-term memory; judgment, planning and decision-making; and response execution [20,45].
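Reason's classification of mistakes, lapses, and slips in the list above can be read as a short decision procedure over three questions about a task: was the right plan chosen, was it fully remembered, and was it carried out correctly? The sketch below encodes that reading. The function and parameter names are assumptions introduced for illustration and do not come from the cited sources.

```python
from enum import Enum
from typing import Optional


class ErrorType(Enum):
    MISTAKE = "mistake"  # the wrong plan was chosen
    LAPSE = "lapse"      # correct plan, but a portion was forgotten during execution
    SLIP = "slip"        # correct plan, fully remembered, but something was done incorrectly


def classify(plan_correct: bool,
             fully_remembered: bool,
             executed_correctly: bool) -> Optional[ErrorType]:
    """Return the error type for one task, or None when no error occurred."""
    if not plan_correct:
        return ErrorType.MISTAKE
    if not fully_remembered:
        return ErrorType.LAPSE
    if not executed_correctly:
        return ErrorType.SLIP
    return None


# A requirements author picks the right elicitation plan but forgets one step of it.
print(classify(plan_correct=True, fully_remembered=False, executed_correctly=True))
```

The checks are ordered so that a wrong plan is always reported as a mistake, whatever happened during execution. This follows the definitions above, where lapses and slips presuppose a correct plan.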

4.10. Question 3.2: Which of the human errors identified in question

3.1 can have corresponding errors in software requirements?

Those errors that were relevant to requirements were included

in the initial list of errors that served as input to question 4 to make

it more comprehensive. Examples of the translation of these errors

into requirements errors are found in Table 11.


4.11. Question 4: How can the information gathered in response to

questions 1–3 be organized into an error taxonomy?

Some of the errors were identified in more than one of the

bodies of literature surveyed: quality improvement approaches,

requirement errors, other software errors, and human errors. The

errors described in the answers to questions 1–3 were collected,

analyzed and combined into an initial requirement error taxonomy

with the objective of making the taxonomy simple and easy to use

yet comprehensive enough to be effective.

The development of the requirement error taxonomy consisted of two steps. First, the detailed list of errors identified during the literature search, and described in the answers to questions 1–3, was grouped into a set of detailed requirement error classes. Second, the error classes were grouped into three high-level requirement error types. These high-level error types were also found during the literature search. The next section details the process of developing the requirement error taxonomy and how the taxonomy was constructed.
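The two grouping steps can be pictured as building a nested structure: errors are filed under classes, and classes under types. The sketch below is illustrative only. The four communication entries are taken from Table 12, while the placeholder entry, the type labels, and the function name are hypothetical. The assignment of an error to a class is the authors' manual judgment and is supplied here as input data, not computed.

```python
from collections import defaultdict

# (error description, reference number, error class).
# The first four rows are copied from Table 12.
reported_errors = [
    ("Inadequate project communications", 41, "Communication"),
    ("Changes in requirements not communicated", 46, "Communication"),
    ("Lack of communication between sub-teams", 16, "Communication"),
    ("Lack of user communication", 13, "Communication"),
    # Hypothetical entry, included only so the sketch has a second class.
    ("Placeholder error", 0, "Placeholder class"),
]

# Hypothetical labels standing in for the high-level error types.
class_to_type = {
    "Communication": "Type A (placeholder)",
    "Placeholder class": "Type B (placeholder)",
}


def build_taxonomy(errors, class_to_type):
    """Step 1 groups errors into classes; step 2 files each class under a type."""
    classes = defaultdict(list)
    for description, reference, error_class in errors:
        classes[error_class].append((description, reference))
    taxonomy = defaultdict(dict)
    for error_class, members in classes.items():
        taxonomy[class_to_type[error_class]][error_class] = members
    return {error_type: dict(grouped) for error_type, grouped in taxonomy.items()}


taxonomy = build_taxonomy(reported_errors, class_to_type)
for error_type, grouped in taxonomy.items():
    for error_class, members in grouped.items():
        print(f"{error_type} / {error_class}: {len(members)} errors")
```

Keeping the reference number with each error preserves the link from a taxonomy entry back to the paper that reported it.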

5. Process of developing the requirement error taxonomy

We organized the errors into a taxonomy that will guide future error detection research with the intent of addressing limitations in the existing quality improvement approaches. The errors identified from the software engineering and psychology fields were collected, analyzed for similarities, and grouped into the taxonomy. Errors that had similar characteristics (symptoms), whether from software engineering or from psychology, were grouped into an error class to support the identification of related errors when an error is found. An important constraint while grouping the requirement errors was to keep the error classes as orthogonal as possible [87,88].

In Section 5.1, for each error class, we first describe the error class. Then we provide a table that lists the specific errors from the literature search that were grouped into that error class. Finally, we give an example of an error from that class, along with a fault likely to be caused by that error. The examples are drawn from two sample systems. Due to space limitations, only a brief overview of each example system is provided here.

Loan arranger system (LA): The LA application supports the business of a loan consolidation organization. This type of organization makes money by purchasing loans from banks and then reselling those loans to other investors. The LA allows a loan analyst to select a bundle of loans that have been purchased by the organization and that match the criteria provided by an investor. These criteria may include amount of risk, principal involved, and expected rate of return. When an investor specifies investment criteria, the system selects the optimal bundle of loans that satisfy the criteria. The LA system automates information management activities, such as updating loan information provided monthly by banks.

Automated ambulance dispatch system (AAD): This system supports the computer-aided dispatch of ambulances to improve the utilization of ambulances and other resources. The system receives emergency calls, evaluates incidents, issues warnings, and recommends ambulance assignments. The system should reduce the response time for emergency incidents by making dispatching decisions based on recommendations made by the system [4,52].

5.1. Developing the requirement error classes

The requirement error classes were created by grouping errors with similar characteristics. Tables 12–25 show each error class along with the specific errors (from the Software Engineering and Human Cognition fields) that make up that class.
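The grouping can be pictured as a small data structure in which each error class holds the specific errors assigned to it, together with the citation numbers of their sources. The following is only an illustrative sketch: the type names `SpecificError` and `ErrorClass` and the helper `cited_sources` are hypothetical and are not part of the review. The entries are those listed for the management error class (Table 19).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SpecificError:
    """One error reported in the literature (hypothetical type name)."""
    description: str
    sources: tuple  # citation numbers as they appear in the tables


@dataclass
class ErrorClass:
    """A group of specific errors with similar characteristics."""
    name: str
    table: int
    errors: list = field(default_factory=list)


# Entries copied from Table 19 (management errors).
management = ErrorClass(
    name="Management errors",
    table=19,
    errors=[
        SpecificError("Poor management of people and resources", (27, 71)),
        SpecificError("Lack of management leadership and necessary motivation", (29,)),
        SpecificError("Problems in assignment of resources to different tasks", (13,)),
    ],
)


def cited_sources(error_class):
    """Return the sorted citation numbers that support an error class."""
    return sorted({s for e in error_class.errors for s in e.sources})
```

Calling `cited_sources(management)` collects the distinct sources behind the class, which is one simple way to see how many papers contributed to a given grouping.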

Table 11

Requirement errors drawn from human errors.

| Error | Description |
|---|---|
| Not understanding the domain | Misunderstandings due to the complex nature of the task; some properties of the problem space are not fully investigated; and, mistaken assumptions are made |
| Not understanding the specific application | Misunderstanding the order of events, the functional properties, the expression of end states, or goals |
| Poor execution of processes | Mistakes in applying the requirements engineering process, regardless of its adequacy; out of order steps; and lapses on the part of the people executing the process |
| Inadequate methods of achieving goals and objectives | System-specific information was omitted leading to the selection of the wrong technique or process; selection of a technique or process that, while successful on other projects, has not been fully investigated or understood in the current situation |
| Incorrectly translating requirements to written natural language | Lapses in organizing requirements; omission of necessary verification at critical points during the execution of an action; repetition of verification leading to the repetition or omission of steps |
| Other human cognition errors | Mistakes caused by adverse mental states, loss of situation awareness, lack of motivation, or task saturation; mistakes caused by environmental conditions |

Table 12

Communication errors.

| Error | References |
|---|---|
| Inadequate project communications | [41] |
| Changes in requirements not communicated | [46] |
| Communication problems, lack of communication among developers and between developers and users | [60] |
| Communication problems | [55] |
| Poor communication between users and developers, and between members of the development team | [47] |
| Lack of communication between sub-teams | [16] |
| Communication between development teams | [13] |
| Lack of user communication | [13] |
| Unclear lines of communication and authority | [39] |
| Poor communication among developers involved in the development process | [12,27] |
| Communication problems, information not passed between individuals | [33] |
| Communication errors within a team or between teams | [56] |
| Lack of communication of changes made to the requirements | [56] |
| Lack of communication among groups of people working together | [71] |

Table 13

Participation errors.

| Error | References |
|---|---|
| No involvement of all the stakeholders | [46] |
| Lack of involvement of users at all times during requirement development | [47] |
| Involving only selected users to define requirements due to the internal factors like rivalry among developers or lack of the motivation | [39,40,87] |
| Lack of mechanism to involve all the users and developers together to resolve the conflicting requirements needs | [24] |


The communication errors class (Table 12) describes errors that

result from poor or missing communications among the various

stakeholders involved in developing the requirements.

Example:

Error: Customer did not communicate that the LA system

should be used by between one and four users (loan analysts)

simultaneously.

Fault: Omitted functionality because the requirements specify

operations as if they are performed by only one user at a time.

The participation error class (Table 13) describes errors that result from inadequate or missing participation of important stakeholders involved in developing the requirements.

Example:

Error: Bank lender, who was not involved in the requirements

process, wanted the LA application system to handle both fixed

rate loans and adjustable rate loans.

Table 14

Domain knowledge errors.

| Error | References |
|---|---|
| Lack of domain knowledge or lack of system knowledge | [12,17,40,41,55,92] |
| Complexity of the problem domain | [12,13,21,42] |
| Lack of appropriate knowledge about the application | [27] |
| Complexity of the task leading to misunderstandings | [40] |
| Lack of adequate training or experience of the requirement engineer | [41] |
| Lack of knowledge, skills, or experience to perform a task | [71] |
| Some properties of the problem space are not fully investigated | [31] |
| Mistaken assumptions about the problem space | [56] |

Table 15

Specific application errors.

| Error | References |
|---|---|
| Lack of understanding of the particular aspects of the problem domain | [60,65] |
| Misunderstandings of hardware and software interface specification | [56] |
| Misunderstanding of the software interfaces with the rest of the system | [56] |
| User needs are not well-understood or interpreted while resolving conflicting requirements | [61] |
| Mistakes in expression of the end state or output expected | [64] |
| Misunderstandings about the timing constraints, data dependency constraints, and event constraints | [56,57] |
| Misunderstandings among input, output, and process mappings | [70] |

Table 16

Process execution errors.

| Error | References |
|---|---|
| Mistakes in executing the action sequence or the requirement engineering process, regardless of its adequacy | [23,35,63,78] |
| Execution or storage errors, out of order sequence of steps and slips/lapses on the part of people executing the process | [35,40,66,85] |

Table 17

Other human cognition errors.

| Error | References |
|---|---|
| Mistakes caused by adverse mental states, loss of situation awareness | [33,71,87] |
| Mistakes caused by ergonomics or environmental conditions | [12] |
| Constraints on humans as information processors, e.g., task saturation | [40] |

Table 18

Inadequate method of achieving goals and objectives errors.

| Error | References |
|---|---|
| Incomplete knowledge leading to poor plan on achieving goals | [40] |
| Mistakes in setting goals | [40] |
| Error in choosing the wrong method or wrong action to achieve goals | [50,51] |
| Some system-specific information was misunderstood leading to the selection of wrong method | [33] |
| Selection of a method that was successful on other projects | [23,69] |
| Inadequate setting of goals and objectives | [76] |
| Error in selecting a choice of a solution | [56] |
| Using an analogy to derive a sequence of actions from other similar situations resulting in the wrong choice of a sequence of actions | [31,40,64,69] |
| Transcription error, the developer understood everything but simply made a mistake | [60] |

Table 19

Management errors.

| Error | References |
|---|---|
| Poor management of people and resources | [27,71] |
| Lack of management leadership and necessary motivation | [29] |
| Problems in assignment of resources to different tasks | [13] |

Table 20

Requirement elicitation errors.

| Error | References |
|---|---|
| Inadequate requirement gathering process | [16] |
| Only relying on selected users to accurately define all the requirements | [39] |
| Lack of awareness of all the sources of requirements | [27] |
| Lack of proper methods for collecting requirements | [13] |

Table 21

Requirement analysis errors.

| Error | References |
|---|---|
| Incorrect model(s) while trying to construct and analyze solution | [50,51] |
| Mistakes in developing models for analyzing requirements | [8] |
| Problem while analyzing the individual pieces of the solution space | [13] |
| Misunderstanding of the feasibility and risks associated with requirements | [87] |
| Misuse or misunderstanding of problem solution processes | [8] |
| Unresolved issues and unanticipated dependencies in solution space | [17] |
| Inability to consider all cases to document exact behavior of the system | [60] |
| Mistakes while analyzing requirement use cases or scenarios | [8,74] |

Table 22

Requirement traceability errors.

| Error | References |
|---|---|
| Inadequate/poor requirement traceability | [13,42,76] |
| Inadequate change management, including impact analysis of changing requirements | [29] |

Table 23

Requirement organization errors.

| Error | References |
|---|---|
| Poor organization of requirements | [41] |
| Lapses in organizing requirements | [23] |
| Ineffective method for organizing together the requirements documented by different developers | [8] |

Table 24

No use of standard for documenting errors.

| Error | References |
|---|---|
| No use of standard format for documenting requirements | [41] |
| Different technical standard or notations used by sub teams for documenting requirements | [39] |

Table 25

Specification errors.

| Error | References |
|---|---|
| Missing checks (item exists but forgotten) | [16] |
| Carelessness while organizing or documenting requirement regardless of the effectiveness of the method used | [7,8] |
| Human nature (mistakes or omissions) while documenting requirements | [83,92] |
| Omission of necessary verification checks or repetition of verification checks during the specification | [50,51] |


Fault: Omitted functionality as requirements only consider fixed

rate loans.

The domain knowledge error class (Table 14) describes errors that occur when requirement authors lack knowledge or experience about the problem domain.

Example:

Error: Requirement author lacks knowledge about the relative priority of emergency types within the AAD domain.

Fault: The functionality is incorrect because the requirements

contain the wrong ambulance dispatch algorithm.

The specific application error class (Table 15) describes errors

that occur when the requirement authors lack knowledge about

specific aspects of the application being developed (as opposed

to the general domain knowledge).

Example:

Error: Requirement author does not understand the order in

which status changes should be made to the ambulances in

the AAD system.

Fault: The requirements specify an incorrect order of events: the status of the ambulance is updated after it is dispatched rather than before, leaving a small window of time in which the same ambulance could be dispatched twice.
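The effect of this ordering fault can be illustrated with a short sketch. It is not taken from the AAD specification: the `Ambulance` type, the status values, and the function names are all hypothetical, and the overlap between two requests is simulated deterministically in a single thread rather than with real concurrency.

```python
from dataclasses import dataclass


@dataclass
class Ambulance:
    ident: str
    status: str = "available"  # hypothetical values: "available", "dispatched"


def dispatch_faulty(ambulance, send):
    """Order described in the fault: send first, update the status afterwards."""
    if ambulance.status != "available":
        return False
    send(ambulance)                  # a second request may be evaluated here
    ambulance.status = "dispatched"
    return True


def dispatch_corrected(ambulance, send):
    """Intended order: update the status first, then send."""
    if ambulance.status != "available":
        return False
    ambulance.status = "dispatched"
    send(ambulance)
    return True


def simulate(dispatch):
    """Count how often one ambulance is sent when a second request
    arrives while the first one is still being processed."""
    unit = Ambulance("A1")
    sent = []

    def send_quietly(a):
        sent.append(a.ident)

    def send_with_overlapping_request(a):
        sent.append(a.ident)
        # A second incident is evaluated before the first call returns.
        dispatch(a, send_quietly)

    dispatch(unit, send_with_overlapping_request)
    return len(sent)
```

With the faulty ordering, `simulate(dispatch_faulty)` sends the same ambulance twice; with the corrected ordering, `simulate(dispatch_corrected)` sends it once. A real dispatch system would additionally need the status check and update to be atomic, which this sketch does not attempt to show.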

The process execution error class (Table 16) describes errors

that occur when requirement authors make mistakes while exe-