School of Engineering

Department of Electrical and Computer Engineering

Capstone Program Spring 2021

Senior year Design Projects

At a Glance…

S21-01

Title: Energy Disaggregation using NILM

Team Members: Lori Cheng, Elaina Heraty, Raul Mori, Jake Seary, Andrew

Tomlinson

Advisor: Dr. Hana Godrich

Keywords

REDD, Neural Networks, Non-Intrusive Load Monitoring, Energy Disaggregation, Autoencoders

Abstract

For this project we will be analyzing the topic of energy disaggregation through deep learning

techniques similar to those used by Mario Bergés and Henning Lange in their paper BOLT [3].

To do this we will use the Reference Energy Disaggregation Data Set (REDD) as detailed in [1].

The purpose of energy disaggregation is to calculate the energy consumption of individual

appliances given the total aggregate power consumption of a system such as a house. The

process begins with preprocessing data consisting of power measurements for both whole

residential homes as well as individual appliances and devices within them. Once the data is

cleaned and initially modeled, we experiment with various neural networks such as

autoencoders and convolutional neural networks which will be fed the cleaned data. This

network should then output different waveforms, which we will then have to label according

to the appliances they represent. Once the inferred subcomponent waveforms are obtained

and the labels are made, we will then present a way to visualize these results in a user interface.

Ultimately, one will be able to obtain the power consumption of their home, visualize it, and

make better energy choices based on it. This work is based on Non-Intrusive Load

Monitoring (NILM) which seeks to obtain measurements on the loads of individual appliances

in a building while only measuring the total load of the system. This allows us to eliminate the

cost of installing multiple individual sensors to measure power consumption of single

appliances. Instead, we can have one single apparatus hooked up to the central power system,

and infer the subcomponents using deep learning.
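
As a rough illustration of what disaggregation means, the sketch below uses a simple combinatorial baseline rather than the neural networks described in the abstract: for each aggregate reading, it picks the on/off combination of appliances whose rated powers sum closest to that reading. The appliance names and wattages are made-up values for illustration and are not taken from REDD.

```python
from itertools import product

# Hypothetical rated powers in watts (illustrative values, not REDD data).
APPLIANCES = {"fridge": 150.0, "microwave": 1200.0, "lighting": 60.0}


def disaggregate(aggregate_watts, appliances=APPLIANCES):
    """Return the on/off state per appliance that best explains one reading."""
    names = list(appliances)
    best_states, best_error = None, float("inf")
    # Try every on/off combination (2**n candidates; fine for a handful of loads).
    for states in product((0, 1), repeat=len(names)):
        estimate = sum(s * appliances[n] for s, n in zip(states, names))
        error = abs(aggregate_watts - estimate)
        if error < best_error:
            best_states, best_error = states, error
    return dict(zip(names, best_states))


if __name__ == "__main__":
    for reading in (0.0, 155.0, 1265.0, 1410.0):
        print(reading, disaggregate(reading))
```

A baseline like this breaks down when appliances have similar or varying power draws, which is one motivation for learned models such as autoencoders.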

S21-02

Title: Real-time Analytics of Hurricane Gliders

Team Members: Radhe Bangad, Matthew Chan, Brian DelRocini, Kinjal Patel, Jasmine

Philip

Advisor: Dr. Scott Glenn

Keywords

Hurricane Predictions, Machine Learning, Data Analytics, Mobile App Development, Web App

Development

Abstract

In 2014, the National Oceanic and Atmospheric Administration created a pilot project that

consisted of deploying underwater autonomous robots, called gliders, to monitor upper ocean

conditions. The information gathered by these gliders has been crucial to improving hurricane

intensity forecasts, a task that requires large amounts of data and has, for the most part, eluded

hurricane specialists. While the gliders are autonomous during their assigned “missions”, they

still require pilots for directional instructions. Glider pilots must sift through large quantities of

data, usually in formats that must be parsed and converted to graphs for legibility and in various

file types that make code-sharing a challenge. Pilots must then manually input directions to the

gliders once they complete missions or have unexpectedly surfaced. Currently, pilots use a web

application to deal with these matters. However, as it translates to a poorly accessible mobile

application and the gliders could need pilot assistance at unpredictable times, there is a need for

a more accessible method of pilot-to-glider communication. With close coordination and

direction from the Rutgers University Center for Ocean Observing Leadership (RUCOOL), this

project will consist of creating intuitive web and mobile applications to integrate various

datasets from the gliders and automate data analysis and communication with the gliders.

These applications will be tested with active glider pilots at various research facilities. We aim

to aid glider pilots in making better informed and quicker piloting decisions, alleviate their

pressure and increase accuracy via automation, and ultimately improve hurricane predictions

for general public safety.

S21-03

Title: Saving Energy in The 21st-Century Through Real-Time Appliance Monitoring

Team Members: Karan Parab, Aashish Shashidhara Bharadwaj, Srinjoy

DasMahapatra, Victor Abril

Advisor: Dr. Jorge Ortiz

Keywords

Occupancy detection; energy data; smart meters; machine learning techniques; Arduino

sensors.

Abstract

In this project, the challenging problem of occupancy detection in a domestic environment is

studied based on information gathered from HVAC (Heating, Ventilation & Air Conditioning)

data. The most popular machine learning techniques, along with their boosting versions, that

are utilized for occupancy detection use measurements of a PIR sensor for training purposes.

In order to evaluate information gained from electricity features and to reduce dataset sparsity,

while maintaining the performance of classification techniques, mutual information is used as

a feature extraction technique. In order to determine the most efficient parameter

combinations of machine learning techniques, we performed a series of Monte Carlo

simulations for each method and for a wide range of parameters. Our simulation results show

that the Random Forest learning technique outperforms the other classification

techniques, with accuracy slightly over 80% and an F-measure of almost 84%.
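
To make the mutual-information step more concrete, here is a minimal sketch that scores a discrete feature against occupancy labels. The toy data, feature names, and function name are assumptions for illustration; the abstract does not describe the team's actual implementation.

```python
from collections import Counter
from math import log2


def mutual_information(feature, labels):
    """Mutual information I(X; Y) in bits between two discrete sequences."""
    n = len(feature)
    joint = Counter(zip(feature, labels))
    px = Counter(feature)
    py = Counter(labels)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) )
        mi += p_xy * log2(p_xy * n * n / (px[x] * py[y]))
    return mi


if __name__ == "__main__":
    # Hypothetical toy data: 1 = occupied, 0 = empty.
    occupied = [0, 0, 1, 1, 0, 1, 1, 0]
    hvac_high = [0, 0, 1, 1, 0, 1, 1, 0]  # tracks occupancy exactly
    weekday = [0, 1, 0, 1, 1, 0, 1, 0]    # unrelated to occupancy in this sample
    print(mutual_information(hvac_high, occupied))
    print(mutual_information(weekday, occupied))
```

Features with a score near zero carry little information about occupancy and are candidates for removal, which is how a ranking of this kind can reduce dataset sparsity.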

S21-04

Title: Posture Alert System

Team Members: Rebecca Golm, Disha Bailoor, Nabeel Saud, Shaan Kalola

Advisor: Dr. Anand Sarwate

Keywords

Posture, Alert System, Sensor Matrix, Machine Learning Algorithm, Frontend UI

Abstract

Many people spend most of their day sitting in a chair working on their computer. Keeping

good posture while sitting on a chair for long hours is important to prevent various health

issues. While there are current solutions for this problem, most of them require the user to

wear a device, which can feel intrusive and uncomfortable. Our solution to this problem is to

create a posture alert system that notifies the user when they need to fix their posture. We will

do this by creating a pressure sensor matrix to detect the user’s posture from their weight

distribution using a machine learning algorithm. This information will then be presented to the

user through a user interface.

Goal: Create a smart seat cushion that detects incorrect posture and sitting time to prevent

health issues caused by a sedentary lifestyle.

Impact: Help people with sedentary lifestyles make healthier choices in terms of posture and

screen exposure.

S21-05

Title: Wearable Fall Risk Detector

Team Members: Joseph Akselrod, Ritvik Biswas, Tyler Hong, Heather Wun

Advisor: Dr. Umer Hassan

Keywords

Discreet, Biomedical, Quantitative, Early Detection, Diagnostics

Abstract

This project intends to solve a growing problem of detecting elevated risk for falls in people

with various medical problems, advanced age, changes in medications, mental status, and

other factors. We design a discreet and non-invasive pressure sensing biosensor with the ability

to perform a “Timed Up-And-Go Test” commonly used by medical professionals in order to

quantitatively assess elevated risk for falls. Our solution is to be discreet enough that these

tests can be performed unobtrusively throughout the day and will not require supervision by any

medical professionals.

S21-06

Title: Charging Made Simple for You and the Economy

Team Members: Debendro Mookerjee, Thomas Darlington, Daniel Audino, Pratik

Patel

Advisor: Dr. Michael Caggiano

Keywords

Charging, electric scooters, environmentally friendly

Abstract

In this report we summarize the goals of our Capstone Project. Our project strives to improve

the way Veo’s electric scooters are charged by making battery charging simple, user-friendly,

inexpensive, and environmentally friendly. These electric scooters are found in many on-campus

and off-campus locations and are used by many residents and Rutgers students. We

find that the current method of charging these scooters is inefficient and we believe that

altering parts of the scooter design and shifting charging responsibility from company to

consumer will benefit everyone. In this report we explain the theory behind our planned

alterations as well as explain how we plan to execute our ideas.

S21-07

Title: PiCamRU: An advanced dashcam that uses machine learning to improve driving

habits

Team Members: Abdullah Bashir, Nayaab Chogle, Nisha Bhat, Amali Delauney

Advisor: Dr. Bo Yuan

Keywords

ML, R-Pi

Abstract

We present our plan to come up with a more accessible alternative to existing dashcams while

also expanding upon common features. Cloud storage and automatic file uploads should be

standard in this day and age, but they are hidden behind a paywall that comes with unnecessary

features. We have decided to use the Raspberry Pi 4 Model B, and we plan on implementing a

graphical user interface through an LCD touch screen module with the device. The first functions

to implement would be loop recording and file upload, followed by impact detection and event

tagging. Computer vision libraries and established machine learning algorithms will be used to

formulate a driving performance report. This will encourage the correction of negative driving

habits and should lower the risk of an accident occurring.

S21-08

Title: Bike Head-Up Display Equalizer (B.H.U.D-E)

Team Members: Andrew Ceng, Weibin Chen, Ian Chiang, Shamehir Raja

Advisor: Dr. Wade Trappe

Keywords

Arduino, Bluetooth, heads-up display, helmet, motorcycle, safety

Abstract

While cars have recently been enjoying a plethora of advances in crash prevention and safety,

most notably with Tesla and their A.I. autopilot and automatic braking features, the same

cannot be said for motorcycles. Over the past few decades, while deaths from car accidents

have steadily gone down thanks to improved safety designs and features, deaths from

motorcycle accidents have remained approximately constant. Thus, an incredible imbalance, in

terms of safety, exists between a car and a motorcycle. Backup cameras have become a staple

in nearly every modern car, whereas motorcyclists still need to turn their heads around

and/or take off their helmets. Close proximity sensors have also entered the mainstream, since

they help alleviate blind spots and provide crucial information to the driver; however,

they have yet to see widespread use on motorcycles. Clearly, there is a need to equalize this disparity,

which is what our group aims to do through the application of existing safety technology. By

implementing features such as a heads-up display (HUD) into a helmet and close proximity

sensors to the physical body of the vehicle, the rider will have more data available, which can

help them make informed decisions and possibly save their life.

S21-09

Title: Got it! Entity capture

Members: Joshua Hymowitz, Gautham Roni, Suraj Sanyal, Louis Shinohara

Advisor(s): Dr. Shahab Jalalvand, Nick Ruiz (Interactions)

Keywords

automatic entity capture, Zoom API, redaction, conversation mediator/virtual assistant,

WebRTC

Abstract

With the rapid public adoption of video calling technologies such as Zoom, Google Meet, and

Microsoft Teams, there is an increasing opportunity to provide business services between

customers and agents. This project aims to create a proof of concept design in which a

conversation mediator attends a customer support call and captures important information in

near-real time, using spoken language understanding. Similar to the Interactive Video-call

Response project, the student will learn how to interface with a platform based on WebRTC,

with the aim of capturing the customer's and agent's audio. The conversation mediator will

extract important entities like product names, account numbers, etc., by interfacing with

existing spoken language understanding technology supported by Interactions. As a point of

differentiation, Got it! also aims to support live, automatic redaction of sensitive information

during video calls via a websocket stream of call audio sent to a natural language processor

(NLP).

S21-10

Title: Improvements to the Viability of Solar Panels in the Field

Members: Nathaniel Glikman, Alexander Laemmle, Nicholas Meegan,

Bhargav Singaraju, Sukhjit Singh

Advisor(s): Dr. Michael Caggiano, Cameron Greene (L3Harris)

Keywords

Rotating Solar Panels, Photovoltaic Cells, Rotating Photovoltaic Cells, Solar Sensor, Microcontroller

Abstract

The capstone project, Improvements to the Viability of Solar Panels in the Field, aims to

increase the amount of sunlight received by a solar panel in the field each day. According to

statistics collected by Wholesale Solar, “Although your panels may get an average of 7 hours of

daylight a day, the average peak sun hours are generally around 4 or 5 [hours]”, where peak

sun hours offer the maximum amount of power received. The proposed capstone project

attempts to rectify this shortcoming of solar panel design by introducing a method to track the

sun throughout the course of the day, which increases the power absorbed and the efficiency.

Traditional methods of solar panel rotation require the use of databases collected by agencies

such as NREL to provide the angle of rotation for the panel. However, this method is not always

accurate; manual calibration of the solar panel may be required during tracking, and

accurate time and location data is critical. Instead, the proposed design utilizes a small solar

sensor: photovoltaic cells in a recessed box, with shadows cast on them by the roof. Data

gathered from this allows the panel to point at the sun directly: this is the point where there is

approximately equal light on all quadrants. Using artificial intelligence to compare with

previous iterations of rotating the panel, adjustments can be made to the solar cell’s location

for increased efficiency.
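
The "equal light on all quadrants" idea can be sketched as a small error computation. This is only an illustration under assumed conventions: the four-cell layout, the sign convention, the deadband value, and the function names are hypothetical, and the AI-based comparison with previous iterations is not modeled here.

```python
def pointing_error(top_left, top_right, bottom_left, bottom_right):
    """Normalized (azimuth, elevation) error from four quadrant cell readings.

    Both values are 0.0 when all quadrants receive equal light. The sign
    convention (positive = more light on the right / top) is an assumption.
    """
    total = top_left + top_right + bottom_left + bottom_right
    if total <= 0:
        return 0.0, 0.0  # no light: nothing to track
    azimuth = ((top_right + bottom_right) - (top_left + bottom_left)) / total
    elevation = ((top_left + top_right) - (bottom_left + bottom_right)) / total
    return azimuth, elevation


def step_command(error, deadband=0.05):
    """Map an error to a motor step of -1, 0, or +1, ignoring small errors."""
    if abs(error) <= deadband:
        return 0
    return 1 if error > 0 else -1


if __name__ == "__main__":
    az, el = pointing_error(1.0, 3.0, 1.0, 3.0)
    print(az, el, step_command(az), step_command(el))
```

The deadband keeps the motors from hunting back and forth when the quadrant readings are already nearly balanced.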

S21-11

Title: Project LOUIS

Team Members: Sahil Patel, Darshan Singh, Luan Tran, Tan Ngo, Khanh Nguyen

Advisor: Dr. Kristin Dana

Keywords

Computer Vision, Machine Learning, Alzheimer's

Abstract

Project LOUIS is an in-home network designed for the use of Alzheimer's and dementia

patients. Project LOUIS offers action-based reminders to the patients based on their daily tasks

and current actions detected by the system. The network consists of multiple devices

strategically located around the house of the user in order to be able to collect and serve data

and reminders to the patient based on their needs and requests. The modules include a central

home module, with a speaker and microphone input to communicate with the user, as well as

the other modules. The other type of module is a camera module, consisting of a camera, which

sends and receives data to and from the home module for further processing. The modules will

also be tasked with the computations necessary for our implemented technologies, including

computer vision with object detection, as well as speech recognition and machine learning.

With these technologies, Project LOUIS will be able to actively search for the patient in certain

areas in the house and be able to offer reminders based on their location, as well as recognize

objects in the room. In addition to computer vision, Project LOUIS is based on interaction with

the patient, asking what tasks they would like to do, and offering reminders based on

the tasks the user describes. Machine learning is implemented in this step to better tailor the

reminders to each patient, and their daily tasks. Finally, Project LOUIS will bring these together

with the use of the modules around the house, ensuring no tasks are left undone and creating

a safer environment for the patients.

S21-12

Title: Mobile EMR Software Applications

Team Members: Aniqa Rahim, Sabian Corrette, Sidonia Mohan, and Siddharth

Manchiraju

Advisor(s): Dr. Hana Godrich

Keywords

Android, iOS, MariaDB, ReactJS, React Native, XCode, telemedicine, mobile application,

veterinary hospital, EMR, interface, appointment, prescription

Abstract

Mobile EMR Software applications aim to fill a void in providing clear, quick, and easy

means for clients to monitor records for their animals, set up appointments, and make

payments all from their mobile device. Through implementation on both Android and

iOS devices, the mobile applications target providing a clean interface for the user that

is simple to understand and makes sense. If effective, the project will cut back

on the need for clients to call the animal hospital ahead of time for any need dealing

with their animals, will provide a quicker service time for the users, and relieve the

hospital staff by freeing up the phone line. Overall, the applications aim to take what

is already available for humans in our hospitals and adapt it to be used

in a setting for animal lovers.

S21-13

Title: Eagle-View: Realtime Onboard Monitoring in Agriculture for Weed Clusters

Members: Andrew Dass, Andrew Vincent, Virajbhai Patel, Harsh Desai, Jeffrey Samson

Advisor(s): Dr. Dario Pompili, Khizar Anjum

Keywords

Drones, Agriculture, Real-time monitoring, Convolutional Neural Network, GPU

Abstract

In agriculture, weeds are plants that cannot be cultivated. These weeds form clusters

around and steal valuable nutrition away from crops. In 2021, farmers spent on average

anywhere from $4,000 to $20,000 on chemicals to get rid of weed infestations. By using a

computer vision model that returns real-time results of weed cluster locations from the

aerial images taken by a drone, we coordinate a team of drones to monitor crop fields

and identify weed clusters while properly allocating each drone’s limited resources

such as battery life. By implementing a type of convolutional neural network model,

the Feature Pyramid Network (FPN), drones process images and specifically find weed

clusters in real-time. Since a drone’s in-built CPU cannot process a complex computer

vision model such as an FPN, a GPU is mounted on board the drones to run and process

images through the model to return real-time results. Drones have limited resources;

therefore, real-time results will be important in efficiently allocating

these resources when monitoring large acres of farmland.

S21-14

Title: Contagious Disease Health Monitor

Team Members: Shiwei Song, Krishna Patel, Josh Chopra, Marie Angelou Cabral,

Justice Jubilee

Advisor: Dr. Hana Godrich

Keywords

Medical, IoT, heart rate, monitoring

Abstract

Our capstone project is to create a contagious disease health monitor that helps COVID-19

patients to be monitored using a remote IoT-based health monitor system that allows a doctor

to monitor multiple COVID-19 patients through the internet. This remote system will help address three main

issues: doctors need to constantly monitor patient health, the number

of patients to manage is increasing, and doctors themselves face a risk of infection from each

patient they manage. Our system's dashboard will be able to take into account the data from

our hardware sensors that provide patients' heartbeat, temperature, and blood pressure

readings. By using sensors that include a heartbeat sensor, temperature sensor, and BP sensor,

we will be able to pick up real-time data from patients. This data will be transferred

by connecting the hardware to Wi-Fi. Patient data will be available on an application that we

will develop where doctors will be able to monitor and treat their patients without putting

themselves at risk. Our solution will allow doctors to not only seamlessly monitor patients'

health without risk of infection, but they can expand their dashboard to include metrics for

over 500 patients at once. The system will also be mounted at the patient's bedside and will

constantly be transmitting live data to the dashboard so that doctors can always attend to an

individual once a certain metric is in the red. This fast-paced, live public health monitor can

transform the COVID-19 landscape for the average American without the need of immediate

in-person checkups.

S21-15

Title: Register.io

Team Members: Rishab Ravikumar, Maxwell Legrand, Michael Giannella, Praveen

Sakthivel

Advisor: Daniel Arkins (BlackRock)

Keywords

micro-service, nodes, load-balancing, scalable, distributed systems, redesign, cloud

Abstract

Register.io is a redesign and overhaul of the existing web registration system used to enroll in

classes. Currently the system is prone to being overloaded when flooded with requests. Our

goal is to re-imagine this service as a set of distributed nodes in order to effectively manage

high-load usage and scale up or down as necessary.

S21-16

Title: nCharge - Solar Power Battery Bank

Members: Kush Patel, Mya Odrick, Myles Johnson, Jenna Krause

Advisor: Dr. Hana Godrich

Keywords

Renewable Energy, Power Electronics, Data Analytics, Hardware Design, Solar Power

Abstract

The main objective of our project is building a solar power battery bank that will reduce energy

consumption. We want to develop a modular power bank that can support charging devices

from cell phones to laptops and, if time allows, bigger appliances like televisions, dishwashers,

and refrigerators. The power bank will connect to a mobile application, and the user will be

able to track how much energy is saved through using the power bank. They will also be able

to track when it's low on battery and peak times to charge the bank. We want to optimize our

users' everyday energy consumption, so as our world shifts to cleaner options, our users will

be able to adjust accordingly.

S21-17

Title: QuickShift

Members: Swetha Angara, Ariela Chomski, Bracha ‘Brooke’ Getter, Neha Nelson and

Param Patel

Advisor(s): Dr. Hana Godrich and Andrew Levine (Commure)

Keywords

FHIR, React.js, Commure, Healthcare, Scheduling, Optimization, QuickShift

Abstract

Hospitals spend excessive time and money on scheduling their practitioners. This causes

frustration due to the inefficiency and inability to access critical information in a timely manner.

The project objectives are: (1) Create a user-friendly web interface using Commure’s React

Components; (2) Create a robust back end using Commure’s FHIR-based APIs and

OptaPlanner’s scheduling algorithm.

QuickShift’s user flow starts when a practitioner logs in to their account. From there, they can

see their work schedule and submit requests for days off with the priority of why they want

that day off. That request then shows up as pending on their calendar and gets sent to the

admin for approval. Once the deadline to submit requests has passed, admins can approve or

reject these to get started on generating a work schedule. The schedule gets generated with

feedback on the feasibility. If there is an issue the admin can manually change the constraints

and generate a new schedule. The generated schedule gets displayed on both the practitioner

and admin end.

S21-18

Title: Wireless Power Transfer

Members: Peter Wu, Andrew Simon, Naomie Popo, Crystal D’Souza

Advisor: Dr. Michael Wu

Keywords

Inductor, rectifier, capacitor, resonance, power electronics, inductive coupling

Abstract

The purpose of this project is to create a wireless power transfer device capable of supplying

power to devices within a one- to two-foot radius of the device. The project will consist

largely of two parts: an emitter, which will transmit power via inductive resonant coupling, and

a receiver, which will collect the power and rectify the output voltage. Our aim is to wirelessly

transfer power to a light emitting diode.
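
Resonant coupling depends on tuning the emitter and receiver to the same frequency. For an ideal LC circuit that frequency is f0 = 1 / (2π√(LC)); the sketch below evaluates it. The component values and function names are hypothetical examples, not the team's design values.

```python
from math import pi, sqrt


def resonant_frequency_hz(inductance_h, capacitance_f):
    """Resonant frequency f0 = 1 / (2*pi*sqrt(L*C)) of an ideal LC tank."""
    return 1.0 / (2.0 * pi * sqrt(inductance_h * capacitance_f))


def capacitance_for(frequency_hz, inductance_h):
    """Capacitance that tunes a given inductance to the target frequency."""
    return 1.0 / ((2.0 * pi * frequency_hz) ** 2 * inductance_h)


if __name__ == "__main__":
    # Hypothetical coil and capacitor: 10 uH and 100 nF.
    f0 = resonant_frequency_hz(10e-6, 100e-9)
    print(f"{f0 / 1e3:.1f} kHz")
    print(capacitance_for(f0, 10e-6))
```

With these example values the tank resonates at roughly 159 kHz; real coils add resistance and parasitic capacitance, so measured values will differ.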

S21-19

Title: ColorFind

Members: Kenny K. Bui, Jephtey Adolphe, Nishad Ramlakhan, Pavan Kunigiri

Advisor(s): Dr. Kristin Dana and Dr. Roy Yates

Keywords

application development, stress, Java, America, adult, children, color

Abstract

To combat escalating stress levels and provide a generally engaging user experience, the idea

of Color Find was created. Color Find provides a novel, exciting, and calming way users can

color, different from the leagues of coloring applications that have come before it. In Color

Find, users find themselves discovering hidden images through color. The project uses

decomposed images and compiles layering data, so the user traverses the image one layer

at a time. Every layer is colored, but the user never sees the final, finished picture until they

have completed it. In terms of engineering, Color Find is broken into three main components: the

algorithm responsible for image decomposition, the coloring UI, and the software allowing for the

communication between the two. The image decomposition component inputs an image and

outputs layering data by identifying similar areas of the picture. Similar areas can be identified

based on color and proximity. The algorithm will favor clearly defined, cartoon-style images

because they are relatively uncomplicated and have a wide appeal. The UI component

accommodates all ages and is intuitive and accessible even to young children. Therefore, there

will be relatively few screens to access, and the necessary coloring procedures such as switching

between colors will be made evident and easy to use. Also, Team 19 plans to take the project

even further and modernize the planned design by integrating database compatibility. The

database will allow for images to be stored online, and therefore expand the number of images

users will have access to.

S21-20

Title: CO₂NSUME

Members: Samantha Moy, Shreya Patel, Atmika Ponnusamy, and Nandita Shenoy

Advisor: Dr. Jorge Ortiz

Keywords

sustainability, carbon tracking, machine learning, mobile application, image processing

Abstract

One of the most pressing issues of our time is the damage done to our earth’s atmosphere by

carbon emissions over the past several decades. The mobile application, CO₂nsume

(pronounced like the word “consume”), aims to educate and empower college students so that

they are aware of their carbon footprint and able to make lifestyle choices that minimize

humanity’s impact on the earth’s atmosphere. CO₂nsume utilizes machine learning algorithms

to identify foods via smartphone images and calculate the CO₂ emissions associated with

producing and transporting the foods. It also integrates university dining hall menus in order to

suggest more sustainable (and typically healthier) meals to students. Ultimately, CO₂nsume

aims to raise student awareness of the environmental impacts of their eating habits, and

consequently, encourage a healthier, more sustainable lifestyle.

S21-21

Title: Tracking Cleaning Progress with Computer Vision

Members: Andrew Ko, Edler Olanday, Parth Patel, Piotr Zakrevski

Advisor: Dr. Yuqian Zhang

Keywords

public health, sanitation, augmented reality, object recognition/tracking

Abstract

The onset of the COVID era quickly drew attention to the hazardous nature of microbes

spreading through common surfaces. With a possible lifetime of 72 hours, COVID-19 is capable

of quickly sickening anyone who approaches or touches that surface. The reality of the situation

ushered in a new drive in individuals to disinfect general use surfaces. Maintenance teams are

expected to properly, regularly, and quickly disinfect surfaces between users. Computer vision

and augmented reality, along with a camera and a display, can be used to strengthen trust

both ways. There are many applications in a mobile environment that use these approaches to

track objects and project information into the real world. These applications include SketchAR,

Snapchat filters, and multiple solutions in Google’s MediaPipe. Similar implementations can be

done to solve the issue of mass sanitation. By tracing the movement of a cleaning object (i.e.,

hand or glove) across a surface and the objects on that surface, a heatmap is created that shows

how well a surface has been cleaned. This heatmap can then be displayed to maintenance staff to

keep track of how well they are cleaning a surface. Furthermore, this information can also be

shared with individuals who will use that area to verify how long ago and how well a surface

has been cleaned. As both the cleaner and the user know how well a surface has been sanitized,

both can be assured that neither is susceptible to infectious pathogens.
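
The heatmap idea can be sketched as a grid of visit counts built from tracked positions. This is a simplified illustration that assumes the tracker already yields (x, y) pixel coordinates of the cleaning hand or glove; the grid size, function names, and coverage measure are hypothetical choices.

```python
def build_heatmap(positions, width, height, cell_size):
    """Count how many tracked positions fall into each grid cell.

    positions: iterable of (x, y) pixel coordinates of the tracked hand or glove.
    Returns a list of rows; each entry is the visit count for that cell.
    """
    cols = -(-width // cell_size)   # ceiling division
    rows = -(-height // cell_size)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in positions:
        # Ignore detections that fall outside the surface area.
        if 0 <= x < width and 0 <= y < height:
            grid[int(y) // cell_size][int(x) // cell_size] += 1
    return grid


def coverage(grid, min_visits=1):
    """Fraction of cells visited at least min_visits times."""
    cells = [count for row in grid for count in row]
    return sum(count >= min_visits for count in cells) / len(cells)


if __name__ == "__main__":
    track = [(5, 5), (15, 5), (15, 5), (25, 15), (-1, 3)]
    grid = build_heatmap(track, width=30, height=20, cell_size=10)
    print(grid, coverage(grid))
```

Cells with low counts indicate areas the cleaner has not yet wiped, which is the information an augmented reality overlay could display.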

S21-22

Title: User Behavior Analytics

Members: Fares Elkhouli, Osama Trabelsi, Shardul Patel, Steve Hill, and Dimitriy

Zyunkin

Advisor: Dr. Yuqian Zhang

Keywords

cybersecurity, insider protection, user behavior analytics

Abstract

Cybersecurity has evolved from a mathematical abstraction of the 60s and 70s to being an

integral part of our everyday life. More and more, users are trusting corporations with sensitive

data such as PII (personally identifiable information), medical records, and employment history.

Unfortunately, many conventional cybersecurity measures employed today are not well

equipped to deal with the ever-evolving landscape of attack vectors employed by malicious

intruders. Most cybersecurity tools used today such as firewalls, DNS servers, and proxies

operate under an understanding of a secure perimeter - the notion that once the external

components of the network are secure, the interior is safe from cyberattacks. This approach is

poorly equipped to deal with malicious insiders and compromised accounts within the domain.

Perimeter security tools offer no internal visibility into the domain, rendering the system

vulnerable to hackers who have obtained the login credentials of an authorized user, or malicious insiders

who aim to obtain sensitive data from within. User Behavior Analytics (UBA) offers a novel

approach to detecting and preventing cyberattacks from within the domain. By collecting

metadata records on each user, UBA builds a model for each user in the domain and establishes

their respective ‘norm’. What are their normal working hours? How many files do they access

each day? What sort of custom scripts do they run from their account? What does their DNS

traffic look like? This metadata gives an understanding of the standard workflow of the user.

Any deviations from the normal user behavior are flagged by the UBA algorithm, and preset

tasks are carried out in the event that a particular alert is triggered by a user.
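
One simple way to express a per-user 'norm' and flag deviations from it is a mean and standard deviation per metric. The sketch below is an assumption-laden illustration: the metric names, the three-sigma threshold, and the function names are hypothetical and do not describe the team's actual UBA algorithm.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Per-metric (mean, standard deviation) from a user's past daily values.

    history: dict mapping a metric name to a list of at least two observations.
    """
    return {metric: (mean(values), stdev(values)) for metric, values in history.items()}


def flag_deviations(baseline, today, threshold=3.0):
    """Return metrics whose value today is more than `threshold` standard
    deviations away from that user's own norm."""
    flagged = []
    for metric, value in today.items():
        mu, sigma = baseline[metric]
        if sigma == 0:
            deviates = value != mu  # any change from a constant habit stands out
        else:
            deviates = abs(value - mu) / sigma > threshold
        if deviates:
            flagged.append(metric)
    return flagged


if __name__ == "__main__":
    # Hypothetical history for one user.
    history = {"files_accessed": [20, 22, 19, 21, 18], "login_hour": [9, 9, 9, 9, 9]}
    baseline = build_baseline(history)
    print(flag_deviations(baseline, {"files_accessed": 400, "login_hour": 9}))
```

A production system would likely use richer models and many more signals, but the structure is the same: learn what is normal for each user, then alert on departures from it.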

S21-23

Title: Automated Fluorescent Microparticles Counting App

Members: Yongyu Xie, Yichen Fan, and Mufeng Zhu

Advisor(s): Dr. Umer Hassan

Keywords

smartphone-based fluorescent microscope, microparticles counting, image processing, mobile

application

Abstract

Fluorescent microscopes are widely used to observe fluorescent objects. However, traditional

benchtop fluorescent microscopes are limited by high cost and lack of portability. Thus,

smartphone-based fluorescent microscopes have emerged, which capture images using the smartphone

camera with the help of an external optical device and conduct image processing, such as

microparticle counting, on other computers. To take full advantage of the smartphone’s

CPU, we plan to develop an automated fluorescent microparticle counting app, where image

capture, fluorescent microparticle counting, and result display can all be

conducted on the smartphone itself. Our app is based on the existing smartphone-based

fluorescent microscope design but removes the need for a separate computer. Compared

with existing smartphone-based fluorescent microscopes, our app should be able to not only

capture and display images of fluorescent objects but also count microparticles

using the smartphone’s own processor. The core of the app is the image processing part, and

our task is to achieve as high a counting accuracy as possible.
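
One common way to count bright particles in an image is to threshold it and count connected bright regions. The sketch below assumes a grayscale image stored as a list of rows and a fixed brightness threshold; both are illustrative assumptions, since the abstract does not state which image processing method the app uses.

```python
def count_particles(image, threshold=128):
    """Count 4-connected regions of pixels at or above the threshold."""
    rows = len(image)
    cols = len(image[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] < threshold or seen[r][c]:
                continue
            # Found an unvisited bright pixel: flood-fill its whole region.
            count += 1
            seen[r][c] = True
            stack = [(r, c)]
            while stack:
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
    return count

# Hypothetical 4x5 image containing three separate bright spots.
sample = [
    [0, 200, 200, 0, 0],
    [0, 200, 0, 0, 255],
    [0, 0, 0, 0, 255],
    [180, 0, 0, 0, 0],
]
print(count_particles(sample))
```

Touching or overlapping particles would be merged into one region by this approach, which is one reason counting accuracy is the hard part of the task.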

S21-24

Title: Driver Attention Detection (DAD)

Members: Mazal Choudhury, Jianhong Mai, Kang Jun Lee, and Jonathan Ye

Advisor(s): Dr. Maria Striki

Keywords

Safety, Control, Awareness, Security, Trouble-free

Abstract

Background: Traffic accidents due to inattentive driving have cost many lives and will continue

to cause problems if not addressed. To address this problem, we have developed the Driver

Attention Detector, which will incorporate a camera in the car that

will be aided by computer vision to detect the driver’s eye movements and facial expressions

to examine whether the driver is paying attention to the road and remind the driver to focus

if needed.

The system will be implemented as an app installed on a smart Android-based dashboard,

which will be connected to an Arduino and camera. The camera will send visual data

to our software, which will use computer vision with OpenCV to scan the user’s

face and detect their emotional state as well as their eye positions. This will allow us to detect

if the user is distressed, experiencing road rage, unresponsive, or simply not paying attention,

and to deploy different measures accordingly.

S21-25

Title: Deep Learning Market Analysis Tool

Members: Alex Malynovsky, Adil Rashid, Aryaman Narayanan, Steven Negron, and

Rishabh Chari

Advisor: Dr. Roy Yates

Keywords

Deep Learning, Algorithms, Autonomous trading, Market Analysis

Abstract

Humans are prone to making mistakes, whether it be in their personal lives or on Wall Street.

Unlike computers, we are unable to process information in a way that is

completely impartial and neutral. Today, this advantage is being utilized in a variety of ways on

the stock market in order to aid investors in turning a profit. However, many of the methods

being used rely on well-known algorithmic approaches tailored to the observation of certain

market indicators in order to increase ROI for the investor.

Our team aims to implement a machine learning algorithm that learns from the historic data of

various stock indices in order to make profitable predictions without any reinforcement (human

intervention). We plan on feeding the algorithm historic stock market data, primarily the stock

open, close, volume, and adjusted close, to generate predictions. We also plan on using QuantConnect to

backtest and paper trade our algorithm.
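
As a rough illustration of how historic data could be prepared for such a model, the sketch below turns daily rows of (open, close, volume, adjusted close) into fixed-length input windows paired with the next day's adjusted close. The window length, the choice of target, and the function name are assumptions made for this example; the abstract does not specify them.

```python
def make_windows(rows, window):
    """Build (features, target) pairs from daily (open, close, volume, adj_close) rows.

    features: the flattened values of `window` consecutive days.
    target:   the adjusted close of the day right after the window.
    """
    samples = []
    for i in range(len(rows) - window):
        features = [value for row in rows[i:i + window] for value in row]
        target = rows[i + window][3]
        samples.append((features, target))
    return samples

# Hypothetical five days of data: (open, close, volume, adjusted close).
rows = [
    (1, 2, 100, 2),
    (2, 3, 110, 3),
    (3, 4, 120, 4),
    (4, 5, 130, 5),
    (5, 6, 140, 6),
]
samples = make_windows(rows, window=2)
print(len(samples), samples[0])
```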

VaderLexicon

S21-26

Title: Solar Drone

Members: Sabbathina Agyei, Justin Rucker, Taranvir Singh, Juergen Benitez, Jagmeet

Singh

Advisor(s): Dr. Michael Caggiano

Keywords

Drone, Battery life, solar power, app dev

Abstract

People may not realize it, but the need for drones is growing before our eyes. The problem at

hand is battery life: whether it be a 100% solar drone or a battery-powered drone,

battery life has always been an underlying issue when it comes to

drones. Our capstone team is proposing a fix to this problem by creating a design that

combines battery power and solar power to help push the drone to

new limits. Drones are expanding their abilities by being used in areas such as filmmaking,

surveillance, industrial inspection, powerline inspection, and roof inspection. These drones

can cost thousands. We are going to provide a cost-effective approach to high-quality drones

by designing our own application that exposes the features of the drone, such as

its camera, location, battery life, and more.

S21-27

Title: Slide-Puzzle: Localized Repositioning of Adversarial Inputs

Members: Neel Amin, Nathaniel Arussy, Rizwan Chowdhury, Talya Kornbluth

Advisor: Dr. Saman Zonouz

Keywords

Machine learning, adversarial attacks

Abstract

The world of machine learning is rapidly growing, and with it comes an inherent danger of

possible attacks. From biometric scanners to self-driving cars, the world of image classification

is especially fraught with issues that could arise when a neural network does not function

correctly. Many papers have been written on possible attacks and countermeasures to ensure

safety. Our goal is to understand the possible threats and defenses, and to assess the

effectiveness of an existing defense that uses input transformations (Guo et al. 2018[1]) using

some newer attacks and models. Input transformations are any change to an image that might

change the pixel arrangement or values that make it up, such as cropping and rescaling or

changing colors. Time permitting, we can then proceed to develop further ideas for defenses.

We hope that through our research and assessments, we can find ways to improve on the

pre-existing defenses or create new, effective, and efficient defensive techniques against

adversarial attacks.

Our goal is to research the current edge of the adversarial machine learning field with the

ultimate goal of making some contribution to the field. Machine learning is becoming more

prevalent in all aspects of society. Applications of machine learning algorithms can be found in

instances ranging from security at airports to navigation systems for autonomous vehicles.

With widespread use of machine learning algorithms comes the risk of severe damage if the

machine learning models were compromised. Adversarial machine learning is the field

dedicated to the study of how weaknesses in machine learning algorithms can be exploited to

cause erroneous results or information leakage and in turn how to prevent such actions. Our

project will involve exploring different types of adversarial attacks and defenses as well as

gauging their performance against each other.

S21-28

Title: Mo’ Light, Mo’ Communications

Members: Collin Enright, Ceara Gagliano, Pablo M Hernandez Juarez, Anish Seth, John

Plaras

Advisor(s): Dr. Anand Sarwate

Keywords

RF, downlink, uplink, optics, communication, security.

Abstract

Radio communication has become a very cluttered atmosphere. With the current and

upcoming massive growth of electronics, means of communication are getting harder to

separate. An alternative for certain applications is optic communication – which is desirable

when a communicator has a line of sight with the target. Intercommunication and change

between light and RF can also cater to the need of communication for each specific device.

Light communication creates another level of security as you would have to be in line of sight

to intercept the transmission. Our approach will consist of a radio sending out a modulating

signal to a receiver within a black box, which will represent an RF uplink receiving module of an

antenna-to-optical converter. The signal received will be filtered to eliminate surrounding noise

and amplified to reach a level that the laser board will be able to read. This laser board will

convert the RF signal to optical. The optical signal will be amplified, modulated, and transmitted

via a blue LED. Because we expect to perform this test effectively only at a range below 5

feet, blue light should be a high enough frequency for the signal to carry effectively. This signal

will then be sent to our RF downlink module. After it is received by our optical receiver, we

will attenuate and demodulate the signal, which will then be converted back to RF, amplified,

filtered, and transmitted via antenna to a second radio that will demodulate the signal and

make it audible.

S21-29

Title: Mask and Temperature Recognition System (MATRS)

Members: Ansh Gambhir, Rishi Shah, Anurva Saste, Srinivasniranjan Nukala, Kyle Tran

Advisor: Dr. Umer Hassan

Keywords

COVID-19, Mask Identification, Temperature, Object Detection, Machine Learning

Abstract

Our project aims to reduce the rapid spread of COVID-19 by making sure people have an

acceptable temperature and are wearing suitable masks prior to entering a building. This will

be done by using machine learning and object detection combined with computer vision to see

if a mask can be identified on the user. An IR sensor will also be used to measure the candidate's

temperature. These electrical components will be connected to a Raspberry Pi, which will act

as our centralized component and main communications hub for our device. These

components will work in conjunction to make sure that the two aforementioned conditions are

met and will not let the user into the building until they are satisfied. Overall, the device we

intend to make will be a tremendous step towards reducing the spread of COVID-19, while also

spreading awareness of how it can be prevented.

S21-30

Title: Mobile Application for Speech Therapy

Members: Duc Nguyen, Samuel Minkin, George Soto, Gaurav Sethi

Advisor(s): Dr. Bo Yuan

Keywords

Deep Learning, Automatic Speech Recognition, Voice recognition, Mobile Application, Flutter,

Firebase

Abstract

There is a definite need for ways for those with issues pronouncing words to get help so they

can speak as smoothly as everybody else. Although there are several school programs meant

to resolve this issue, they are often not enough. We will create a mobile application that

allows users to improve their speaking, reading, writing, and listening skills on their own. One

of the main features will record a user’s voice when he or she speaks and analyze the

pronunciation of each word using speech recognition. The analysis will display a resulting

view with the input either being designated as correct or wrong. Additionally, if the input is

determined to be incorrect, the user will be able to replay the input and play the correct

pronunciation. This will help them in discovering areas where they may have pronunciation

issues that need more practice. At the same time, this will help them with their reading,

listening, and speaking skills. Users will practice reading common words or phrases, speaking

them into the device, and then listening to their pronunciation, which will reinforce those

skills. Furthermore, we can add features for children to learn and play at the same time. This

application can also help children from around the world to learn English.

S21-31

Title: Occupancy Monitoring System with Computer Vision Algorithms

Members: Samantha Cheng, Kylie Chow, Sonia Hua, Sneh Shah

Advisor(s): Dr. Yuqian Zhang

Keywords

social distance, maximum capacity, cleanliness, computer vision, safety, occupancy sensor

Abstract

The Occupancy Monitoring System will keep track of the traffic in a small area to avoid

spreading infectious diseases and to keep these areas clean. Currently, many stores keep track

of the number of customers by stationing an employee by the doorway to monitor the statistics

manually. However, this process becomes increasingly inefficient in establishments such as

department stores where there are multiple entries, as well as clusters of people going in and

out at once. Furthermore, as indoor dining opens up, bathrooms are another important

entrance to monitor since the foot traffic directly relates to the sanitation quality. The data

collected can help store management determine how often the bathrooms should be cleaned

and can help notify customers if the bathroom is safe. The system will have a camera module

at the entrances of these areas that monitors incoming and outgoing traffic with the use of

computer vision. This data will be used to determine if the room/area needs cleaning or if the

maximum capacity of a room is exceeded. The camera would keep track of how many people

have been in this area and once the number passes a certain threshold, the management will

be notified that the bathroom needs to be cleaned. Customers would also be notified if the

area is safe to enter/if it has been cleaned recently. This system can help many indoor facilities

safely open back up since it would be closely regulating areas that encounter a large number

of people.
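
The counting and notification logic described above can be sketched as a small state tracker. The sketch assumes the computer vision stage already reports individual enter and exit events; the class name, method names, and threshold values are hypothetical.

```python
class OccupancyMonitor:
    """Track current occupancy and visits since the last cleaning."""

    def __init__(self, max_capacity, cleaning_threshold):
        self.max_capacity = max_capacity
        self.cleaning_threshold = cleaning_threshold
        self.current = 0
        self.visits_since_cleaning = 0

    def person_entered(self):
        self.current += 1
        self.visits_since_cleaning += 1

    def person_exited(self):
        # Never go below zero, even if an entry was missed by the camera.
        self.current = max(0, self.current - 1)

    def over_capacity(self):
        return self.current > self.max_capacity

    def needs_cleaning(self):
        return self.visits_since_cleaning >= self.cleaning_threshold

    def mark_cleaned(self):
        self.visits_since_cleaning = 0

# Hypothetical limits: at most 2 people at once, clean after 3 visits.
monitor = OccupancyMonitor(max_capacity=2, cleaning_threshold=3)
for _ in range(3):
    monitor.person_entered()
print(monitor.over_capacity(), monitor.needs_cleaning())
```

Keeping the two counters separate reflects the two uses of the data in the abstract: the live count drives the capacity check, while the cumulative count drives the cleaning notification.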

S21-32

Title: Post-fall Syndrome: A Sensor Based Solution

Members: Aravind Manivannan, Saikiran Nakka, Elizabeth Ward

Advisor: Dr. Umer Hassan

Keywords

Post-fall syndrome, sensors, machine learning, geriatric medicine, wearable technology

Abstract

Post-fall syndrome is a hesitancy or anxiety that an individual may develop after suffering a fall,

most commonly experienced by the elderly. This hesitancy leads to a change in the individual’s

walking patterns, whether consciously or not, which unfortunately tends to make them even

more likely to fall than before. Our project aims to help with this condition by creating a

wearable sensor device that will collect data about the user’s movement as they walk. We will

develop a machine learning algorithm to analyze the gait parameter data for optimality, and

the user will be able to view a report of the findings and advice on how they can improve their

walking via mobile application.

S21-33

Title: Tongue-to-Computer Interface

Team Members: Joe Shenouda, Peter Rizkalla, Fady Shehata, Matthew Hanna,

Andrew Rezk

Advisor: Dr. Yingying Chen

Keywords

Biosensors, Neuro Engineering, Machine Learning, Human Computer Interface

Abstract

The goal of this project is to provide a way for people to interact with their electronic devices

through their tongue movements. This would benefit people who have certain disabilities that

prevent them from interacting with their devices normally. In order to extract information

regarding tongue movement, certain bio sensors that can read EEG and EMG signals would be

used. A headset would be 3D printed in order to hold these sensors in place. The sensors would

be placed near the back of the ear so that they can capture the EEG and EMG signals

accurately. The EEG signal contains information regarding brain activity while the EMG signal

contains information regarding muscular activity. Signal processing techniques can be carried

out in order to extract information regarding tongue movements from these signals. This

information can then be used to classify each type of tongue movement through the use of

some machine learning algorithms. Then, an app will be designed to take this information and

use it to control a device in a similar manner as to how the accessibility feature on a smartphone

would. Overall, this would allow a user to move their tongue and tap different teeth in a certain

way in order to communicate and control their device with ease solely from the movements of

their tongue.

S21-34

Title: SMART Glove

Members: Erik Castro, Brian Cheng, Nicholas Chu, Gary Qian, Thomas Luy

Advisor: Dr. Hana Godrich

Keywords

sign language, education, application development, wireless communication, sensors

Abstract

We want to make a SMART Glove that can recognize the position of the subject’s hand to

execute predetermined actions. Our specific focus on this project is to help people learn sign

language. This will be done by using the glove’s sensors as feedback to help users learn sign

language interactively. The glove will be able to detect the bend of every finger, rotation of the

hand, and acceleration of the hand. The glove will be wirelessly connected to a smartphone

app which will have sign language learning modules. The app will be split into two separate

sections, one for learning, and the other for testing the user on their sign language accuracy. In

the learning section, pictures will be provided for the user to mimic in order to move on to the

next letter. Feedback will be given if the fingers are not in the right position. The user would

also have the option to choose which letters to practice more. In the testing section, both

games and quizzes will be used to test the user’s sign language knowledge. We will use the

results of these tests to give feedback on the letters the user may be struggling to sign. Other

than sign language, this glove and app configuration could be set up for other creative functions

such as controlling your smartphone, giving PowerPoint presentations using gestures, and

directing remote controlled cars.
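
One simple way to turn finger-bend readings into a letter and per-finger feedback is to compare them against stored templates. The sketch below is only an illustration under stated assumptions: the bend values are normalized to the range 0 (straight) to 1 (fully bent), the template numbers are made up for the example, and hand rotation and acceleration are ignored.

```python
import math

# Hypothetical templates: bend per finger (thumb, index, middle, ring, pinky).
TEMPLATES = {
    "A": (0.2, 1.0, 1.0, 1.0, 1.0),
    "B": (0.9, 0.0, 0.0, 0.0, 0.0),
    "L": (0.0, 0.0, 1.0, 1.0, 1.0),
}

def classify(reading, templates=TEMPLATES, max_distance=0.5):
    """Return the letter whose template is nearest, or None if none is close."""
    best_letter, best_distance = None, float("inf")
    for letter, template in templates.items():
        distance = math.dist(reading, template)
        if distance < best_distance:
            best_letter, best_distance = letter, distance
    return best_letter if best_distance <= max_distance else None

def finger_feedback(reading, target, tolerance=0.25):
    """Return the indices of fingers that are too far from the target bend."""
    return [i for i, (r, t) in enumerate(zip(reading, target))
            if abs(r - t) > tolerance]

reading = (0.1, 0.6, 0.9, 1.0, 0.95)
print(classify(reading), finger_feedback(reading, TEMPLATES["L"]))
```

In the learning section described above, `finger_feedback` corresponds to telling the user which fingers are out of position for the letter being practiced.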

S21-35

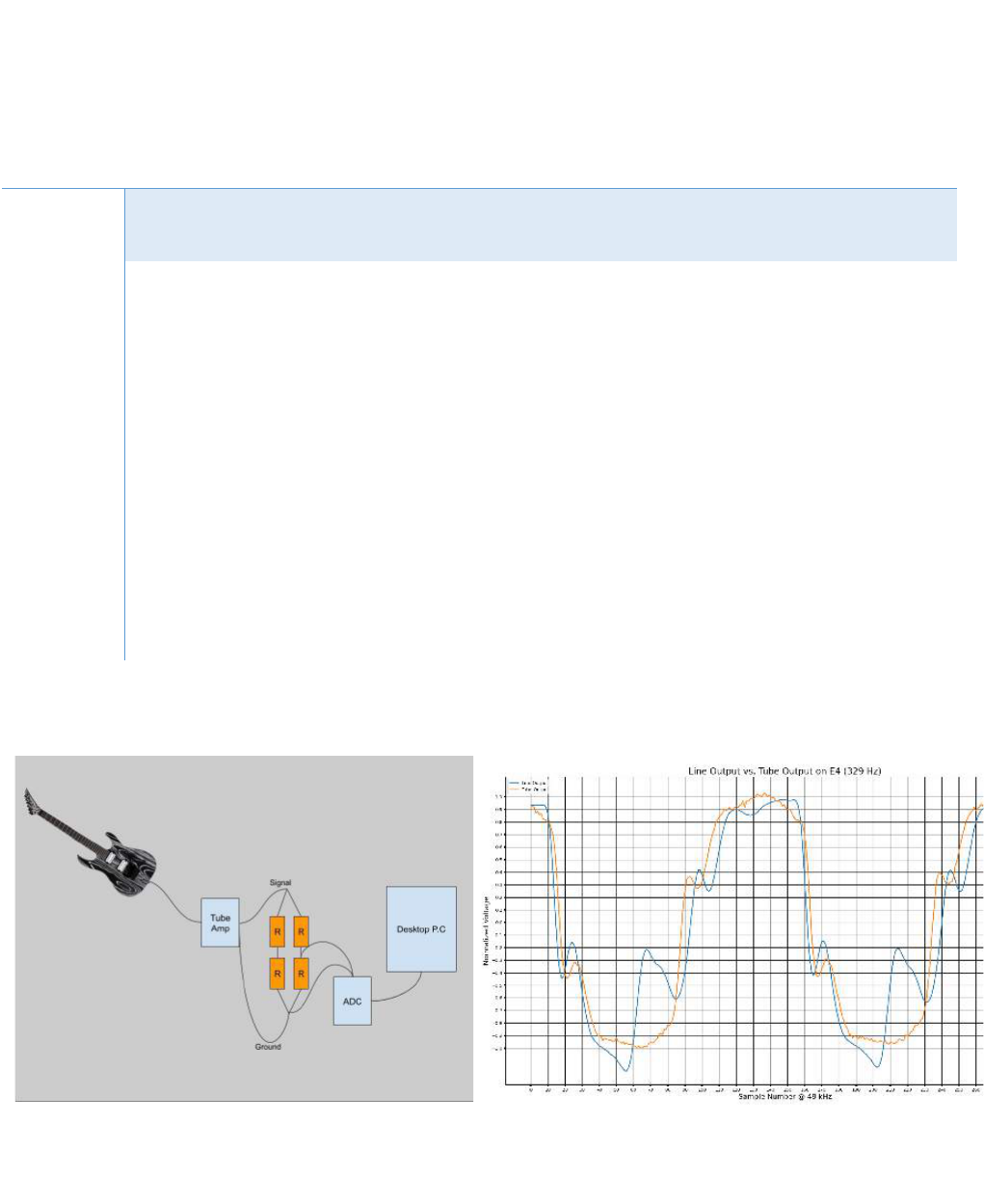

Title: A Machine Learning Based Model for Replicating Vacuum Tube Audio

Members: Nicholas Cooper, Joseph Florentine

Advisor(s): Dr. Rich Howard, Dr. Kristin Dana (Rutgers University), Dean Telson and

Dillion Houghton (L3Harris)

Keywords

Machine Learning, Guitar Amplification, Vacuum Tubes, Signal Processing, PCB design

Abstract

Despite being developed over 115 years ago, vacuum tubes remain at the forefront of guitar

amplification today. Their highly desirable overdrive characteristics and other subjective

auditory qualities have proved to be the gold standard for guitarists, particularly those involved

in genres such as Rock and Metal. However, vacuum tubes are power inefficient and fragile,

and must be replaced over time. Due to the inherent high impedance nature of vacuum tube

inputs and outputs, tubes require high voltages to operate. As a result, heavy transformers are

necessary for voltage supplies and output impedance matching, increasing the overall weight

significantly. Clearly, there are good reasons to want an amplifier that avoids these antiquated

technologies. Sadly, most solid-state amplifiers and software plugins fall short of this elusive

“tube sound”, as vacuum tubes are notoriously difficult to model. To this end, we propose a

novel method of emulating the vacuum tube in software, using machine learning algorithms.

We investigate the quantitative differences between current solid state and vacuum tube

amplifiers on real and synthetic signals, and experiment with several styles of neural networks.

We will implement our model on the Nvidia Jetson mobile computing platform, for integration

with a speaker cabinet, volume control, and equalization.

S21-36

Title: Viroid

Members: Karneet Arora, Guru Ragavendran Sivaram, Saagar Patel

Advisor: Dr. Roy Yates

Keywords

Wi-Fi, Object Classification, COVID-19

Abstract

Inspired by the recent COVID-19 Pandemic, the ViRoid bot aims to help control the spread of

coronavirus while simultaneously gathering data in order to distinguish patterns, predict future

outbreak hotspots, evaluate demographics, and find out more information about the virus. The

bot comprises a fusion of multiple sensors in a portable package that scans any person

at entrances of businesses, events, homes, and/or any venue. Using sensors such as infrared

temperature scanners and weight/height scales, we can accurately predict if someone is displaying

any symptoms of a disease. The robot would also employ a self-sanitation mechanism, which

would clean the robot after each use so that it is sanitized and safe to be used again by another

person. Individuals who seem to display symptoms of the virus, such as a high temperature,

would be denied entry to the business or venue. All computation and sending/receiving of data

would be handled with cloud technology using a server. There would also be a convenient

website for admins, showing the results for the day/week/month/year along with other quick

metrics at the tap of a hand. This website would also support a live feed of the robot doing its

work of testing the public. With large-scale compatibility, this data can be used to

control spreads, allocate more resources in specific areas, and better understand the side

effects of any affliction.

S21-37

Title: IoT Security for the Layman

Members: Ajay Vejendla, Jake Totland

Advisor(s): Dr. Wade Trappe

Keywords

IoT, Security

Abstract

IoT devices are becoming an increasingly present item in the technologically connected

household. The home market is expected to grow from $24.8 billion in revenue for 2020 to as

high as $108.3 billion in 2029. Despite this, there’s a distinct gap in low-cost, security-focused

IoT software and appliances, a niche that needs to be filled given the relative

insecurity of IoT devices currently, where even major companies like Ring have produced

insecure software in the past.

Current IoT gateways require lots of manual configuration and are too feature-rich for people

without the requisite technology experience. The objectives of this project are to detect and

automatically quarantine compromised devices and alert the user through an accessible UI.

S21-38

Title: F-SCAN DS: Foot Splinter, Cut, and Nick Detection System for the Purpose of

Preventing Amputations in Diabetics

Members: Amber Haynes, Maria Rios

Advisor: Dr. Jorge Ortiz

Keywords

Biomedical, Image detection, 3D Printing, Machine Learning, Sensors, App Development

Abstract

With a substantial portion of the US population suffering from diabetes, we feel that it is

important for there to be a tool that will help patients identify any cuts that could turn life-

threatening. Since diabetics have a weakened immune system and neuropathy, they are prone

to cuts on their feet and often are unable to detect them. Our goal is to create an imaging

system that will help diabetics identify these cuts/injuries and relay that information to a

healthcare professional. This system will be split up into 3 major components. The first

component is the physical F-SCAN DS scanning device. The device will contain a hardware

mechanism that sends information to the Machine Learning (ML) System and the app. The ML

System, which is the second component, works to identify any cuts or injuries in the image. The

ML System then calls the app. The app, the third component, receives information from the ML

System regarding the type of injury and the location. One of the major constraints we face with

this project is assuming that the user already owns a smartphone. Additionally, the user would

need to own an Apple device since the app will only be compatible with the iOS system for the

time being. In the future, we hope to make the app compatible with Android. Information

regarding our project timeline can be found in a table at the end of the report.

S21-39

Title: M.A.G.E. – Mobile App Garden Experience

Members: Gregory Giovannini, Jikai LaPierre, Max Lightman

Advisor: Dr. Athina Petropulu

Keywords

horticulture, photo-diet, drip irrigation, Raspberry Pi, smart timer

Abstract

Beginner and intermediate gardeners are often unaware that the quality of light plays just

as big a role as proper hydration in effective plant growth. The proposed project is made

to meet the needs of beginner to intermediate gardeners by giving them access to a cheap,

easy-to-use gardening infrastructure. The primary objective of this project is to pair a user-

friendly mobile application with simple installable hardware for automating and expanding

one’s gardening experience. The user will be able to manage their plant care easily through the

application and hardware while still being able to improve their own skill by learning the

process of horticulture themselves through planting and harvesting. The proposed project

would use LED lights to provide a custom photo-diet for plants from selected light colors.

Additionally, the application will integrate with a drip irrigation system to provide automatic

watering for plants on a user-configurable timer. Similar automatic planters on the market

frequently require individual setup by pairing lighting systems with other water systems or vice-

versa. The proposed project will give an all-in-one home gardening experience that can be

monitored and controlled from a simple mobile app interface, and the height of the lights on

the roof will be adjustable through the app. Additional features can also be added to meet

more needs of indoor gardening and improve the experience, such as an automatic system to

adjust the height of the LED lights in sync with the plant’s growth without need for manual

adjustment through the app.

S21-40

Title: Smart Port Scooter

Members: Khalid Masuod, Arshad Vohra, Brendan Lindsey, Abdullah Nasir

Advisor: Dr. Hana Godrich

Keywords

storage, cloud, Wi-Fi, Bluetooth, app

Abstract

Living on a college campus in our generation, we are seeing a new wave of transportation take

over. So out with the old bikes and unreliable Rutgers buses, and in with a new form of

transportation that integrates the next big technologies with traditional forms of

transportation, the smart E-Vehicle Kit. We wanted to tackle this transportation dilemma

ourselves by creating our own optimized, reliable prototype.

The objectives of the project are to implement a program that connects the port to the Google Maps

API; develop a port system with trackable ports that are visible on a scooter display and

provide a centralized drop-off location for scooters; successfully ping the server upon detecting

movement; report location data to a central server via a user’s cellular connection; and

implement a discount system for returning vehicles.

S21-41

Title: Cryptocurrency Sentiment Analysis Model

Members: Anmol Mynam, Nikhil Kumar, Yuhang Cao

Advisor: Dr. Emina Soljanin

Keywords

Crypto-Market Prediction Model, Deep Reinforcement Learning, Sentiment Analysis, NLP,

Quantitative Trading, Twitter, Reddit

Abstract

Using Twitter and Reddit, we will analyze user submissions to the respective sites. We will

organize a dataset of submissions that mention a cryptocurrency or company name, or that

respond to a submission containing one of these terms. We will run the submissions through a

program to look for certain terms that indicate a sentiment. We will compute a table with

crypto/company names and the associated sentiment results for each crypto/company. We will

then aggregate those sentiments in conjunction with other parameters like time period, price

movement, and volumes of coins traded. Based on the aggregation of submissions, sentiment

scores, and other mentioned parameters, we will predict the trend of crypto in the near future. We will

train this prediction using deep reinforcement learning. By running our captured data through

an algorithm, we will compare it to past crypto market movement, and past social media

sentiment, to compare and train our algorithm. After our model has been trained we will

continue to tweak the parameters, and backtest it on current data to see how accurate we are.

In addition to the algorithm and data collection, we will also implement a bot that will buy or

sell crypto based on the recommendation of the algorithm. We will then test the algorithm and

see whether our bot turns our predictions into a profit or loss. After this, we will continue to

tweak our parameters for the algorithm and bot to optimize profit and better trades.
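
The term-matching and aggregation steps described above can be sketched as follows. The word lists, the scoring rule (positive matches minus negative matches), and the sample posts are illustrative assumptions only; the prediction and trading stages are not shown.

```python
from collections import defaultdict

# Hypothetical sentiment terms; a real lexicon would be much larger.
POSITIVE = {"bullish", "moon", "buy", "gain"}
NEGATIVE = {"bearish", "crash", "sell", "scam"}

def score(text):
    """Return (number of positive terms) minus (number of negative terms)."""
    words = text.lower().split()
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def aggregate(submissions):
    """Map each asset name to the mean sentiment score of its submissions."""
    scores = defaultdict(list)
    for asset, text in submissions:
        scores[asset].append(score(text))
    return {asset: sum(values) / len(values) for asset, values in scores.items()}

# Hypothetical submissions as (asset, text) pairs.
posts = [
    ("BTC", "Feeling bullish time to buy"),
    ("BTC", "this looks like a crash"),
    ("DOGE", "total scam sell now"),
]
print(aggregate(posts))
```

This simplified scorer splits on whitespace only, so punctuation attached to a word would prevent a match; real submissions would need tokenization and cleaning first.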

S21-42

Title: Capstone Management App

Members: Andrew Awad, Visshal Suresh, Nate Smith, Ketu Patel

Advisor: Dr. Bo Yuan

Keywords

Martial art, training, DensePose, Caffe2

Abstract

We are providing an affordable and safe way to stay in shape during this pandemic. Usually,

martial arts trainers are hard to find and most people cannot afford them. We are providing a

stay-at-home alternative for training within one’s own household. We are

providing an automated training system to train at home under the supervision of machine

learning algorithms. We are using high-speed motors on a sturdy metal frame with a target

attached in the center that will use the camera input from the DensePose full body mapping

algorithm and project the trajectory of the movements of the trainee in the camera frame to

move the target to avoid getting hit. A solution like this has not yet been implemented. The

target audience would be UFC fighters, boxers and athletes that are trying to stay in shape and

trying to improve their target practice as well as work on their reflexes. This would serve as a

repetitive exercise to improve one’s ability according to personalized needs.

S21-43

Title: Fix My Mix

Members: Omar Faheem, Akshat Parmar, Gabriel Ajram

Advisor: Dr. Predrag Spasojevic

Keywords

TensorFlow, Heroku cloud, content based filtering

Abstract

Fix My Mix is a daily music recommendation service based on deep learning. Currently, music

platforms recommend tracks based on your previous listening history. Along with your history,

songs may be recommended based on what similar listeners are listening to. Our web

application, Fix My Mix, allows users to create an account and grant us access to their Spotify

accounts. Using Spotipy, a Python library for the Spotify API, we are able to collect data such as

relevant time information and statistics regarding the composition of the music being streamed

by users. These data points serve as nodes in our model. Our model is based on

Long Short-Term Memory (LSTM) Recurrent Neural Networks. Through user feedback and Spotify’s

large music streaming dataset, we are able to provide a continuously improving playlist. As the

project name, Fix My Mix, suggests, we are able to provide users with a more efficient listening

period by reducing the time spent shuffling through songs. Within the past month, Spotify has

received a patent for a similar concept regarding music recommendation based on emotion.

Spotify is using speech recognition to figure out what setting the user resides in. For example,

if Spotify hears you are in a party setting, it will recommend party songs. Unlike with Spotify’s

approach, users need to be listening to music for our model to make consistent changes. Although

collecting more data points may make our model more complex, it would allow for a much better

listening experience. Therefore, we have gone as far as breaking down every song that meets

our criteria as a successful “listen” to understand why users allowed the song to play over

others that were rapidly skipped or briefly listened to.

S21-44

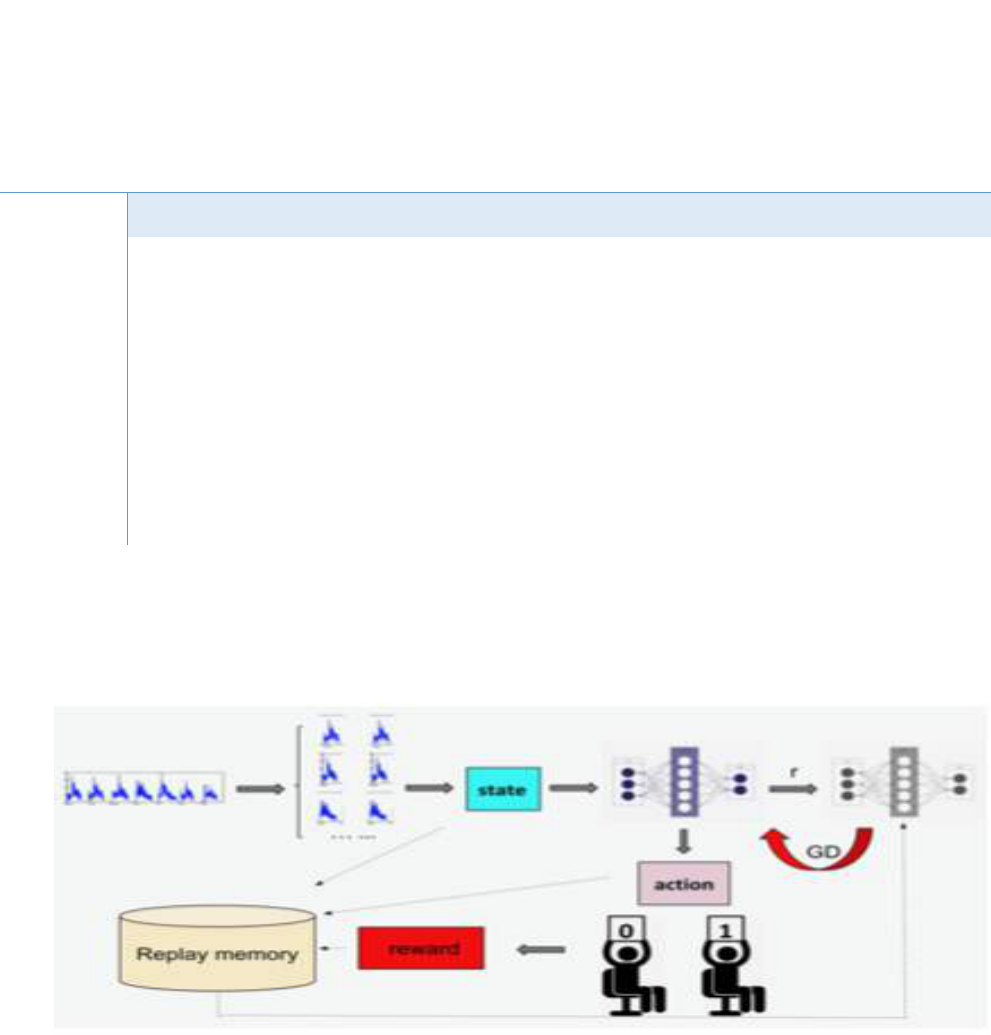

Title: RLAD: Time Series Anomaly Detection through Reinforcement Learning and

Active Learning

Members: Qizhen Ding

Advisors: Dr. Jorge Ortiz and Tong Wu

Keywords

Detection, Machine Learning

Abstract

Scalable data collection and labeling is a necessary feature for smart environments based on

ubiquitous sensing and computing. However, this is challenging in highly dynamic environments

where a multitude of applications occur simultaneously, as labels are costly and users can

forget to label in real time. This work proposes Maestro, a data collection and labeling

platform that can sense ambient information in real time, provide context to put less burden

on users for offline labeling, and evolve as a system at scale to many users. We demonstrate

how Maestro, coupled with a web application to enable a user-centric labeling process, can be

used for quick deployment and data collection for machine learning enabled sensing

applications. Preliminary results show that we can achieve accuracy >95% for our applications

(occupancy counting and activity recognition) by using only <10% of labeled training data and

active learning.
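
Active learning reduces labeling effort by asking users to label only the samples the model is least sure about. The abstract does not say which query strategy is used, so the sketch below swaps in uncertainty sampling, a common choice, for a binary classifier: it picks the samples whose predicted probability is closest to 0.5. The function name and the sample probabilities are hypothetical.

```python
def select_for_labeling(probabilities, budget):
    """Return indices of the `budget` samples with probability nearest 0.5."""
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: abs(probabilities[i] - 0.5))
    return ranked[:budget]

# Hypothetical model outputs for five unlabeled samples.
predicted = [0.95, 0.52, 0.10, 0.45, 0.70]
print(select_for_labeling(predicted, budget=2))
```

Samples the model already classifies confidently (such as 0.95 or 0.10 here) are skipped, which is how a small labeled fraction can still train an accurate model.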

S21-45

Title: RU-Therapy

Members: Khizer Humayun, Akash Govindaraju, Sianna Arruda, Rebekah Bediako, and

Hedaya Walter

Advisor: Dr. Hana Godrich

Keywords

therapy, virtual counseling, mental health, peer counseling, web application, Therapy-ball

Abstract

The project goal is to create a web-based/mobile application which will allow Rutgers students

to virtually attend counseling when needed. Due to COVID-19, especially, in-person counseling

has become difficult to attend. Also, in normal conditions, at times it is difficult for students to

meet in-person with their counselors because the appointment times never line up with their

class schedule. Therefore, having a software application will allow students to attend

counseling anytime and from anywhere. The need of counseling is essential for one going

through anxiety and stress because it could lead to affecting their decision making. A student

dealing with one of these disorders can experience negative effects on their attention,

interpretation, concentration, memory, social interaction, and physical health. It can be difficult

for teachers to identify anxiety and depression because these disorders often show up

differently in different people, so knowing the combinations of behaviors to look

for is key. Another important feature of RU-Therapy will be peer counseling. Because of

the tight schedules of counselors, it may be difficult for some students to attend even

virtual counseling sessions. Peer counseling will address this issue.

The goal in designing RU-Therapy is to encourage students to attend counseling and therapy

to promote optimal health around the campus.

S21-46

Title: Hydro-Homie

Members: Andrew Catalano, Erick Camerino, Davin Kim, Tom Markos, Isaac Tan

Advisor: Dr. Wade Trappe

Keywords

robotics, Arduino, automated, potted plants, sensors, RFID

Abstract

The Hydro-Homie is an automated robot that navigates a confined space. It will follow a

predetermined path and be able to go around or stop if an object or obstacle is blocking its

path. While it is navigating the area, the Hydro-Homie will water marked potted plants that are

in its path. It will use a water pump to take the water out of the tank, and the nozzle will be

able to move up or down so that it can water pots at different heights. Furthermore, we explain

the reasons to create such a tool and why certain pieces are necessary. We will discuss the

current plan on how the pieces of the Hydro-Homie will be tested and put together, and we

will discuss how the project will be split up.
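The navigation and watering behavior described above can be summarized as a per-step decision rule. The following is a minimal sketch, not the team's firmware: the function name `next_action`, its boolean inputs, and the action labels are hypothetical, and it assumes a marked plant is reported by a tag reader (RFID appears in the keywords).

```python
def next_action(obstacle_ahead, can_detour, plant_tag_detected):
    """Choose the robot's next action from its current sensor readings."""
    if obstacle_ahead:
        # Go around the obstacle when possible, otherwise stop.
        return "detour" if can_detour else "stop"
    if plant_tag_detected:
        # A marked pot is in range: position the nozzle and run the pump.
        return "water"
    # Nothing in the way and no plant nearby: keep following the path.
    return "follow_path"


print(next_action(obstacle_ahead=False, can_detour=False, plant_tag_detected=True))
```

Obstacle handling is checked first so that the robot never starts watering while its path is blocked.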

S21-47

Title: Real-Time Drone Control via Single Outside Observer

Members: David Sukharenko, Bryan Zhu, Anthony Weiss, Kris Caceres, Roman Nikolin

Advisor: Dr. Predrag Spasojevic

Keywords

computer vision, object detection, distance/depth estimation, unmanned aerial vehicle (UAV),

drone

Abstract

In the past decade, drone usage has grown beyond the military sector and seen wide-ranging

commercial and civilian use. As drones and drone research grow in popularity, there is a

newfound need for a portable drone control system external to the drone itself. Current drone

positioning systems work well in controlled indoor environments, but lack the flexibility,

reliability, and portability that outdoor environments require. In addition, the variable nature

of piloting drones requires a skill that new users may not immediately have or have the time to

acquire. We propose a singular outside observer computer vision solution. An RGB-D camera

locates a drone within its viewport using object detection algorithms, and the drone’s

calculated position and orientation are used to control its flight patterns. Our solution allows

us to use a compact, portable computer vision system to fly a drone in an intuitive manner

without modifying or making additions to the drone itself. The initial prototype will use an Intel

RealSense D435i depth-sensing RGB camera attached to an NVIDIA Jetson Xavier NX for object

detection and depth measurement. UWB transmitters may be used to increase accuracy and

range of the latter. Object detection will be run with a real-time detector such as YOLO or

RetinaNet on NVIDIA DeepStream. Once the drone is detected, controls will be sent to the drone

via radio. Our system will be tested in compliance with all local and FAA regulations regarding

recreational drone use.
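The position calculation described above can be sketched with a pinhole camera model: given the pixel at the center of the detected drone and the depth reading at that pixel, the 3D position in the camera frame follows from the camera intrinsics. The intrinsic values and the function name `deproject_pixel` below are illustrative assumptions, not the team's calibration or code, and lens distortion is ignored.

```python
def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Convert pixel (u, v) with depth in meters to (x, y, z) in the camera frame.

    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    """
    x = (u - cx) * depth_m / fx  # meters to the right of the optical axis
    y = (v - cy) * depth_m / fy  # meters below the optical axis
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 color stream.
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# Center of a detected bounding box and the depth measured there.
position = deproject_pixel(u=470, v=240, depth_m=4.0, fx=FX, fy=FY, cx=CX, cy=CY)
print(position)
```

Tracking this position across frames gives the velocity and heading estimates that a controller would need before sending commands over radio.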

S21-48

Title: Active Noise Cancelling w/ Machine Learning

Members: Ryan Davis, Priya Parikh, Parth Patel

Advisor: Dr. Jorge Ortiz

Keywords

Machine learning (ML); active noise cancelling (ANC); adaptive filtering; neural networks;

smartphones

Abstract

The project we are pursuing applies machine learning techniques to improve digital adaptive

filtering algorithms for active noise cancelling (ANC) on smartphones. Active noise cancelling

is a process that uses additional sound signals to cancel out unwanted noise. Modern ANC

systems perform adaptive filtering with recursive least squares (RLS) or least mean squares (LMS)

filters. The use of machine learning methods in ANC could improve its performance, as

modern neural networks are able to adapt to signals with very complex internal structure.
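As a baseline for comparison, the LMS adaptive filtering mentioned above can be sketched in a few lines. This is a textbook LMS update, not the project's implementation; the tap count, step size, and synthetic signals are assumptions chosen for illustration.

```python
import random


def lms_filter(reference, desired, num_taps=4, mu=0.05):
    """Adapt FIR weights so the filtered reference tracks `desired`.

    Returns (errors, weights), where errors is the residual after cancellation.
    """
    weights = [0.0] * num_taps
    history = [0.0] * num_taps  # recent reference samples, newest first
    errors = []
    for x, d in zip(reference, desired):
        history = [x] + history[:-1]
        y = sum(w * h for w, h in zip(weights, history))  # noise estimate
        e = d - y                                         # residual noise
        # LMS update: move each weight along the error gradient.
        weights = [w + mu * e * h for w, h in zip(weights, history)]
        errors.append(e)
    return errors, weights


# Synthetic test: the noise at the ear is a scaled, one-sample-delayed
# copy of the noise picked up by the reference microphone.
rng = random.Random(0)
n = 2000
reference = [rng.uniform(-1.0, 1.0) for _ in range(n)]
desired = [0.0] + [0.8 * r for r in reference[:-1]]

errors, weights = lms_filter(reference, desired)
start_power = sum(e * e for e in errors[:100]) / 100
end_power = sum(e * e for e in errors[-100:]) / 100
print(start_power, end_power)  # residual power before and after adaptation
```

In this noise-free linear setting the filter learns the delay-and-gain path and the residual power falls sharply; noise paths that are not linear are where a learned model such as an LSTM would be expected to help.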

The goal is to leverage the computing power of smartphone systems for machine learning to

provide real-time active noise cancellation. The different types of neural networks that will be

explored are long short-term memory networks (LSTM) and convolutional neural networks

(CNN) stacked with LSTM layers.

The features we expect to add that distinguish this work from current noise cancellation applications

are the use of neural networks on resource-constrained devices and ANC for

high-frequency and complex signals. While the current scope of this project is limited due to

environmental constraints, the idea could improve ANC technology, better handle

high-frequency signals, and potentially bring ANC capabilities to any headphones or audio

output. A possible future prospect is a mobile application that provides real-time filtering on

any capable mobile device with or without headphones.

S21-49

Title: Mental Health Chatbot: KANA

Members: Jennifer Huang, Samuel Zahner, Nishad Nalgundwar, Vincent Chan

Advisor: Dr. Kristin Dana

Keywords

Artificial Intelligence, Machine Learning, Computer Vision, Mental Health

Abstract

Mental health involves the well-being of an individual’s mind, as well as an individual’s ability

to endure stressful events. While stressful events, irritation, or frustration may build

tolerance, they can also quickly erode an individual’s ability to function at their highest

potential. People often overlook how mundane daily tasks act as stressors in their day-to-day

lives, leading to overwhelming negative feelings that accumulate and have

detrimental effects on an individual’s mental health. With our Artificial Intelligence Dialogue

system, individuals will have a means to prevent the accumulation of obstructive feelings

and thoughts. Our system is designed to help individuals locate resources for their unique

situation through an emotionally aware virtual dialogue system. With this system in place,

anyone will be able to have access to comfort, companionship, as well as unbiased information

from accredited sources. While the system is not meant to be a replacement for therapy, it

provides people with a means to take a further step towards the positive growth of mental

health.

S21-50

Title: COVID-19 Face Mask Check System

Members: Nianyi Wang, Kebin Li

Advisors: Dr. Hubertus Franke (IBM) and Dr. Yuqian Zhang

Keywords

Deep Learning, Machine Learning, Image processing

Abstract

Due to COVID-19, personal protection in public areas has become much more important.

Everyone is required to wear a face mask to stop the transmission of the virus. However, it is

impractical for a human being to check whether a face mask is up to standard, given the thousands of

types of face masks. We will develop a system to detect the type of face mask. Consumers just

have to stand in front of the camera; the system takes a picture of their face and passes it to the

trained model to get the corresponding type of mask. If that mask matches a qualified face

mask type, our system will let the consumer in.

S21-51

Title: Potential Vulnerability in K8S

Members: Vulnerability Assessment, Exploitation, Information Gathering,

Reconnaissance Discovery and Scanning

Advisor: Dr. Wade Trappe

Keywords

Vulnerability Assessment, Exploitation, Information Gathering, Reconnaissance Discovery and

Scanning

Abstract

In this project, we aim to discover as many security vulnerabilities as possible in a popular,

commonly used Kubernetes (K8S) architecture. The work is split into at least three stages.

Containers are a major shift in software development because they bring the production environment to

our local environment. There is no more worry about Linux or Windows compatibility. With containers,

all issues are easily reproducible on all workstations. Moreover, each environment is portable

with no extra effort. Developers have the power to package applications, which is good

because they know how the application should work. On the DevOps side, containers are

attractive because each deployment system handles only one kind of artifact: containers.

Moreover, all build processes can be described in a Dockerfile on the dev side, which means

that you will use only one way to build things, the same in local dev and continuous integration.

We will research the most commonly used K8S architecture for small businesses

and then reimplement it on a VMware ESXi server with one master node and at least three worker

nodes.

S21-52

Title: I.O.Clean

Members: Jonathan Banks, Edward Gaskin, Alex Martorano

Advisor(s): Dr. Kevin Lu (Stevens) and Dr. Hana Godrich

Keywords

Bacteria Detection, Automation, Cleaning, Disinfecting, Monitoring

Abstract

Hygiene is essential for health and safety, and yet can be difficult to manage. A survey

by the American Cleaning Institute found that 34% of respondents were concerned

about whether they were cleaning enough, and 31% were not sure they were

cleaning correctly. Users of real estate, public or private, owner or renter, would all

benefit from having an easy way to manage the cleanliness of their property.

I.O.Clean utilizes IoT smart technology to give users a dashboard on their phone to

help keep their spaces hygienic. The system is modular, which enables it to be easily

scaled, customized, and future-proofed. It is easy to forget about an invisible threat.

I.O.Clean smart devices work to assist in the management of cleanliness as well as

automating certain cleaning processes within a work or living space. Utilizing I.O.Clean

deters virus and bacteria accumulation, and helps maintain the overall safety of

families, tenants, and employees occupying a space.

Business Model Canvas

S21-53

Title: Automatic monitor distance adjustment system

Members: Yuxiang Wang, Gaohaonan He

Advisor(s): Dr. Maria Striki

Keywords

Sensor, JavaFX, Sliding Table, Automation