Contents

Preface vi

Appetizer: using probability to cover a geometric set 1

1 Preliminaries on random variables 5

1.1 Basic quantities associated with random variables 5

1.2 Some classical inequalities 6

1.3 Limit theorems 8

1.4 Notes 11

2 Concentration of sums of independent random variables 12

2.1 Why concentration inequalities? 12

2.2 Hoeffding’s inequality 15

2.3 Chernoff’s inequality 18

2.4 Application: degrees of random graphs 21

2.5 Sub-gaussian distributions 23

2.6 General Hoeffding’s and Khintchine’s inequalities 28

2.7 Sub-exponential distributions 31

2.8 Bernstein’s inequality 36

2.9 Notes 39

3 Random vectors in high dimensions 41

3.1 Concentration of the norm 42

3.2 Covariance matrices and principal component analysis 44

3.3 Examples of high-dimensional distributions 49

3.4 Sub-gaussian distributions in higher dimensions 55

3.5 Application: Grothendieck’s inequality and semidefinite programming 60

3.6 Application: Maximum cut for graphs 65

3.7 Kernel trick, and tightening of Grothendieck’s inequality 69

3.8 Notes 73

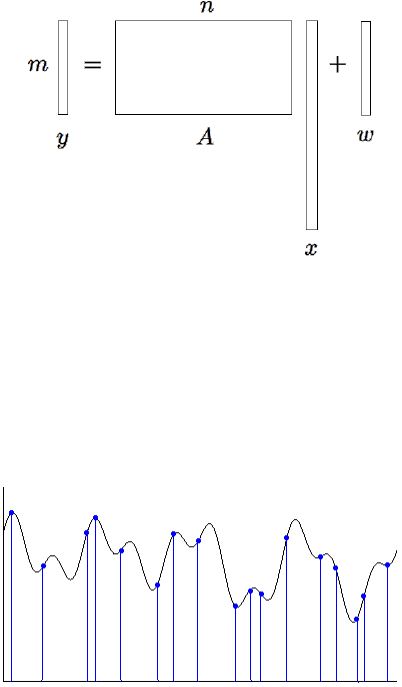

4 Random matrices 75

4.1 Preliminaries on matrices 75

4.2 Nets, covering numbers and packing numbers 80

4.3 Application: error correcting codes 85

4.4 Upper bounds on random sub-gaussian matrices 88

4.5 Application: community detection in networks 92

4.6 Two-sided bounds on sub-gaussian matrices 96


4.7 Application: covariance estimation and clustering 99

4.8 Notes 102

5 Concentration without independence 104

5.1 Concentration of Lipschitz functions on the sphere 104

5.2 Concentration on other metric measure spaces 111

5.3 Application: Johnson-Lindenstrauss Lemma 117

5.4 Matrix Bernstein’s inequality 120

5.5 Application: community detection in sparse networks 128

5.6 Application: covariance estimation for general distributions 129

5.7 Notes 132

6 Quadratic forms, symmetrization and contraction 135

6.1 Decoupling 135

6.2 Hanson-Wright Inequality 139

6.3 Concentration of anisotropic random vectors 143

6.4 Symmetrization 145

6.5 Random matrices with non-i.i.d. entries 147

6.6 Application: matrix completion 149

6.7 Contraction Principle 152

6.8 Notes 155

7 Random processes 157

7.1 Basic concepts and examples 157

7.2 Slepian’s inequality 161

7.3 Sharp bounds on Gaussian matrices 168

7.4 Sudakov’s minoration inequality 171

7.5 Gaussian width 174

7.6 Stable dimension, stable rank, and Gaussian complexity 179

7.7 Random projections of sets 182

7.8 Notes 186

8 Chaining 189

8.1 Dudley’s inequality 189

8.2 Application: empirical processes 197

8.3 VC dimension 202

8.4 Application: statistical learning theory 215

8.5 Generic chaining 221

8.6 Talagrand’s majorizing measure and comparison theorems 225

8.7 Chevet’s inequality 227

8.8 Notes 229

9 Deviations of random matrices and geometric consequences 232

9.1 Matrix deviation inequality 232

9.2 Random matrices, random projections and covariance estimation 238

9.3 Johnson-Lindenstrauss Lemma for infinite sets 241

9.4 Random sections: $M^*$ bound and Escape Theorem 244

9.5 Notes 248


10 Sparse Recovery 250

10.1 High-dimensional signal recovery problems 250

10.2 Signal recovery based on $M^*$ bound 252

10.3 Recovery of sparse signals 254

10.4 Low-rank matrix recovery 258

10.5 Exact recovery and the restricted isometry property 261

10.6 Lasso algorithm for sparse regression 267

10.7 Notes 271

11 Dvoretzky-Milman’s Theorem 273

11.1 Deviations of random matrices with respect to general norms 273

11.2 Johnson-Lindenstrauss embeddings and sharper Chevet inequality 277

11.3 Dvoretzky-Milman’s Theorem 278

11.4 Notes 283

Bibliography 284

Index 294

Preface

Who is this book for?

This is a textbook in probability in high dimensions with a view toward applications in data sciences. It is intended for doctoral and advanced master’s students and beginning researchers in mathematics, statistics, electrical engineering, computational biology and related areas, who are looking to expand their knowledge of theoretical methods used in modern research in data sciences.

Why this book?

Data sciences are moving fast, and probabilistic methods often provide a foundation and inspiration for such advances. A typical graduate probability course is no longer sufficient to acquire the level of mathematical sophistication that is expected from a beginning researcher in data sciences today. This book aims to partially fill this gap. It presents some of the key probabilistic methods and results that may form an essential toolbox for a mathematical data scientist. This book can be used as a textbook for a basic second course in probability with a view toward data science applications. It is also suitable for self-study.

What is this book about?

High-dimensional probability is an area of probability theory that studies random objects in $\mathbb{R}^n$ where the dimension $n$ can be very large. This book places particular emphasis on random vectors, random matrices, and random projections. It teaches basic theoretical skills for the analysis of these objects, which include concentration inequalities, covering and packing arguments, decoupling and symmetrization tricks, chaining and comparison techniques for stochastic processes, combinatorial reasoning based on the VC dimension, and a lot more.

High-dimensional probability provides vital theoretical tools for applications in data science. This book integrates theory with applications for covariance estimation, semidefinite programming, networks, elements of statistical learning, error correcting codes, clustering, matrix completion, dimension reduction, sparse signal recovery, sparse regression, and more.

Prerequisites

The essential prerequisites for reading this book are a rigorous course in probability theory (at the master’s or Ph.D. level), an excellent command of undergraduate linear algebra, and general familiarity with basic notions about metric, normed and Hilbert spaces and linear operators. Knowledge of measure theory is not essential but would be helpful.

A word on exercises

Exercises are integrated into the text. The reader can do them immediately to check his or her understanding of the material just presented, and to prepare better for later developments. The difficulty of the exercises is indicated by the number of coffee cups; it can range from easiest (K) to hardest (KKKK).

Related reading

This book covers only a fraction of the theoretical apparatus of high-dimensional probability, and it illustrates it with only a sample of data science applications. Each chapter in this book concludes with a Notes section, which has pointers to other texts on the matter. A few particularly useful sources should be noted here. The now classical book [8] showcases the probabilistic method in applications to discrete mathematics and computer science. The forthcoming book [20] presents a panorama of mathematical data science, and it particularly focuses on applications in computer science. Both these books are accessible to graduate and advanced undergraduate students. The lecture notes [212] are pitched for graduate students and present more theoretical material in high-dimensional probability.

Acknowledgements

The feedback from my many colleagues was instrumental in perfecting this book. My thanks go to Florent Benaych-Georges, Jennifer Bryson, Lukas Grätz, Remi Gribonval, Ping Hsu, Mike Izbicki, Yi Li, George Linderman, Cong Ma, Galyna Livshyts, Jelani Nelson, Ekkehard Schnoor, Martin Spindler, Dominik Stöger, Tim Sullivan, Terence Tao, Joel Tropp, Katarzyna Wyczesany, Yifei Shen and Haoshu Xu for many valuable suggestions and corrections, especially to Sjoerd Dirksen, Larry Goldstein, Wu Han, Han Wu, and Mahdi Soltanolkotabi for detailed proofreading of the book, and to Can Le, Jennifer Bryson and my son Ivan Vershynin for their help with many pictures in this book.

After this book had been published, many colleagues offered further suggestions and noticed many typos, gaps and inaccuracies. I am grateful to Karthik Abinav, Diego Armentano, Aaron Berk, Soham De, Hu Fu, Adam Jozefiak, Nick Harvey, Harrie Hendriks, Joris Kühl, Jana Krägel, Yi Li, Chris Liaw, Hengrui Luo, Pete Morcos, Adrien Peltzer, Sikander Randhawa, Karthik Sankararaman, Nils Siebken, Morten Thonack, and especially to Franz Haniel, Ichiro Hashimoto, Aryeh Kontorovich, Jake Knigge, Wai-Kit Lam, Yi Li, Mark Meckes, Abbas Mehrabian, Holger Rauhut, Michael Scheutzow, and Hao Xing, who offered substantial feedback that led to significant improvements.

The issues found after publication have been corrected in the electronic version. Please feel free to send me any further feedback.

Irvine, California

May 20, 2024

Appetizer: using probability to cover a geometric set

We begin our study of high-dimensional probability with an elegant argument that showcases the usefulness of probabilistic reasoning in geometry.

Recall that a convex combination of points $z_1, \ldots, z_m \in \mathbb{R}^n$ is a linear combination with coefficients that are non-negative and sum to 1, i.e. it is a sum of the form

$$\sum_{i=1}^m \lambda_i z_i \quad \text{where } \lambda_i \ge 0 \text{ and } \sum_{i=1}^m \lambda_i = 1. \tag{0.1}$$

The convex hull of a set T ⊂ R

n

is the set of all convex combinations of all finite

collections of points in T :

conv(T )

:

= {convex combinations of z

1

, . . . , z

m

∈ T for m ∈ N};

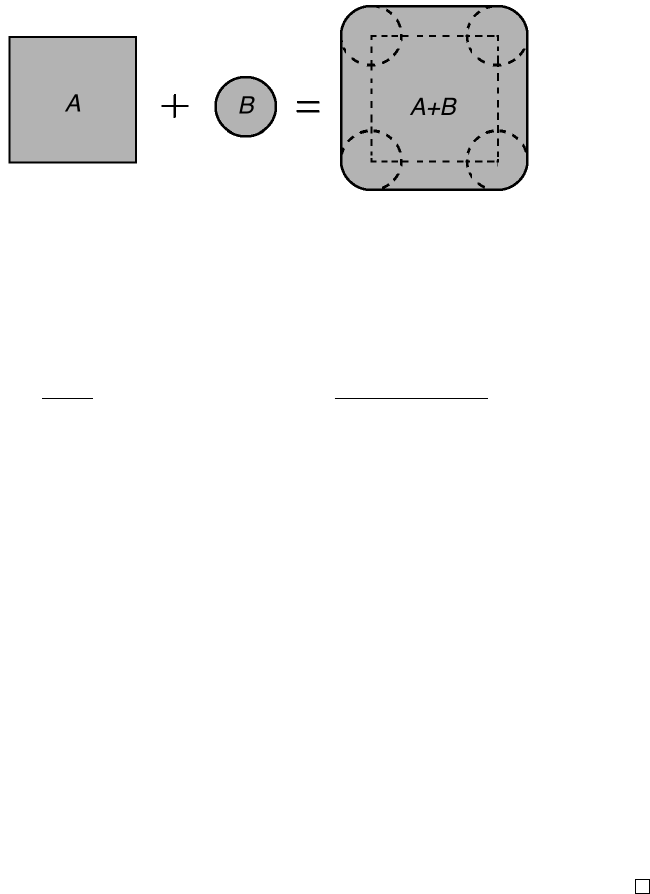

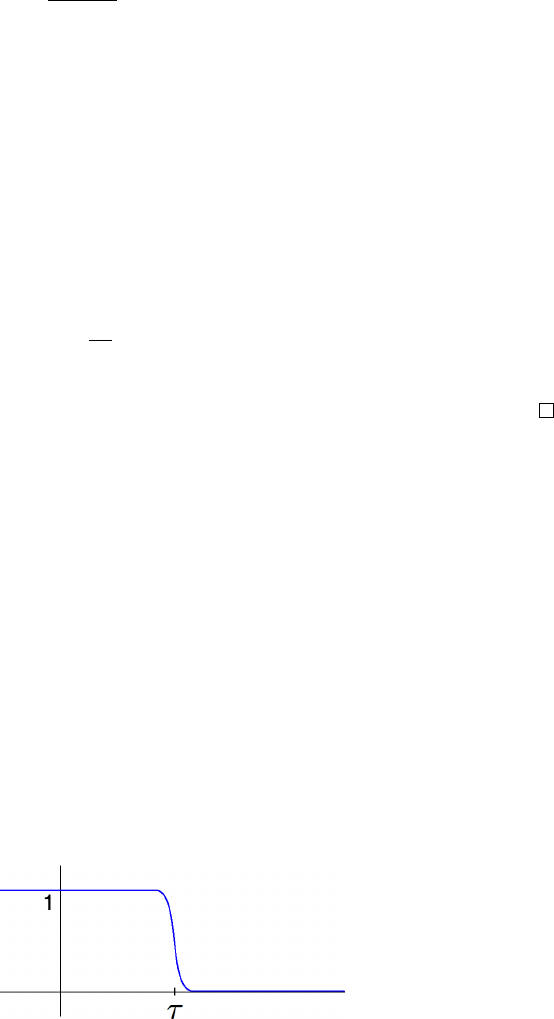

see Figure 0.1 for illustration.

Figure 0.1 The convex hull of a set of points representing major U.S. cities

The number $m$ of elements defining a convex combination in $\mathbb{R}^n$ is not restricted a priori. However, the classical Caratheodory’s theorem states that one can always take $m \le n + 1$.

Theorem 0.0.1 (Caratheodory’s theorem). Every point in the convex hull of a set $T \subset \mathbb{R}^n$ can be expressed as a convex combination of at most $n + 1$ points from $T$.

The bound $n + 1$ cannot be improved, as it is clearly attained for a simplex $T$ (a set of $n + 1$ points in general position). Suppose, however, that we only want to approximate a point $x \in \operatorname{conv}(T)$ rather than exactly represent it as a convex combination. Can we do it with fewer than $n + 1$ points? We now show that this is possible, and actually the number of required points does not need to depend on the dimension $n$ at all!

Theorem 0.0.2 (Approximate Caratheodory’s theorem). Consider a set $T \subset \mathbb{R}^n$ whose diameter¹ is bounded by 1. Then, for every point $x \in \operatorname{conv}(T)$ and every positive integer $k$, one can find points $x_1, \ldots, x_k \in T$ such that

$$\Big\| x - \frac{1}{k}\sum_{j=1}^k x_j \Big\|_2 \le \frac{1}{\sqrt{k}}.$$

There are two reasons why this result is surprising. First, the number of points $k$ in convex combinations does not depend on the dimension $n$. Second, the coefficients of convex combinations can be made all equal. (Note, however, that repetitions among the points $x_i$ are allowed.)

Proof Our argument is known as the empirical method of B. Maurey.

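Translating $T$ if necessary (for instance, so that some point of $T$ lies at the origin; translations do not affect the conclusion of the theorem), we may assume that not only the diameter but also the radius of $T$ is bounded by 1, i.e.

$$\|t\|_2 \le 1 \quad \text{for all } t \in T. \tag{0.2}$$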

Fix a point $x \in \operatorname{conv}(T)$ and express it as a convex combination of some vectors $z_1, \ldots, z_m \in T$ as in (0.1). Now, interpret the definition of convex combination (0.1) probabilistically, with $\lambda_i$ taking the roles of probabilities. Specifically, we can define a random vector $Z$ that takes values $z_i$ with probabilities $\lambda_i$:

$$\mathbb{P}\{Z = z_i\} = \lambda_i, \quad i = 1, \ldots, m.$$

(This is possible because the weights $\lambda_i$ are non-negative and sum to one.) Then

$$\mathbb{E} Z = \sum_{i=1}^m \lambda_i z_i = x.$$

Consider independent copies $Z_1, Z_2, \ldots$ of $Z$. By the strong law of large numbers,

$$\frac{1}{k}\sum_{j=1}^k Z_j \to x \quad \text{almost surely as } k \to \infty.$$

To get a quantitative form of this result, let us compute the variance of $\frac{1}{k}\sum_{j=1}^k Z_j$. (Incidentally, this computation is at the heart of the proof of the weak law of large numbers.) We obtain

$$\mathbb{E}\Big\| x - \frac{1}{k}\sum_{j=1}^k Z_j \Big\|_2^2 = \frac{1}{k^2}\,\mathbb{E}\Big\| \sum_{j=1}^k (Z_j - x) \Big\|_2^2 = \frac{1}{k^2}\sum_{j=1}^k \mathbb{E}\|Z_j - x\|_2^2$$

since $\mathbb{E}(Z_j - x) = 0$ for each $j$. The last identity is just a higher-dimensional version of the basic fact that the variance of a sum of independent random variables equals the sum of variances; see Exercise 0.0.3 below.

It remains to bound the variances of the terms. We have

$$\begin{aligned}
\mathbb{E}\|Z_j - x\|_2^2 &= \mathbb{E}\|Z - \mathbb{E} Z\|_2^2 \\
&= \mathbb{E}\|Z\|_2^2 - \|\mathbb{E} Z\|_2^2 \qquad \text{(another variance identity; see Exercise 0.0.3)} \\
&\le \mathbb{E}\|Z\|_2^2 \le 1 \qquad \text{(since } Z \in T \text{ and using (0.2))}.
\end{aligned}$$

We showed that

$$\mathbb{E}\Big\| x - \frac{1}{k}\sum_{j=1}^k Z_j \Big\|_2^2 \le \frac{1}{k}.$$

Therefore, since a random variable takes a value no larger than its expectation with positive probability, there exists a realization of the random variables $Z_1, \ldots, Z_k$ such that

$$\Big\| x - \frac{1}{k}\sum_{j=1}^k Z_j \Big\|_2^2 \le \frac{1}{k}.$$

Taking square roots gives the bound $1/\sqrt{k}$. Since by construction each $Z_j$ takes values in $T$, the proof is complete.

¹ The diameter of $T$ is defined as $\operatorname{diam}(T) = \sup\{\|s - t\|_2 : s, t \in T\}$. We assumed that $\operatorname{diam}(T) = 1$ for simplicity. For a general set $T$, the bound in the theorem changes to $\operatorname{diam}(T)/\sqrt{k}$. Check this!
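The proof is an algorithm in disguise. As an illustration (a sketch, not the author’s code), the Python below samples $k$ i.i.d. copies of $Z$ with $\mathbb{P}\{Z = z_i\} = \lambda_i$, repeats this a number of times, and keeps the equal-weight average closest to $x$. It assumes the normalization (0.2), i.e. $\|z_i\|_2 \le 1$; the helper names and the `trials` parameter are hypothetical, and Python 3.8+ is assumed for `math.dist`. It also evaluates the exact expectation $\mathbb{E}\|x - \frac{1}{k}\sum_j Z_j\|_2^2 = (\mathbb{E}\|Z\|_2^2 - \|x\|_2^2)/k$, which the proof bounds by $1/k$.

```python
import math
import random


def convex_combination(points, weights):
    """x = sum_i lambda_i z_i for points z_i (lists of floats) and weights lambda_i."""
    dim = len(points[0])
    return [sum(w * p[d] for p, w in zip(points, weights)) for d in range(dim)]


def expected_sq_error(points, weights, k):
    """Exact E||x - (1/k) sum_j Z_j||_2^2 = (E||Z||_2^2 - ||x||_2^2) / k."""
    x = convex_combination(points, weights)
    second_moment = sum(w * sum(c * c for c in p) for p, w in zip(points, weights))
    return (second_moment - sum(c * c for c in x)) / k


def maurey_sample(points, weights, k, trials=200, seed=0):
    """Draw Z_1..Z_k i.i.d. with P{Z = z_i} = lambda_i, `trials` times, and
    return the equal-weight average closest to x together with its distance."""
    rng = random.Random(seed)
    x = convex_combination(points, weights)
    best_err, best_avg = math.inf, None
    for _ in range(trials):
        sample = rng.choices(points, weights=weights, k=k)
        avg = [sum(coords) / k for coords in zip(*sample)]
        err = math.dist(x, avg)  # ||x - avg||_2
        if err < best_err:
            best_err, best_avg = err, avg
    return best_avg, best_err


def random_unit_vector(rng, dim):
    """Uniform random point on the unit sphere, so that ||t||_2 = 1 as in (0.2)."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


if __name__ == "__main__":
    rng = random.Random(1)
    dim, m, k = 200, 400, 25  # k is far smaller than n + 1 = 201
    points = [random_unit_vector(rng, dim) for _ in range(m)]
    raw = [rng.random() for _ in range(m)]
    total = sum(raw)
    weights = [r / total for r in raw]
    _, err = maurey_sample(points, weights, k)
    print(f"best distance {err:.3f}, bound 1/sqrt(k) = {1 / math.sqrt(k):.3f}")
    print(f"exact expected squared error {expected_sq_error(points, weights, k):.4f} <= 1/k = {1 / k}")
```

Keeping the best of several draws mirrors the last step of the proof: the argument only guarantees that some realization does at least as well as the average, not that every single draw does.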

Exercise 0.0.3. KK Check the following variance identities that we used in the proof of Theorem 0.0.2.

(a) Let $Z_1, \ldots, Z_k$ be independent mean zero random vectors in $\mathbb{R}^n$. Show that

$$\mathbb{E}\Big\| \sum_{j=1}^k Z_j \Big\|_2^2 = \sum_{j=1}^k \mathbb{E}\|Z_j\|_2^2.$$

(b) Let $Z$ be a random vector in $\mathbb{R}^n$. Show that

$$\mathbb{E}\|Z - \mathbb{E} Z\|_2^2 = \mathbb{E}\|Z\|_2^2 - \|\mathbb{E} Z\|_2^2.$$
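Both identities can be sanity-checked in exact rational arithmetic. The sketch below is our own illustration: it represents a finitely supported random vector by a hypothetical list of `(probability, vector)` pairs, enumerates all joint outcomes of independent vectors with `itertools.product`, and compares both sides using `fractions.Fraction`, so equality is tested exactly rather than up to rounding. The last assertion in the accompanying check shows that (a) can fail once the mean zero assumption is dropped.

```python
from fractions import Fraction
from itertools import product


def sq_norm(v):
    """Squared Euclidean norm ||v||_2^2."""
    return sum(c * c for c in v)


def mean(dist):
    """E Z for Z given as a list of (probability, vector) pairs."""
    dim = len(dist[0][1])
    return [sum(p * v[d] for p, v in dist) for d in range(dim)]


def expect(dist, f):
    """E f(Z)."""
    return sum(p * f(v) for p, v in dist)


def centered(dist):
    """Distribution of Z - E Z."""
    m = mean(dist)
    return [(p, [c - mc for c, mc in zip(v, m)]) for p, v in dist]


def sq_norm_of_sum(dists):
    """E||Z_1 + ... + Z_k||_2^2 for independent Z_j, by enumerating joint outcomes."""
    total = Fraction(0)
    for outcome in product(*dists):
        prob = Fraction(1)
        for p, _ in outcome:
            prob *= p
        s = [sum(coords) for coords in zip(*(v for _, v in outcome))]
        total += prob * sq_norm(s)
    return total


F = Fraction
Z1 = [(F(1, 2), [F(1), F(2)]), (F(1, 2), [F(3), F(-1)])]
Z2 = [(F(1, 3), [F(0), F(5)]), (F(2, 3), [F(2), F(1)])]

# (b): E||Z - E Z||^2 versus E||Z||^2 - ||E Z||^2
print(expect(centered(Z1), sq_norm), expect(Z1, sq_norm) - sq_norm(mean(Z1)))  # 13/4 13/4
# (a) for the mean zero vectors Z_j - E Z_j
W = [centered(Z1), centered(Z2)]
print(sq_norm_of_sum(W), sum(expect(d, sq_norm) for d in W))  # 277/36 277/36
```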

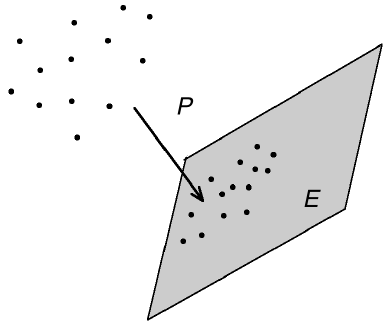

Let us give one application of Theorem 0.0.2 in computational geometry. Sup-

pose we are given a subset P ⊂ R

n

and ask to cover it by balls of given radius

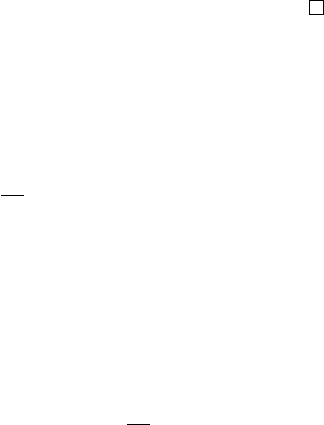

ε, see Figure 0.2. What is the smallest number of balls needed, and how shall we

place them?

Figure 0.2 The covering problem asks how many balls of radius ε are

needed to cover a given set in R

n

, and where to place these balls.

Corollary 0.0.4 (Covering polytopes by balls). Let $P$ be a polytope in $\mathbb{R}^n$ with $N$ vertices and whose diameter is bounded by 1. Then $P$ can be covered by at most $N^{\lceil 1/\varepsilon^2 \rceil}$ Euclidean balls of radii $\varepsilon > 0$.

Proof Let us define the centers of the balls as follows. Let $k := \lceil 1/\varepsilon^2 \rceil$ and consider the set

$$\mathcal{N} := \Big\{ \frac{1}{k}\sum_{j=1}^k x_j : x_j \text{ are vertices of } P \Big\}.$$

We claim that the family of $\varepsilon$-balls centered at $\mathcal{N}$ satisfies the conclusion of the corollary. To check this, note that the polytope $P$ is the convex hull of the set of its vertices, which we denote by $T$. Thus we can apply Theorem 0.0.2 to any point $x \in P = \operatorname{conv}(T)$ and deduce that $x$ is within distance $1/\sqrt{k} \le \varepsilon$ from some point in $\mathcal{N}$. This shows that the $\varepsilon$-balls centered at $\mathcal{N}$ indeed cover $P$.

To bound the cardinality of $\mathcal{N}$, note that there are $N^k$ ways to choose $k$ out of $N$ vertices with repetition, keeping track of the order. Thus $|\mathcal{N}| \le N^k = N^{\lceil 1/\varepsilon^2 \rceil}$. The proof is complete.
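Since $\mathcal{N}$ is explicit, the covering can be checked numerically. The following Python sketch (standard library, Python 3.8+; the helper names are hypothetical) builds the centers as averages over multisets of $k = \lceil 1/\varepsilon^2 \rceil$ vertices, which gives the same set of averages as ordered $k$-tuples but lists only $\binom{N+k-1}{k} \le N^k$ of them, and then tests random points of $P$ against the $\varepsilon$-balls. It assumes the vertices are given as coordinate lists of a polytope of diameter at most 1; the example uses a regular simplex with unit edges.

```python
import itertools
import math
import random


def covering_centers(vertices, eps):
    """Centers (x_1 + ... + x_k) / k over all multisets of k = ceil(1/eps^2) vertices."""
    k = math.ceil(1 / eps**2)
    centers = [
        [sum(coords) / k for coords in zip(*combo)]
        for combo in itertools.combinations_with_replacement(vertices, k)
    ]
    return k, centers


def random_point_in_hull(vertices, rng):
    """A random convex combination of the vertices, i.e. a random point of P."""
    raw = [rng.random() for _ in vertices]
    total = sum(raw)
    dim = len(vertices[0])
    return [sum(r / total * v[d] for r, v in zip(raw, vertices)) for d in range(dim)]


def is_covered(point, centers, eps):
    """True if the point lies in some closed eps-ball around a center."""
    return any(math.dist(point, c) <= eps for c in centers)


if __name__ == "__main__":
    # Vertices e_i / sqrt(2) of a simplex in R^4: all pairwise distances equal 1.
    verts = [[1 / math.sqrt(2) if i == j else 0.0 for j in range(4)] for i in range(4)]
    eps = 0.5
    k, centers = covering_centers(verts, eps)
    rng = random.Random(0)
    print(k, len(centers), len(verts) ** k)  # 4 35 256
    print(all(is_covered(random_point_in_hull(verts, rng), centers, eps) for _ in range(1000)))  # True
```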

In this book we will learn several other approaches to the covering problem when we relate it to packing (Section 4.2), entropy and coding (Section 4.3) and random processes (Chapters 7–8).

To finish this section, let us show how to slightly improve Corollary 0.0.4.

Exercise 0.0.5 (The sum of binomial coefficients). KK Prove the inequalities

$$\Big(\frac{n}{m}\Big)^m \le \binom{n}{m} \le \sum_{k=0}^m \binom{n}{k} \le \Big(\frac{en}{m}\Big)^m$$

for all integers $m \in [1, n]$.

Hint: To prove the upper bound, multiply both sides by the quantity $(m/n)^m$, replace this quantity by $(m/n)^k$ in the left side, and use the Binomial Theorem.
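Before proving the inequalities of Exercise 0.0.5, it can help to check them on small cases. The Python sketch below is our own illustration (the function name is hypothetical): it verifies the lower bounds exactly with `fractions.Fraction` and the upper bound $(en/m)^m$ in floating point, where the inequality has a comfortable margin, for all $1 \le m \le n \le 60$.

```python
import math
from fractions import Fraction


def check_binomial_bounds(n, m):
    """Check (n/m)^m <= C(n, m) <= sum_{k=0}^{m} C(n, k) <= (e n / m)^m for 1 <= m <= n."""
    lower = Fraction(n, m) ** m  # exact rational
    central = math.comb(n, m)
    partial_sum = sum(math.comb(n, k) for k in range(m + 1))
    upper = (math.e * n / m) ** m  # floating point
    return lower <= central <= partial_sum <= upper


print(all(check_binomial_bounds(n, m) for n in range(1, 61) for m in range(1, n + 1)))  # True
```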

Exercise 0.0.6 (Improved covering). KK Check that in Corollary 0.0.4,

$$(C + C\varepsilon^2 N)^{\lceil 1/\varepsilon^2 \rceil}$$

balls suffice. Here $C$ is a suitable absolute constant. (Note that this bound is slightly stronger than $N^{\lceil 1/\varepsilon^2 \rceil}$ for small $\varepsilon$.)

Hint: The number of ways to choose an (unordered) subset of $k$ elements from an $N$-element set with repetition is $\binom{N+k-1}{k}$. Simplify using Exercise 0.0.5.

0.0.1 Notes

In this section we gave an illustration of the probabilistic method, where one employs randomness to construct a useful object. The book [8] presents many illustrations of the probabilistic method, mainly in combinatorics.

The empirical method of B. Maurey we presented in this section was originally proposed in [166]. B. Carl used it to get bounds on covering numbers [49] including those stated in Corollary 0.0.4 and Exercise 0.0.6. The bound in Exercise 0.0.6 is sharp [49, 50].

1 Preliminaries on random variables

In this chapter we recall some basic concepts and results of probability theory. The reader should already be familiar with most of this material, which is routinely taught in introductory probability courses.

Expectation, variance, and moments of random variables are introduced in Section 1.1. Some classical inequalities can be found in Section 1.2. The two fundamental limit theorems of probability – the law of large numbers and the central limit theorem – are recalled in Section 1.3.

1.1 Basic quantities associated with random variables

In a basic course in probability theory, we learned about the two most important quantities associated with a random variable $X$, namely the expectation¹ (also called mean) and the variance. They will be denoted in this book by²

$$\mathbb{E} X \quad \text{and} \quad \operatorname{Var}(X) = \mathbb{E}(X - \mathbb{E} X)^2.$$

Let us recall some other classical quantities and functions that describe probability distributions. The moment generating function of $X$ is defined as

$$M_X(t) = \mathbb{E} e^{tX}, \quad t \in \mathbb{R}.$$

For $p > 0$, the $p$-th moment of $X$ is defined as $\mathbb{E} X^p$, and the $p$-th absolute moment is $\mathbb{E}|X|^p$.

It is useful to take the $p$-th root of the moments, which leads to the notion of the $L^p$ norm of a random variable:

$$\|X\|_{L^p} = (\mathbb{E}|X|^p)^{1/p}, \quad p \in (0, \infty).$$

This definition can be extended to $p = \infty$ by the essential supremum of $|X|$:

$$\|X\|_{L^\infty} = \operatorname{ess\,sup} |X|.$$

For fixed $p$ and a given probability space $(\Omega, \Sigma, \mathbb{P})$, the classical vector space $L^p = L^p(\Omega, \Sigma, \mathbb{P})$ consists of all random variables $X$ on $\Omega$ with finite $L^p$ norm, that is

$$L^p = \big\{ X : \|X\|_{L^p} < \infty \big\}.$$

¹ If you studied measure theory, you will recall that the expectation $\mathbb{E} X$ of a random variable $X$ on a probability space $(\Omega, \Sigma, \mathbb{P})$ is, by definition, the Lebesgue integral of the function $X : \Omega \to \mathbb{R}$. This makes all theorems on Lebesgue integration applicable in probability theory, for expectations of random variables.

² Throughout this book, we drop the brackets in the notation $\mathbb{E}[f(X)]$ and simply write $\mathbb{E} f(X)$ instead. Thus, nonlinear functions bind before expectation.

If $p \in [1, \infty]$, the quantity $\|X\|_{L^p}$ is a norm and $L^p$ is a Banach space. This fact follows from Minkowski’s inequality, which we recall in (1.4). For $p < 1$, the triangle inequality fails and $\|X\|_{L^p}$ is not a norm.

The exponent $p = 2$ is special in that $L^2$ is not only a Banach space but also a Hilbert space. The inner product and the corresponding norm on $L^2$ are given by

$$\langle X, Y \rangle_{L^2} = \mathbb{E} XY, \qquad \|X\|_{L^2} = (\mathbb{E}|X|^2)^{1/2}. \tag{1.1}$$

Then the standard deviation of $X$ can be expressed as

$$\|X - \mathbb{E} X\|_{L^2} = \sqrt{\operatorname{Var}(X)} = \sigma(X).$$

Similarly, we can express the covariance of random variables $X$ and $Y$ as

$$\operatorname{cov}(X, Y) = \mathbb{E}(X - \mathbb{E} X)(Y - \mathbb{E} Y) = \langle X - \mathbb{E} X, Y - \mathbb{E} Y \rangle_{L^2}. \tag{1.2}$$

Remark 1.1.1 (Geometry of random variables). When we consider random variables as vectors in the Hilbert space $L^2$, the identity (1.2) gives a geometric interpretation of the notion of covariance. The more the vectors $X - \mathbb{E} X$ and $Y - \mathbb{E} Y$ are aligned with each other, the bigger their inner product and covariance are.

1.2 Some classical inequalities

Jensen’s inequality states that for any random variable $X$ and a convex³ function $\varphi : \mathbb{R} \to \mathbb{R}$, we have

$$\varphi(\mathbb{E} X) \le \mathbb{E} \varphi(X).$$

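As a simple consequence of Jensen’s inequality, $\|X\|_{L^p}$ is a non-decreasing function of $p$, that is

$$\|X\|_{L^p} \le \|X\|_{L^q} \quad \text{for any } 0 < p \le q \le \infty. \tag{1.3}$$

This inequality follows since $\varphi(x) = x^{q/p}$ is a convex function on $[0, \infty)$ if $q/p \ge 1$: applying Jensen’s inequality to the random variable $|X|^p$ gives $(\mathbb{E}|X|^p)^{q/p} \le \mathbb{E}|X|^q$.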
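For a random variable with finitely many values, every $L^p$ norm is a finite sum, so (1.3) can be observed directly. A minimal Python sketch, assuming $X$ is specified by hypothetical lists `values` and `probs`; the case $p = \infty$ uses the maximum of $|X|$ over values of positive probability, which is the essential supremum for such $X$:

```python
import math


def lp_norm(values, probs, p):
    """||X||_{L^p} = (E|X|^p)^(1/p) for a finitely supported X; p = math.inf gives ess sup |X|."""
    if p == math.inf:
        return max(abs(v) for v, q in zip(values, probs) if q > 0)
    return sum(q * abs(v) ** p for v, q in zip(values, probs)) ** (1 / p)


values = [-3.0, 0.5, 1.0, 2.0]
probs = [0.1, 0.4, 0.3, 0.2]
ps = [0.5, 1, 1.5, 2, 3, 10, math.inf]
norms = [lp_norm(values, probs, p) for p in ps]
print([round(x, 4) for x in norms])  # non-decreasing in p, as (1.3) predicts
```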

Minkowski’s inequality states that for any $p \in [1, \infty]$ and any random variables $X, Y \in L^p$, we have

$$\|X + Y\|_{L^p} \le \|X\|_{L^p} + \|Y\|_{L^p}. \tag{1.4}$$

This can be viewed as the triangle inequality, which implies that $\|\cdot\|_{L^p}$ is a norm when $p \in [1, \infty]$.

The Cauchy-Schwarz inequality states that for any random variables $X, Y \in L^2$, we have

$$|\mathbb{E} XY| \le \|X\|_{L^2} \|Y\|_{L^2}.$$

³ By definition, a function $\varphi$ is convex if $\varphi(\lambda x + (1 - \lambda) y) \le \lambda \varphi(x) + (1 - \lambda) \varphi(y)$ for all $\lambda \in [0, 1]$ and all vectors $x, y$ in the domain of $\varphi$.

The more general Hölder’s inequality states that if $p, q \in (1, \infty)$ are conjugate exponents, that is $1/p + 1/q = 1$, then the random variables $X \in L^p$ and $Y \in L^q$ satisfy

$$|\mathbb{E} XY| \le \|X\|_{L^p} \|Y\|_{L^q}.$$

This inequality also holds for the pair $p = 1$, $q = \infty$.

As we recall from a basic probability course, the distribution of a random variable $X$ is, intuitively, the information about what values $X$ takes with what probabilities. More rigorously, the distribution of $X$ is determined by the cumulative distribution function (CDF) of $X$, defined as

$$F_X(t) = \mathbb{P}\{X \le t\}, \quad t \in \mathbb{R}.$$

It is often more convenient to work with tails of random variables, namely with

$$\mathbb{P}\{X > t\} = 1 - F_X(t).$$

There is an important connection between the tails and the expectation (and more generally, the moments) of a random variable. The following identity is typically used to bound the expectation by tails.

Lemma 1.2.1 (Integral identity). Let $X$ be a non-negative random variable. Then

$$\mathbb{E} X = \int_0^\infty \mathbb{P}\{X > t\}\,dt.$$

The two sides of this identity are either finite or infinite simultaneously.

Proof We can represent any non-negative real number $x$ via the identity⁴

$$x = \int_0^x 1\,dt = \int_0^\infty \mathbf{1}_{\{t < x\}}\,dt.$$

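Substitute the random variable $X$ for $x$ and take expectation of both sides. This gives

$$\mathbb{E} X = \mathbb{E} \int_0^\infty \mathbf{1}_{\{t < X\}}\,dt = \int_0^\infty \mathbb{E}\, \mathbf{1}_{\{t < X\}}\,dt = \int_0^\infty \mathbb{P}\{t < X\}\,dt.$$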

To change the order of expectation and integration in the second equality, we used the Fubini-Tonelli theorem, which applies because the integrand is non-negative. The proof is complete.

Exercise 1.2.2 (Generalization of integral identity). K Prove the following extension of Lemma 1.2.1, which is valid for any random variable $X$ (not necessarily non-negative):

$$\mathbb{E} X = \int_0^\infty \mathbb{P}\{X > t\}\,dt - \int_{-\infty}^0 \mathbb{P}\{X < t\}\,dt.$$

⁴ Here and later in this book, $\mathbf{1}_E$ denotes the indicator of the event $E$, which is the function that takes value 1 if $E$ occurs and 0 otherwise.

Exercise 1.2.3 ($p$-moments via tails). K Let $X$ be a random variable and $p \in (0, \infty)$. Show that

$$\mathbb{E}|X|^p = \int_0^\infty p t^{p-1}\, \mathbb{P}\{|X| > t\}\,dt$$

whenever the right-hand side is finite.

Hint: Use the integral identity for $|X|^p$ and change variables.
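For a non-negative random variable with finitely many values, the tail $\mathbb{P}\{X > t\}$ is a step function, so the integrals in Lemma 1.2.1 and Exercise 1.2.3 can be evaluated exactly. The sketch below is an illustration under that assumption (hypothetical function names; an integer moment order $p$ so that `Fraction` arithmetic stays exact). It uses $\int_a^b p t^{p-1}\,dt = b^p - a^p$ on each interval where the tail is constant.

```python
from fractions import Fraction


def moment_via_tails(dist, p=1):
    """Integral of p t^(p-1) P{X > t} over [0, inf), computed exactly.

    `dist` maps non-negative values to probabilities; p is a positive integer.
    On [a, b) between consecutive support points, P{X > t} = P{X >= b}."""
    total = Fraction(0)
    left = Fraction(0)
    for b in sorted(dist):
        tail = sum(q for v, q in dist.items() if v >= b)
        total += (Fraction(b) ** p - left ** p) * tail
        left = Fraction(b)
    return total  # P{X > t} = 0 beyond the largest value


def moment_direct(dist, p=1):
    """E X^p computed directly from the distribution."""
    return sum(Fraction(v) ** p * q for v, q in dist.items())


dist = {0: Fraction(1, 4), 1: Fraction(1, 4), 3: Fraction(1, 2)}
print(moment_via_tails(dist), moment_direct(dist))  # 7/4 7/4
```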

Another classical tool, Markov’s inequality, can be used to bound the tail in terms of expectation.

Proposition 1.2.4 (Markov’s Inequality). For any non-negative random variable $X$ and $t > 0$, we have

$$\mathbb{P}\{X \ge t\} \le \frac{\mathbb{E} X}{t}.$$

Proof Fix $t > 0$. We can represent any real number $x$ via the identity

$$x = x\mathbf{1}_{\{x \ge t\}} + x\mathbf{1}_{\{x < t\}}.$$

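Substitute the random variable $X$ for $x$ and take expectation of both sides. This gives

$$\mathbb{E} X = \mathbb{E} X\mathbf{1}_{\{X \ge t\}} + \mathbb{E} X\mathbf{1}_{\{X < t\}} \ge \mathbb{E}\, t\mathbf{1}_{\{X \ge t\}} + 0 = t \cdot \mathbb{P}\{X \ge t\}.$$

Dividing both sides by $t$, we complete the proof.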

A well-known consequence of Markov’s inequality is the following Chebyshev’s inequality. It offers a better, quadratic dependence on $t$, and instead of the plain tails, it quantifies the concentration of $X$ about its mean.

Corollary 1.2.5 (Chebyshev’s inequality). Let $X$ be a random variable with mean $\mu$ and variance $\sigma^2$. Then, for any $t > 0$, we have

$$\mathbb{P}\{|X - \mu| \ge t\} \le \frac{\sigma^2}{t^2}.$$

Exercise 1.2.6. K Deduce Chebyshev’s inequality by squaring both sides of the bound $|X - \mu| \ge t$ and applying Markov’s inequality.
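Both inequalities are easy to test against exact probabilities. As an illustration (our own example, not from the text), the sketch below takes $X$ uniform on $\{0, 1, \ldots, 10\}$, so that $\mu = 5$ and $\sigma^2 = 10$, computes $\mathbb{P}\{X \ge t\}$ and $\mathbb{P}\{|X - \mu| \ge t\}$ with `fractions.Fraction`, and prints them next to the Markov and Chebyshev bounds for several thresholds $t$.

```python
from fractions import Fraction

values = range(11)                               # X uniform on {0, 1, ..., 10}
prob = Fraction(1, len(values))
mu = sum(prob * v for v in values)               # E X = 5
var = sum(prob * (v - mu) ** 2 for v in values)  # Var(X) = 10


def markov(t):
    """(exact P{X >= t}, Markov bound E X / t)."""
    return sum(prob for v in values if v >= t), mu / t


def chebyshev(t):
    """(exact P{|X - mu| >= t}, Chebyshev bound Var(X) / t^2)."""
    return sum(prob for v in values if abs(v - mu) >= t), var / t**2


for t in (1, 2, 5, 8):
    print(t, *markov(t), *chebyshev(t))
```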

Remark 1.2.7. In Proposition 2.5.2 we will establish relations among the three basic quantities associated with random variables – the moment generating functions, the $L^p$ norms, and the tails.

1.3 Limit theorems

The study of sums of independent random variables forms the core of classical probability theory. Recall that the identity

$$\operatorname{Var}(X_1 + \cdots + X_N) = \operatorname{Var}(X_1) + \cdots + \operatorname{Var}(X_N)$$

holds for any independent random variables $X_1, \ldots, X_N$. If, furthermore, $X_i$ have the same distribution with mean $\mu$ and variance $\sigma^2$, then dividing both sides by $N^2$ we see that

$$\operatorname{Var}\Big(\frac{1}{N}\sum_{i=1}^N X_i\Big) = \frac{\sigma^2}{N}. \tag{1.5}$$

Thus, the variance of the sample mean $\frac{1}{N}\sum_{i=1}^N X_i$ of the sample $\{X_1, \ldots, X_N\}$ shrinks to zero as $N \to \infty$. This indicates that for large $N$, we should expect that the sample mean concentrates tightly about its expectation $\mu$. One of the most important results in probability theory – the law of large numbers – states precisely this.

Theorem 1.3.1 (Strong law of large numbers). Let $X_1, X_2, \ldots$ be a sequence of i.i.d. random variables with mean $\mu$. Consider the sum

$$S_N = X_1 + \cdots + X_N.$$

Then, as $N \to \infty$,

$$\frac{S_N}{N} \to \mu \quad \text{almost surely}.$$

The next result, the central limit theorem, goes one step further. It identifies the limiting distribution of the (properly scaled) sum of $X_i$’s as the normal distribution, sometimes also called the Gaussian distribution. Recall that the standard normal distribution, denoted $N(0, 1)$, has density

$$f(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2}, \quad x \in \mathbb{R}. \tag{1.6}$$

Theorem 1.3.2 (Lindeberg-Lévy central limit theorem). Let $X_1, X_2, \ldots$ be a sequence of i.i.d. random variables with mean $\mu$ and variance $\sigma^2$. Consider the sum

$$S_N = X_1 + \cdots + X_N$$

and normalize it to obtain a random variable with zero mean and unit variance as follows:

$$Z_N := \frac{S_N - \mathbb{E} S_N}{\sqrt{\operatorname{Var}(S_N)}} = \frac{1}{\sigma\sqrt{N}} \sum_{i=1}^N (X_i - \mu).$$

Then, as $N \to \infty$,

$$Z_N \to N(0, 1) \quad \text{in distribution}.$$

The convergence in distribution means that the CDF of the normalized sum converges pointwise to the CDF of the standard normal distribution. We can express this in terms of tails as follows: for every $t \in \mathbb{R}$, we have

$$\mathbb{P}\{Z_N \ge t\} \to \mathbb{P}\{g \ge t\} = \frac{1}{\sqrt{2\pi}} \int_t^\infty e^{-x^2/2}\,dx$$

as $N \to \infty$, where $g \sim N(0, 1)$ is a standard normal random variable.

Exercise 1.3.3. K Let $X_1, X_2, \ldots$ be a sequence of i.i.d. random variables with mean $\mu$ and finite variance. Show that

$$\mathbb{E}\Big| \frac{1}{N}\sum_{i=1}^N X_i - \mu \Big| = O\Big(\frac{1}{\sqrt{N}}\Big) \quad \text{as } N \to \infty.$$
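For fair coin flips, $X_i \sim \operatorname{Ber}(1/2)$ with $\mu = 1/2$ and $\sigma^2 = 1/4$, both (1.5) and the quantity in Exercise 1.3.3 can be computed exactly from binomial probabilities. The sketch below is an illustration with hypothetical function names: it checks $\operatorname{Var}(S_N/N) = \sigma^2/N$ in exact arithmetic and prints $\sqrt{N} \cdot \mathbb{E}|S_N/N - 1/2|$, which stays bounded, consistent with the $O(1/\sqrt{N})$ rate. By the central limit theorem this product approaches $\sigma\sqrt{2/\pi} \approx 0.399$.

```python
import math
from fractions import Fraction


def fair_coin_pmf(n):
    """Exact P{S_n = k}, k = 0..n, for S_n ~ Binom(n, 1/2)."""
    return [Fraction(math.comb(n, k), 2**n) for k in range(n + 1)]


def var_sample_mean(n):
    """Var(S_n / n), computed exactly from the pmf."""
    pmf = fair_coin_pmf(n)
    m = sum(p * Fraction(k, n) for k, p in enumerate(pmf))
    return sum(p * (Fraction(k, n) - m) ** 2 for k, p in enumerate(pmf))


def mean_abs_deviation(n):
    """E|S_n / n - 1/2| (Exercise 1.3.3 with mu = 1/2), returned as a float."""
    pmf = fair_coin_pmf(n)
    half = Fraction(1, 2)
    return float(sum(p * abs(Fraction(k, n) - half) for k, p in enumerate(pmf)))


print(var_sample_mean(20) == Fraction(1, 4 * 20))  # True: (1.5) with sigma^2 = 1/4
for n in (10, 100, 1000):
    print(n, math.sqrt(n) * mean_abs_deviation(n))
```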

One remarkable special case of the central limit theorem is where $X_i$ are Bernoulli random variables with some fixed parameter $p \in (0, 1)$, denoted

$$X_i \sim \operatorname{Ber}(p).$$

Recall that this means that $X_i$ take values 1 and 0 with probabilities $p$ and $1 - p$ respectively; also recall that $\mathbb{E} X_i = p$ and $\operatorname{Var}(X_i) = p(1 - p)$. The sum

$$S_N := X_1 + \cdots + X_N$$

is said to have the binomial distribution $\operatorname{Binom}(N, p)$. The central limit theorem (Theorem 1.3.2) yields that as $N \to \infty$,

$$\frac{S_N - Np}{\sqrt{Np(1 - p)}} \to N(0, 1) \quad \text{in distribution}. \tag{1.7}$$

This special case of the central limit theorem is called the de Moivre-Laplace theorem.

Now suppose that $X_i \sim \operatorname{Ber}(p_i)$ with parameters $p_i$ that decay to zero as $N \to \infty$ so fast that the sum $S_N$ has mean $O(1)$ instead of being proportional to $N$. The central limit theorem fails in this regime. A different result we are about to state says that $S_N$ still converges, but to the Poisson instead of the normal distribution.

Recall that a random variable $Z$ has the Poisson distribution with parameter $\lambda$, denoted

$$Z \sim \operatorname{Pois}(\lambda),$$

if it takes values in $\{0, 1, 2, \ldots\}$ with probabilities

$$\mathbb{P}\{Z = k\} = e^{-\lambda} \frac{\lambda^k}{k!}, \quad k = 0, 1, 2, \ldots \tag{1.8}$$

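Theorem 1.3.4 (Poisson Limit Theorem). Let $X_{N,i}$, $1 \le i \le N$, be independent random variables $X_{N,i} \sim \operatorname{Ber}(p_{N,i})$, and let $S_N = \sum_{i=1}^N X_{N,i}$. Assume that, as $N \to \infty$,

$$\max_{i \le N} p_{N,i} \to 0 \quad \text{and} \quad \mathbb{E} S_N = \sum_{i=1}^N p_{N,i} \to \lambda < \infty.$$

Then, as $N \to \infty$,

$$S_N \to \operatorname{Pois}(\lambda) \quad \text{in distribution}.$$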
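The Poisson limit can be watched numerically in the simplest case $p_{N,i} = \lambda/N$, where $S_N \sim \operatorname{Binom}(N, \lambda/N)$. The sketch below is our own illustration (hypothetical function names): it computes the total variation distance $\frac12\sum_k |\mathbb{P}\{S_N = k\} - \mathbb{P}\{Z = k\}|$ between $\operatorname{Binom}(N, \lambda/N)$ and $\operatorname{Pois}(\lambda)$, building both probability mass functions by their ratio recursions so that no huge factorials are converted to floats.

```python
import math


def binom_pmf(n, p):
    """P{S = k}, k = 0..n, for S ~ Binom(n, p), via P{S = k} = P{S = k-1} (n-k+1)/k * p/(1-p)."""
    pmf = [(1 - p) ** n]
    for k in range(1, n + 1):
        pmf.append(pmf[-1] * (n - k + 1) / k * p / (1 - p))
    return pmf


def poisson_pmf(lam, kmax):
    """P{Z = k}, k = 0..kmax, for Z ~ Pois(lam) as in (1.8), via P{Z = k} = P{Z = k-1} lam / k."""
    pmf = [math.exp(-lam)]
    for k in range(1, kmax + 1):
        pmf.append(pmf[-1] * lam / k)
    return pmf


def tv_binom_poisson(n, lam):
    """Total variation distance between Binom(n, lam/n) and Pois(lam), for lam < n."""
    b = binom_pmf(n, lam / n)
    q = poisson_pmf(lam, n)
    inside = sum(abs(x - y) for x, y in zip(b, q))
    outside = max(0.0, 1.0 - sum(q))  # Poisson mass on {n+1, n+2, ...}
    return 0.5 * (inside + outside)


for n in (10, 100, 1000, 10000):
    print(n, tv_binom_poisson(n, 2.0))
```

The printed distances decrease as $N$ grows, in line with Theorem 1.3.4.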


1.4 Notes

The material presented in this chapter is included in most graduate probability textbooks. In particular, proofs of the strong law of large numbers (Theorem 1.3.1) and the Lindeberg-Lévy central limit theorem (Theorem 1.3.2) can be found e.g. in [72, Sections 1.7 and 2.4] and [23, Sections 6 and 27].

Both Proposition 1.2.4 and Corollary 1.2.5 are due to Chebyshev. However, following the established tradition, we call Proposition 1.2.4 Markov’s inequality.

2 Concentration of sums of independent random variables

This chapter introduces the reader to the rich topic of concentration inequalities. After motivating the subject in Section 2.1, we prove some basic concentration inequalities: Hoeffding’s in Sections 2.2 and 2.6, Chernoff’s in Section 2.3 and Bernstein’s in Section 2.8. Another goal of this chapter is to introduce two important classes of distributions: sub-gaussian in Section 2.5 and sub-exponential in Section 2.7. These classes form a natural “habitat” in which many results of high-dimensional probability and its applications will be developed. We give two quick applications of concentration inequalities: to randomized algorithms in Section 2.2 and to random graphs in Section 2.4. Many more applications are given later in the book.

2.1 Why concentration inequalities?

Concentration inequalities quantify how a random variable $X$ deviates around its mean $\mu$. They usually take the form of two-sided bounds for the tails of $X - \mu$, such as

$$\mathbb{P}\{|X - \mu| > t\} \le \text{something small}.$$

The simplest concentration inequality is Chebyshev’s inequality (Corollary 1.2.5). It is very general but often too weak. Let us illustrate this with the example of the binomial distribution.

Question 2.1.1. Toss a fair coin $N$ times. What is the probability that we get at least $\frac{3}{4}N$ heads?

Let $S_N$ denote the number of heads. Then

$$\mathbb{E} S_N = \frac{N}{2}, \qquad \operatorname{Var}(S_N) = \frac{N}{4}.$$

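Chebyshev’s inequality bounds the probability of getting at least $\frac{3}{4}N$ heads as follows:

$$\mathbb{P}\Big\{S_N \ge \frac{3}{4}N\Big\} \le \mathbb{P}\Big\{\Big|S_N - \frac{N}{2}\Big| \ge \frac{N}{4}\Big\} \le \frac{4}{N}. \tag{2.1}$$

So the probability converges to zero at least linearly in $N$.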
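To see how loose (2.1) is, one can compute the exact binomial tail $\mathbb{P}\{S_N \ge \frac{3}{4}N\} = 2^{-N}\sum_{k \ge 3N/4}\binom{N}{k}$ with integer arithmetic. The short Python sketch below (our own illustration; the function name is hypothetical, Python 3.8+ for `math.comb`) prints it next to the Chebyshev bound $4/N$. The exact values fall off far faster than $4/N$.

```python
import math


def coin_tail(n):
    """Exact P{S_n >= 3n/4}, where S_n is the number of heads in n fair coin tosses."""
    k_min = -(-3 * n // 4)  # ceil(3n/4) using integer arithmetic
    return sum(math.comb(n, k) for k in range(k_min, n + 1)) / 2**n


for n in (8, 20, 50, 100, 200):
    print(f"N={n:3d}  exact={coin_tail(n):.3e}  Chebyshev 4/N={4 / n:.3e}")
```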


Is this the right rate of decay, or should we expect something faster? Let us approach the same question using the central limit theorem. To do this, we represent $S_N$ as a sum of independent random variables:

$$S_N = \sum_{i=1}^N X_i$$

where $X_i$ are independent Bernoulli random variables with parameter 1/2, i.e. $\mathbb{P}\{X_i = 0\} = \mathbb{P}\{X_i = 1\} = 1/2$. (These $X_i$ are the indicators of heads.) The de Moivre-Laplace central limit theorem (1.7) states that the distribution of the normalized number of heads

$$Z_N = \frac{S_N - N/2}{\sqrt{N/4}}$$

converges to the standard normal distribution $N(0, 1)$. Thus we should anticipate that for large $N$, we have

$$\mathbb{P}\Big\{S_N \ge \frac{3}{4}N\Big\} = \mathbb{P}\Big\{Z_N \ge \sqrt{N/4}\Big\} \approx \mathbb{P}\Big\{g \ge \sqrt{N/4}\Big\} \tag{2.2}$$

where $g \sim N(0, 1)$. To understand how this quantity decays in $N$, we now get a good bound on the tails of the normal distribution.

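Proposition 2.1.2 (Tails of the normal distribution). Let $g \sim N(0, 1)$. Then for all $t > 0$, we have

$$\Big(\frac{1}{t} - \frac{1}{t^3}\Big) \cdot \frac{1}{\sqrt{2\pi}} e^{-t^2/2} \le \mathbb{P}\{g \ge t\} \le \frac{1}{t} \cdot \frac{1}{\sqrt{2\pi}} e^{-t^2/2}.$$

In particular, for $t \ge 1$ the tail is bounded by the density:

$$\mathbb{P}\{g \ge t\} \le \frac{1}{\sqrt{2\pi}} e^{-t^2/2}. \tag{2.3}$$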

Proof To obtain an upper bound on the tail

$$\mathbb{P}\{g \ge t\} = \frac{1}{\sqrt{2\pi}} \int_t^\infty e^{-x^2/2}\,dx,$$

let us change variables $x = t + y$. This gives

$$\mathbb{P}\{g \ge t\} = \frac{1}{\sqrt{2\pi}} \int_0^\infty e^{-t^2/2} e^{-ty} e^{-y^2/2}\,dy \le \frac{1}{\sqrt{2\pi}} e^{-t^2/2} \int_0^\infty e^{-ty}\,dy,$$

where we used that $e^{-y^2/2} \le 1$. Since the last integral equals $1/t$, the desired upper bound on the tail follows.

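The lower bound follows from the identity

$$\int_t^\infty (1 - 3x^{-4})\, e^{-x^2/2}\,dx = \Big(\frac{1}{t} - \frac{1}{t^3}\Big) e^{-t^2/2},$$

which can be checked by differentiating both sides in $t$. Since $1 - 3x^{-4} \le 1$, the left-hand side is at most $\int_t^\infty e^{-x^2/2}\,dx = \sqrt{2\pi}\,\mathbb{P}\{g \ge t\}$. This completes the proof.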
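The bounds of Proposition 2.1.2 and (2.3) can be compared with the exact tail, which the Python standard library provides through the complementary error function: $\mathbb{P}\{g \ge t\} = \frac{1}{2}\operatorname{erfc}(t/\sqrt{2})$, available as `math.erfc`. A short sketch; the function names are our own:

```python
import math


def normal_tail(t):
    """P{g >= t} for g ~ N(0, 1), via the complementary error function."""
    return 0.5 * math.erfc(t / math.sqrt(2))


def density(t):
    """Standard normal density (1.6)."""
    return math.exp(-t * t / 2) / math.sqrt(2 * math.pi)


def tail_bounds(t):
    """Lower and upper bounds of Proposition 2.1.2, valid for t > 0."""
    return (1 / t - 1 / t**3) * density(t), density(t) / t


for t in (0.5, 1.0, 2.0, 3.0, 5.0):
    lo, hi = tail_bounds(t)
    print(f"t={t}: {lo:.3e} <= {normal_tail(t):.3e} <= {hi:.3e}")
```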


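Returning to (2.2) and applying (2.3) with $t = \sqrt{N/4}$ (which is at least 1 when $N \ge 4$), we see that we should expect the probability of having at least $\frac{3}{4}N$ heads to be smaller than

$$\frac{1}{\sqrt{2\pi}} e^{-N/8}. \tag{2.4}$$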

This quantity decays to zero exponentially fast in N, which is much better than

the linear decay in (2.1) that follows from Chebyshev’s inequality.

Unfortunately, (2.4) does not follow rigorously from the central limit theorem. Although the approximation by the normal density in (2.2) is valid, the error of approximation cannot be ignored. And, unfortunately, the error decays too slowly: even slower than linearly in $N$. This can be seen from the following sharp quantitative version of the central limit theorem.

Theorem 2.1.3 (Berry-Esseen central limit theorem). In the setting of Theorem 1.3.2, for every $N$ and every $t \in \mathbb{R}$ we have

$$\left|\mathbb{P}\{Z_N \ge t\} - \mathbb{P}\{g \ge t\}\right| \le \frac{\rho}{\sqrt{N}}.$$

Here $\rho = \mathbb{E}|X_1 - \mu|^3/\sigma^3$ and $g \sim N(0,1)$.

Thus the approximation error in (2.2) is of order $1/\sqrt{N}$, which ruins the desired exponential decay (2.4).

Can we improve the approximation error in the central limit theorem? In general, no. If $N$ is even, then the probability of getting exactly $N/2$ heads is

$$\mathbb{P}\{S_N = N/2\} = 2^{-N}\binom{N}{N/2} \asymp \frac{1}{\sqrt{N}};$$

the last estimate can be obtained using Stirling's approximation.¹ (Do it!) Hence, $\mathbb{P}\{Z_N = 0\} \asymp 1/\sqrt{N}$. On the other hand, since the normal distribution is continuous, we have $\mathbb{P}\{g = 0\} = 0$. Thus the approximation error here has to be of order $1/\sqrt{N}$.
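The estimate $2^{-N}\binom{N}{N/2} \asymp 1/\sqrt{N}$ can be checked with exact integer arithmetic. Stirling's formula predicts the sharper statement $\sqrt{N}\,\mathbb{P}\{S_N = N/2\} \to \sqrt{2/\pi} \approx 0.798$. The sketch below prints this product for growing even $N$; the helper name is our own.

```python
import math

def prob_exactly_half(n: int) -> float:
    """P{S_N = N/2} = 2^{-N} * binom(N, N/2) for N fair coin tosses, N even."""
    if n % 2:
        raise ValueError("N must be even")
    # Python ints are exact, and int / int true division rounds correctly even for huge ints.
    return math.comb(n, n // 2) / 2**n

for n in [10, 100, 1_000, 10_000]:
    p = prob_exactly_half(n)
    print(n, p, math.sqrt(n) * p)  # last column approaches sqrt(2/pi) ~ 0.7979
```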

Let us summarize our situation. The central limit theorem offers an approximation of a sum of independent random variables $S_N = X_1 + \dots + X_N$ by the normal distribution. The normal distribution is especially nice due to its very light, exponentially decaying tails. At the same time, the error of approximation in the central limit theorem decays too slowly, even slower than linearly. This large error is a roadblock toward proving concentration properties for $S_N$ with light, exponentially decaying tails.

In order to resolve this issue, we develop alternative, direct approaches to concentration, which bypass the central limit theorem.

¹ Our somewhat informal notation $f \asymp g$ stands for the equivalence of functions (functions of $N$ in this particular example) up to constant factors. Precisely, $f \asymp g$ means that there exist positive constants $c, C$ such that the inequality $cf(x) \le g(x) \le Cf(x)$ holds for all $x$, or sometimes for all sufficiently large $x$. For similar one-sided inequalities that hold up to constant factors, we use the notation $f \lesssim g$ and $f \gtrsim g$.


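Exercise 2.1.4 (Truncated normal distribution). K Let $g \sim N(0,1)$. Show that for all $t \ge 1$, we have

$$\mathbb{E}\, g^2 \mathbf{1}_{\{g > t\}} = t \cdot \frac{1}{\sqrt{2\pi}} e^{-t^2/2} + \mathbb{P}\{g > t\} \le \left(t + \frac{1}{t}\right) \frac{1}{\sqrt{2\pi}} e^{-t^2/2}.$$

Hint: Integrate by parts.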
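Before proving the identity, one can compare both sides numerically. The sketch below approximates $\int_t^\infty x^2\varphi(x)\,dx$ with composite Simpson's rule, where $\varphi$ is the standard normal density. It truncates the integral at $t + 40$, which is our own choice; the neglected mass is far below double precision. The function names are hypothetical helpers.

```python
import math

def phi(x: float) -> float:
    """Standard normal density."""
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)

def truncated_second_moment(t: float, length: float = 40.0, steps: int = 40_000) -> float:
    """Composite Simpson approximation of E[g^2 1{g > t}] = int_t^inf x^2 phi(x) dx,
    truncated at t + length; `steps` must be even."""
    h = length / steps
    total = t * t * phi(t) + (t + length) ** 2 * phi(t + length)
    for k in range(1, steps):
        x = t + k * h
        total += (4 if k % 2 else 2) * x * x * phi(x)
    return total * h / 3.0

def closed_form(t: float) -> float:
    """Right-hand side of the identity: t * phi(t) + P{g > t}."""
    return t * phi(t) + 0.5 * math.erfc(t / math.sqrt(2.0))

for t in [1.0, 2.0, 3.0]:
    print(t, truncated_second_moment(t), closed_form(t), (t + 1.0 / t) * phi(t))
```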

2.2 Hoeffding’s inequality

We start with a particularly simple concentration inequality, which holds for sums

of i.i.d. symmetric Bernoulli random variables.

Definition 2.2.1 (Symmetric Bernoulli distribution). A random variable $X$ has symmetric Bernoulli distribution (also called Rademacher distribution) if it takes values $-1$ and $1$ with probabilities $1/2$ each, i.e.

$$\mathbb{P}\{X = -1\} = \mathbb{P}\{X = 1\} = \frac{1}{2}.$$

Clearly, a random variable $X$ has the (usual) Bernoulli distribution with parameter $1/2$ if and only if $Z = 2X - 1$ has symmetric Bernoulli distribution.

Theorem 2.2.2 (Hoeffding's inequality). Let $X_1, \dots, X_N$ be independent symmetric Bernoulli random variables, and $a = (a_1, \dots, a_N) \in \mathbb{R}^N$. Then, for any $t \ge 0$, we have

$$\mathbb{P}\left\{\sum_{i=1}^N a_i X_i \ge t\right\} \le \exp\left(-\frac{t^2}{2\|a\|_2^2}\right).$$

Proof We can assume without loss of generality that $\|a\|_2 = 1$. (Why?)

Let us recall how we deduced Chebyshev's inequality (Corollary 1.2.5): we squared both sides and applied Markov's inequality. Let us do something similar here. But instead of squaring both sides, let us multiply by a fixed parameter $\lambda > 0$ (to be chosen later) and exponentiate. This gives

$$\mathbb{P}\left\{\sum_{i=1}^N a_i X_i \ge t\right\} = \mathbb{P}\left\{\exp\left(\lambda \sum_{i=1}^N a_i X_i\right) \ge \exp(\lambda t)\right\} \le e^{-\lambda t}\, \mathbb{E}\exp\left(\lambda \sum_{i=1}^N a_i X_i\right). \tag{2.5}$$

In the last step we applied Markov’s inequality (Proposition 1.2.4).

We thus reduced the problem to bounding the moment generating function (MGF) of the sum $\sum_{i=1}^N a_i X_i$. As we recall from a basic probability course, the MGF of the sum is the product of the MGFs of the terms; this follows immediately from independence. Thus

$$\mathbb{E}\exp\left(\lambda \sum_{i=1}^N a_i X_i\right) = \prod_{i=1}^N \mathbb{E}\exp(\lambda a_i X_i). \tag{2.6}$$


Let us fix $i$. Since $X_i$ takes values $-1$ and $1$ with probabilities $1/2$ each, we have

$$\mathbb{E}\exp(\lambda a_i X_i) = \frac{\exp(\lambda a_i) + \exp(-\lambda a_i)}{2} = \cosh(\lambda a_i).$$

Exercise 2.2.3 (Bounding the hyperbolic cosine). K Show that

$$\cosh(x) \le \exp(x^2/2) \quad \text{for all } x \in \mathbb{R}.$$

Hint: Compare the Taylor expansions of both sides.
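The hint can be explored numerically. Both series contain only even powers: $\cosh(x) = \sum_k x^{2k}/(2k)!$ and $\exp(x^2/2) = \sum_k x^{2k}/(2^k k!)$. So it suffices to compare $(2k)!$ with $2^k k!$. The sketch below checks this for small $k$ and also scans the ratio $\cosh(x)/\exp(x^2/2)$ on a grid. A finite check is not a proof, and the function names are our own.

```python
import math

def coefficients_dominated(max_k: int) -> bool:
    """Check (2k)! >= 2^k k! for k = 0..max_k, i.e. each Taylor coefficient 1/(2k)! of cosh(x)
    is at most the matching coefficient 1/(2^k k!) of exp(x^2/2)."""
    return all(math.factorial(2 * k) >= 2**k * math.factorial(k) for k in range(max_k + 1))

def max_ratio(lo: float, hi: float, steps: int) -> float:
    """Largest value of cosh(x) / exp(x^2 / 2) over an evenly spaced grid on [lo, hi]."""
    xs = [lo + (hi - lo) * j / steps for j in range(steps + 1)]
    return max(math.cosh(x) / math.exp(x * x / 2.0) for x in xs)

print(coefficients_dominated(50))     # True
print(max_ratio(-10.0, 10.0, 2000))   # 1.0, attained at x = 0
```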

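This bound shows that

$$\mathbb{E}\exp(\lambda a_i X_i) \le \exp(\lambda^2 a_i^2/2).$$

Substituting into (2.6) and then into (2.5), we obtain

$$\mathbb{P}\left\{\sum_{i=1}^N a_i X_i \ge t\right\} \le e^{-\lambda t} \prod_{i=1}^N \exp(\lambda^2 a_i^2/2) = \exp\left(-\lambda t + \frac{\lambda^2}{2}\sum_{i=1}^N a_i^2\right) = \exp\left(-\lambda t + \frac{\lambda^2}{2}\right).$$

In the last identity, we used the assumption that $\|a\|_2 = 1$.

This bound holds for arbitrary $\lambda > 0$. It remains to optimize in $\lambda$. The exponent $-\lambda t + \lambda^2/2$ is a quadratic function of $\lambda$, so its minimum is attained at $\lambda = t$. With this choice, we obtain

$$\mathbb{P}\left\{\sum_{i=1}^N a_i X_i \ge t\right\} \le \exp(-t^2/2).$$

This completes the proof of Hoeffding's inequality.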
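To see Theorem 2.2.2 at work, the sketch below estimates $\mathbb{P}\{\sum_i a_i X_i \ge t\}$ by Monte Carlo simulation and compares it with the bound. It uses an arbitrary fixed coefficient vector $a$, and that choice, the seed and the trial counts are all our own assumptions. The empirical tail is typically much smaller than the bound, since Hoeffding's inequality is not tight in its constant.

```python
import math
import random

def hoeffding_bound(t: float, a: list[float]) -> float:
    """One-sided bound exp(-t^2 / (2 ||a||_2^2)) of Theorem 2.2.2."""
    return math.exp(-t * t / (2.0 * sum(x * x for x in a)))

def empirical_tail(t: float, a: list[float], trials: int, rng: random.Random) -> float:
    """Monte Carlo estimate of P{sum_i a_i X_i >= t} for i.i.d. symmetric Bernoulli X_i."""
    hits = 0
    for _ in range(trials):
        s = sum(ai if rng.random() < 0.5 else -ai for ai in a)
        if s >= t:
            hits += 1
    return hits / trials

rng = random.Random(0)
a = [rng.uniform(-1.0, 1.0) for _ in range(50)]  # an arbitrary fixed coefficient vector
norm = math.sqrt(sum(x * x for x in a))
for u in [1.0, 2.0, 3.0]:
    t = u * norm  # deviations measured in units of ||a||_2, as in the proof
    print(f"t = {u} ||a||_2: empirical {empirical_tail(t, a, 10_000, rng):.4f}, "
          f"bound {hoeffding_bound(t, a):.4f}")
```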

We can view Hoeffding's inequality as a concentration version of the central limit theorem. Indeed, the most we may expect from a concentration inequality is that the tail of $\sum a_i X_i$ behaves similarly to the tail of the normal distribution. And for all practical purposes, Hoeffding's tail bound does that. With the normalization $\|a\|_2 = 1$, Hoeffding's inequality provides the tail $e^{-t^2/2}$, which is exactly the same as the bound for the standard normal tail in (2.3). This is good news. We have been able to obtain the same exponentially light tails for sums as for the normal distribution, even though the difference of these two distributions is not exponentially small.

Armed with Hoeffding's inequality, we can now return to Question 2.1.1 of bounding the probability of at least $\frac{3}{4}N$ heads in $N$ tosses of a fair coin. After rescaling from Bernoulli to symmetric Bernoulli, we obtain that this probability is exponentially small in $N$, namely

$$\mathbb{P}\left\{\text{at least } \tfrac{3}{4}N \text{ heads}\right\} \le \exp(-N/8).$$

(Check this.)
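The three estimates of Question 2.1.1 can be compared against the exact binomial tail, which exact integer arithmetic computes easily. These are Chebyshev's bound $4/N$ from (2.1), the Gaussian heuristic (2.4), and Hoeffding's bound $e^{-N/8}$. Note that (2.4) is only a heuristic, not a bound. The helper name below is our own.

```python
import math

def heads_tail(n: int) -> float:
    """Exact P{at least 3N/4 heads} in N fair coin tosses, using exact integer counts."""
    k_min = (3 * n + 3) // 4  # = ceil(3N/4)
    return sum(math.comb(n, k) for k in range(k_min, n + 1)) / 2**n

for n in [20, 100, 400]:
    print(f"N={n}: exact {heads_tail(n):.3e}, "
          f"Chebyshev 4/N {4 / n:.3e}, "
          f"Hoeffding exp(-N/8) {math.exp(-n / 8):.3e}, "
          f"heuristic (2.4) {math.exp(-n / 8) / math.sqrt(2 * math.pi):.3e}")
```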


Remark 2.2.4 (Non-asymptotic results). It should be stressed that, unlike the classical limit theorems of probability theory, Hoeffding's inequality is non-asymptotic in that it holds for all fixed $N$ as opposed to $N \to \infty$. The larger $N$, the stronger the inequality becomes. As we will see later, the non-asymptotic nature of concentration inequalities like Hoeffding's makes them attractive in applications in data sciences, where $N$ often corresponds to the sample size.

We can easily derive a version of Hoeffding's inequality for two-sided tails $\mathbb{P}\{|S| \ge t\}$ where $S = \sum_{i=1}^N a_i X_i$. Indeed, applying Hoeffding's inequality for $-X_i$ instead of $X_i$ (these are again independent symmetric Bernoulli random variables), we obtain a bound on $\mathbb{P}\{-S \ge t\}$. Combining the two bounds, we obtain a bound on

$$\mathbb{P}\{|S| \ge t\} = \mathbb{P}\{S \ge t\} + \mathbb{P}\{-S \ge t\}.$$

Thus the bound doubles, and we obtain:

Theorem 2.2.5 (Hoeffding's inequality, two-sided). Let $X_1, \dots, X_N$ be independent symmetric Bernoulli random variables, and $a = (a_1, \dots, a_N) \in \mathbb{R}^N$. Then, for any $t > 0$, we have

$$\mathbb{P}\left\{\left|\sum_{i=1}^N a_i X_i\right| \ge t\right\} \le 2\exp\left(-\frac{t^2}{2\|a\|_2^2}\right).$$

Our proof of Hoeffding's inequality, which is based on bounding the moment generating function, is quite flexible. It applies far beyond the canonical example of the symmetric Bernoulli distribution. For example, the following extension of Hoeffding's inequality is valid for general bounded random variables.

Theorem 2.2.6 (Hoeffding's inequality for general bounded random variables). Let $X_1, \dots, X_N$ be independent random variables. Assume that $X_i \in [m_i, M_i]$ for every $i$. Then, for any $t > 0$, we have

$$\mathbb{P}\left\{\sum_{i=1}^N (X_i - \mathbb{E}X_i) \ge t\right\} \le \exp\left(-\frac{2t^2}{\sum_{i=1}^N (M_i - m_i)^2}\right).$$

Exercise 2.2.7. KK Prove Theorem 2.2.6, possibly with some absolute constant instead of 2 in the tail.

Exercise 2.2.8 (Boosting randomized algorithms). KK Imagine we have an algorithm for solving some decision problem (e.g. is a given number $p$ a prime?). Suppose the algorithm makes a decision at random and returns the correct answer with probability $\frac{1}{2} + \delta$ with some $\delta > 0$, which is just a bit better than a random guess. To improve the performance, we run the algorithm $N$ times and take the majority vote. Show that, for any $\varepsilon \in (0,1)$, the answer is correct with probability at least $1 - \varepsilon$, as long as

$$N \ge \frac{1}{2\delta^2} \ln\left(\frac{1}{\varepsilon}\right).$$

Hint: Apply Hoeffding's inequality for $X_i$ being the indicators of the wrong answers.


Exercise 2.2.9 (Robust estimation of the mean). KKK Suppose we want to estimate the mean $\mu$ of a random variable $X$ from a sample $X_1, \dots, X_N$ drawn independently from the distribution of $X$. We want an $\varepsilon$-accurate estimate, i.e. one that falls in the interval $(\mu - \varepsilon, \mu + \varepsilon)$.

(a) Show that a sample² of size $N = O(\sigma^2/\varepsilon^2)$ is sufficient to compute an $\varepsilon$-accurate estimate with probability at least $3/4$, where $\sigma^2 = \operatorname{Var} X$.

Hint: Use the sample mean $\hat\mu := \frac{1}{N}\sum_{i=1}^N X_i$.

(b) Show that a sample of size $N = O(\log(\delta^{-1})\,\sigma^2/\varepsilon^2)$ is sufficient to compute an $\varepsilon$-accurate estimate with probability at least $1 - \delta$.

Hint: Use the median of $O(\log(\delta^{-1}))$ weak estimates from part (a).

Exercise 2.2.10 (Small ball probabilities). KK Let $X_1, \dots, X_N$ be non-negative independent random variables with continuous distributions. Assume that the densities of $X_i$ are uniformly bounded by 1.

(a) Show that the MGF of $X_i$ satisfies

$$\mathbb{E}\exp(-tX_i) \le \frac{1}{t} \quad \text{for all } t > 0.$$

(b) Deduce that, for any $\varepsilon > 0$, we have

$$\mathbb{P}\left\{\sum_{i=1}^N X_i \le \varepsilon N\right\} \le (e\varepsilon)^N.$$

Hint: Rewrite the inequality $\sum X_i \le \varepsilon N$ as $\sum (-X_i/\varepsilon) \ge -N$ and proceed like in the proof of Hoeffding's inequality. Use part (a) to bound the MGF.
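A concrete instance of Exercise 2.2.10, chosen by us for illustration, is $X_i \sim \mathrm{Unif}[0,1]$, whose density is bounded by 1. Then $\mathbb{E}e^{-tX_i} = (1 - e^{-t})/t$. For $\varepsilon N \le 1$, the small ball probability equals the volume of a simplex, $(\varepsilon N)^N/N!$. The sketch compares both quantities with the bounds of the exercise; the helper names are hypothetical.

```python
import math

def uniform_neg_mgf(t: float) -> float:
    """E exp(-t U) for U ~ Unif[0, 1], which equals (1 - e^{-t}) / t."""
    return -math.expm1(-t) / t

def uniform_small_ball(n: int, eps: float) -> float:
    """Exact P{U_1 + ... + U_N <= eps N} for i.i.d. Unif[0, 1], valid when eps N <= 1,
    where it equals the simplex volume (eps N)^N / N! (computed in log space)."""
    s = eps * n
    if not 0.0 <= s <= 1.0:
        raise ValueError("formula requires 0 <= eps * N <= 1")
    return math.exp(n * math.log(s) - math.lgamma(n + 1)) if s > 0 else 0.0

for n in [1, 5, 20]:
    eps = 1.0 / (2 * n)
    print(n, uniform_small_ball(n, eps), (math.e * eps) ** n)
```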

2.3 Chernoff’s inequality

As we noted, Hoeffding's inequality is quite sharp for symmetric Bernoulli random variables. But the general form of Hoeffding's inequality (Theorem 2.2.6) is sometimes too conservative and does not give sharp results. This happens, for example, when $X_i$ are Bernoulli random variables with parameters $p_i$ so small that we expect $S_N$ to have an approximately Poisson distribution according to Theorem 1.3.4. However, Hoeffding's inequality is not sensitive to the magnitudes of $p_i$, and the Gaussian tail bound it gives is very far from the true, Poisson, tail. In this section we study Chernoff's inequality, which is sensitive to the magnitudes of $p_i$.

Theorem 2.3.1 (Chernoff's inequality). Let $X_i$ be independent Bernoulli random variables with parameters $p_i$. Consider their sum $S_N = \sum_{i=1}^N X_i$ and denote its mean by $\mu = \mathbb{E}S_N$. Then, for any $t > \mu$, we have

$$\mathbb{P}\{S_N \ge t\} \le e^{-\mu}\left(\frac{e\mu}{t}\right)^t.$$

² More accurately, this claim means that there exists an absolute constant $C$ such that if $N \ge C\sigma^2/\varepsilon^2$ then $\mathbb{P}\{|\hat\mu - \mu| \le \varepsilon\} \ge 3/4$. Here $\hat\mu$ is the sample mean; see the hint.


Proof We will use the same method, based on the moment generating function, as we did in the proof of Hoeffding's inequality, Theorem 2.2.2. We repeat the first steps of that argument, leading to (2.5) and (2.6): multiply both sides of the inequality $S_N \ge t$ by a parameter $\lambda$, exponentiate, and then use Markov's inequality and independence. This gives

$$\mathbb{P}\{S_N \ge t\} \le e^{-\lambda t} \prod_{i=1}^N \mathbb{E}\exp(\lambda X_i). \tag{2.7}$$

It remains to bound the MGF of each Bernoulli random variable $X_i$ separately. Since $X_i$ takes value 1 with probability $p_i$ and value 0 with probability $1 - p_i$, we have

$$\mathbb{E}\exp(\lambda X_i) = e^\lambda p_i + (1 - p_i) = 1 + (e^\lambda - 1)p_i \le \exp\left[(e^\lambda - 1)p_i\right].$$

In the last step, we used the numeric inequality $1 + x \le e^x$. Consequently,

$$\prod_{i=1}^N \mathbb{E}\exp(\lambda X_i) \le \exp\left[(e^\lambda - 1)\sum_{i=1}^N p_i\right] = \exp\left[(e^\lambda - 1)\mu\right].$$

Substituting this into (2.7), we obtain

$$\mathbb{P}\{S_N \ge t\} \le e^{-\lambda t} \exp\left[(e^\lambda - 1)\mu\right].$$

This bound holds for any $\lambda > 0$. Substitute the value $\lambda = \ln(t/\mu)$, which is positive by the assumption $t > \mu$. Then $e^{-\lambda t} = (\mu/t)^t$ and $(e^\lambda - 1)\mu = t - \mu$, so the right-hand side becomes $(\mu/t)^t e^{t - \mu} = e^{-\mu}(e\mu/t)^t$. This completes the proof.
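The sketch below contrasts Chernoff's bound with the general Hoeffding bound (Theorem 2.2.6, taking $[m_i, M_i] = [0,1]$) in the small-$p_i$ regime described at the start of this section. For simplicity we assume all $p_i$ are equal to $p$, a special case of Theorem 2.3.1. The values $N = 1000$, $p = 0.005$ (so $\mu = 5$) are our own choices. The exact binomial tail is summed in log space.

```python
import math

def binom_tail(n: int, p: float, t: float) -> float:
    """Exact P{S >= t} for S ~ Binomial(n, p) with 0 < p < 1, summing the pmf in log space."""
    total = 0.0
    for k in range(max(0, math.ceil(t)), n + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                   + k * math.log(p) + (n - k) * math.log1p(-p))
        total += math.exp(log_pmf)
    return total

def chernoff_bound(mu: float, t: float) -> float:
    """Theorem 2.3.1: P{S_N >= t} <= e^{-mu} (e mu / t)^t for t > mu."""
    return math.exp(-mu) * (math.e * mu / t) ** t

def hoeffding_bound(n: int, mu: float, t: float) -> float:
    """Theorem 2.2.6 with every X_i in [0, 1]: P{S_N - mu >= t - mu} <= exp(-2 (t - mu)^2 / n)."""
    return math.exp(-2.0 * (t - mu) ** 2 / n)

n, p = 1000, 0.005
mu = n * p  # = 5
for t in [10, 15, 20]:
    print(f"t={t}: exact {binom_tail(n, p, t):.2e}, "
          f"Chernoff {chernoff_bound(mu, t):.2e}, Hoeffding {hoeffding_bound(n, mu, t):.2e}")
```

Hoeffding's bound depends only on $N$ and $t - \mu$, not on how small $p$ is. That is why it stays close to 1 here, while Chernoff's bound tracks the Poisson-like tail.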

Exercise 2.3.2 (Chernoff's inequality: lower tails). KK Modify the proof of Theorem 2.3.1 to obtain the following bound on the lower tail. For any $t < \mu$, we have

$$\mathbb{P}\{S_N \le t\} \le e^{-\mu}\left(\frac{e\mu}{t}\right)^t.$$

Exercise 2.3.3 (Poisson tails). KK Let $X \sim \mathrm{Pois}(\lambda)$. Show that for any $t > \lambda$, we have

$$\mathbb{P}\{X \ge t\} \le e^{-\lambda}\left(\frac{e\lambda}{t}\right)^t. \tag{2.8}$$

Hint: Combine Chernoff's inequality with the Poisson limit theorem (Theorem 1.3.4).

Remark 2.3.4 (Poisson tails). Note that the Poisson tail bound (2.8) is quite sharp. Indeed, the probability mass function (1.8) of $X \sim \mathrm{Pois}(\lambda)$ can be approximated via Stirling's formula $k! \approx \sqrt{2\pi k}\,(k/e)^k$ as follows:

$$\mathbb{P}\{X = k\} \approx \frac{1}{\sqrt{2\pi k}} \cdot e^{-\lambda}\left(\frac{e\lambda}{k}\right)^k. \tag{2.9}$$

So our bound (2.8) on the entire tail of $X$ has essentially the same form as the probability of hitting one value $k$ (the smallest one) in that tail. The difference between these two quantities is the multiple $\sqrt{2\pi k}$, which is negligible since both these quantities are exponentially small in $k$.
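Remark 2.3.4 can be tested numerically. The sketch below computes the exact Poisson tail for $\lambda = 10$ (our choice, matching Figure 2.1) and compares it with (2.8). The ratio of bound to exact tail stays below $\sqrt{2\pi t}$, up to the tiny Stirling correction $e^{1/(12t)}$. The tail sum is truncated after 500 terms, an assumption that is harmless for $t > \lambda$; the helper names are our own.

```python
import math

def poisson_pmf(lam: float, k: int) -> float:
    """P{X = k} for X ~ Pois(lam), evaluated in log space to avoid overflow."""
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))

def poisson_tail(lam: float, t: int, terms: int = 500) -> float:
    """P{X >= t}, summing `terms` pmf values from k = t on (the rest is negligible for t > lam)."""
    return sum(poisson_pmf(lam, k) for k in range(t, t + terms))

def poisson_tail_bound(lam: float, t: float) -> float:
    """The bound (2.8): e^{-lam} (e lam / t)^t, valid for t > lam."""
    return math.exp(-lam) * (math.e * lam / t) ** t

lam = 10.0
for t in [15, 20, 30, 40]:
    exact, bound = poisson_tail(lam, t), poisson_tail_bound(lam, t)
    print(f"t={t}: exact {exact:.3e}, bound {bound:.3e}, "
          f"ratio {bound / exact:.1f}, sqrt(2 pi t) {math.sqrt(2 * math.pi * t):.1f}")
```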

Exercise 2.3.5 (Chernoff's inequality: small deviations). KKK Show that, in the setting of Theorem 2.3.1, for $\delta \in (0, 1]$ we have

$$\mathbb{P}\{|S_N - \mu| \ge \delta\mu\} \le 2e^{-c\mu\delta^2},$$

where $c > 0$ is an absolute constant.

Hint: Apply Theorem 2.3.1 and Exercise 2.3.2 with $t = (1 \pm \delta)\mu$ and analyze the bounds for small $\delta$.

Exercise 2.3.6 (Poisson distribution near the mean). K Let $X \sim \mathrm{Pois}(\lambda)$. Show that for $t \in (0, \lambda]$, we have

$$\mathbb{P}\{|X - \lambda| \ge t\} \le 2\exp\left(-\frac{ct^2}{\lambda}\right).$$

Hint: Combine Exercise 2.3.5 with the Poisson limit theorem (Theorem 1.3.4).

Remark 2.3.7 (Large and small deviations). Exercises 2.3.3 and 2.3.6 indicate

two different behaviors of the tail of the Poisson distribution Pois(λ). In the small

deviation regime, near the mean λ, the tail of Pois(λ) is like that for the normal

distribution N (λ, λ). In the large deviation regime, far to the right from the mean,

the tail is heavier and decays like (λ/t)

t

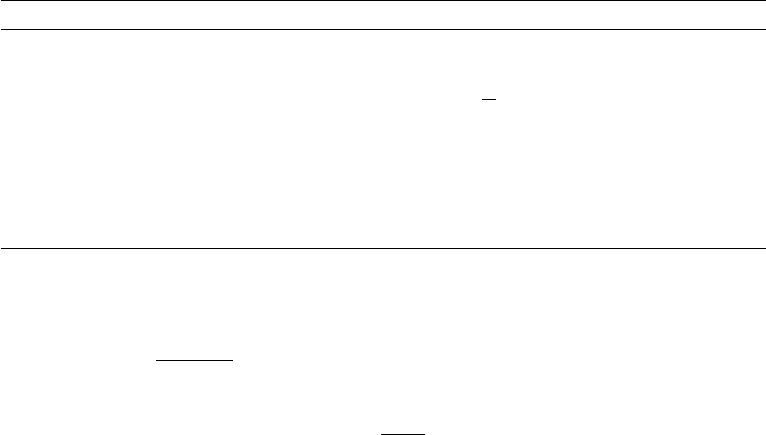

; see Figure 2.1.

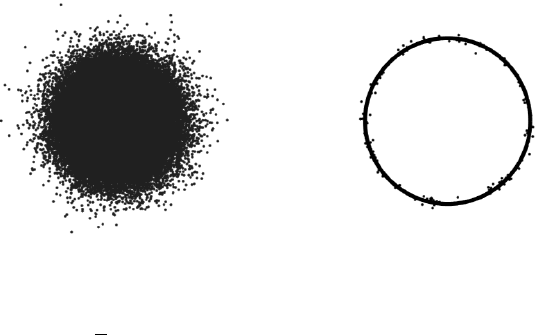

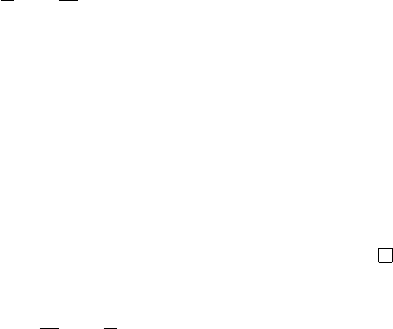

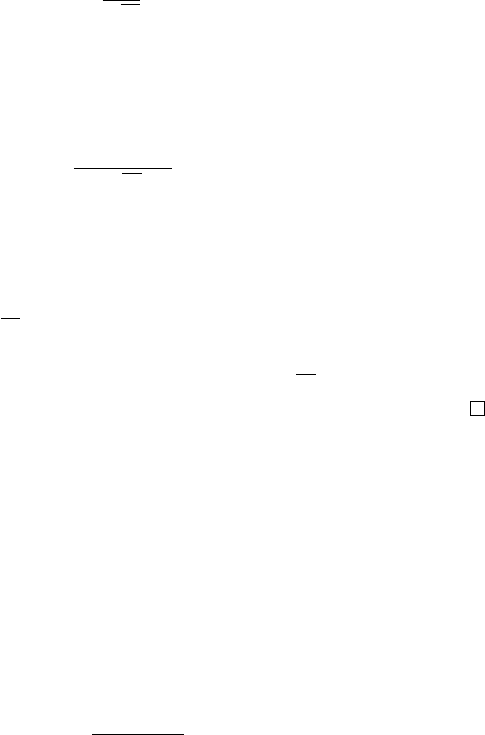

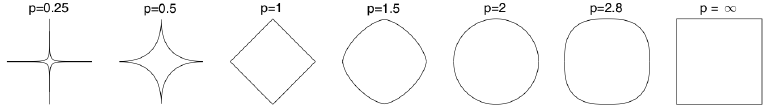

Figure 2.1 The probability mass function of the Poisson distribution

Pois(λ) with λ = 10. The distribution is approximately normal near the

mean λ, but to the right from the mean the tails are heavier.
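The behavior shown in Figure 2.1 can be reproduced in a few lines. The sketch compares the $\mathrm{Pois}(10)$ mass function with the $N(10, 10)$ density at a few integers. Their ratio is close to 1 near the mean and grows quickly to the right of it. The chosen points and function names are our own.

```python
import math

lam = 10.0

def poisson_pmf(k: int) -> float:
    """P{X = k} for X ~ Pois(lam), computed in log space."""
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))

def normal_density(x: float) -> float:
    """Density of N(lam, lam) at x."""
    return math.exp(-(x - lam) ** 2 / (2 * lam)) / math.sqrt(2 * math.pi * lam)

for k in [5, 10, 15, 20, 25, 30]:
    ratio = poisson_pmf(k) / normal_density(k)
    print(k, f"{poisson_pmf(k):.2e}", f"{normal_density(k):.2e}", f"ratio {ratio:.2f}")
```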

Exercise 2.3.8 (Normal approximation to Poisson). KK Let $X \sim \mathrm{Pois}(\lambda)$. Show that, as $\lambda \to \infty$, we have

$$\frac{X - \lambda}{\sqrt{\lambda}} \to N(0,1) \quad \text{in distribution.}$$

Hint: Derive this from the central limit theorem. Use the fact that the sum of independent Poisson random variables has a Poisson distribution.


2.4 Application: degrees of random graphs

We give an application of Chernoff’s inequality to a classical object in probability:

random graphs.

The most thoroughly studied model of random graphs is the classical Erdős-Rényi model $G(n, p)$, which is constructed on a set of $n$ vertices by connecting every pair of distinct vertices independently with probability $p$. Figure 2.2 shows an example of a random graph $G \sim G(n, p)$. In applications, the Erdős-Rényi model often appears as the simplest stochastic model for large, real-world networks.

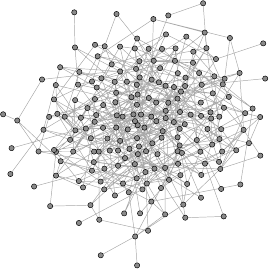

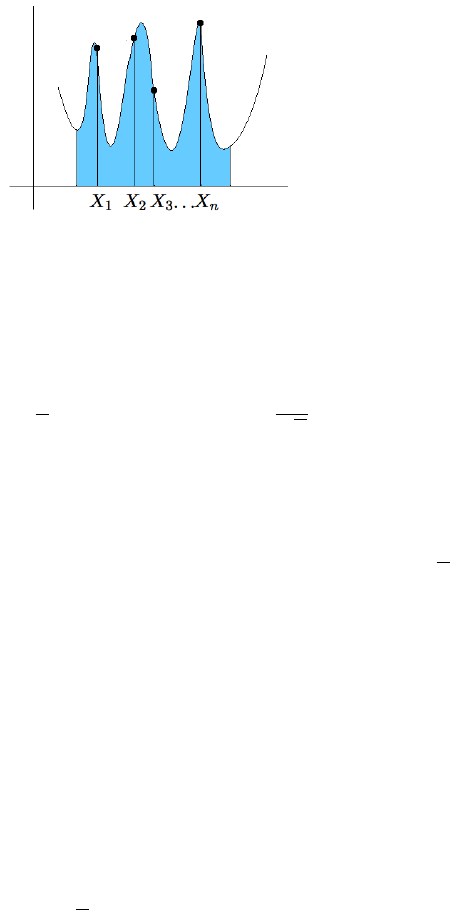

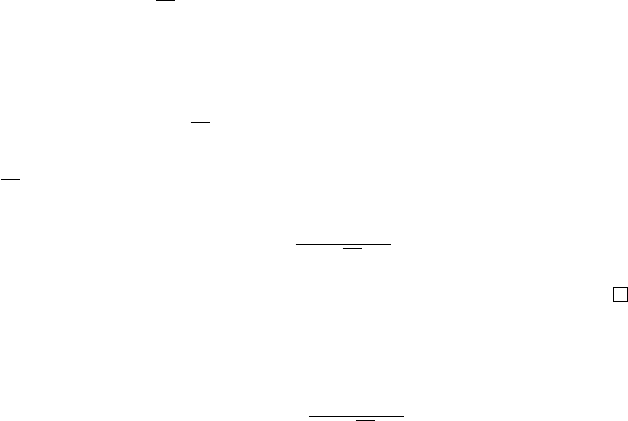

Figure 2.2 A random graph from the Erdős-Rényi model $G(n, p)$ with $n = 200$ and $p = 1/40$.

The degree of a vertex in the graph is the number of edges incident to that vertex. The expected degree of every vertex in $G(n, p)$ clearly equals

$$(n - 1)p =: d.$$

(Check!) We will show that relatively dense graphs, those where $d \gtrsim \log n$, are almost regular with high probability, which means that the degrees of all vertices approximately equal $d$.

Proposition 2.4.1 (Dense graphs are almost regular). There is an absolute constant $C$ such that the following holds. Consider a random graph $G \sim G(n, p)$ with expected degree satisfying $d \ge C\log n$. Then, with high probability (for example, 0.9), the following occurs: all vertices of $G$ have degrees between $0.9d$ and $1.1d$.

Proof The argument is a combination of Chernoff's inequality with a union bound. Let us fix a vertex $i$ of the graph. The degree of $i$, which we denote $d_i$, is a sum of $n - 1$ independent $\mathrm{Ber}(p)$ random variables (the indicators of the edges incident to $i$). Thus we can apply Chernoff's inequality, which yields

$$\mathbb{P}\{|d_i - d| \ge 0.1d\} \le 2e^{-cd}.$$

(We used the version of Chernoff's inequality given in Exercise 2.3.5 here, with $\mu = d$ and $\delta = 0.1$.)


This bound holds for each fixed vertex $i$. Next, we can "unfix" $i$ by taking the union bound over all $n$ vertices. We obtain

$$\mathbb{P}\{\exists i \le n : |d_i - d| \ge 0.1d\} \le \sum_{i=1}^n \mathbb{P}\{|d_i - d| \ge 0.1d\} \le n \cdot 2e^{-cd}.$$

If $d \ge C\log n$ for a sufficiently large absolute constant $C$, the probability is bounded by 0.1. This means that with probability 0.9, the complementary event occurs, and we have

$$\mathbb{P}\{\forall i \le n : |d_i - d| < 0.1d\} \ge 0.9.$$

This completes the proof.
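A small simulation illustrates Proposition 2.4.1. The sketch below samples $G(n, p)$ for $n = 1000$ (so $\log n \approx 6.9$) and three expected degrees, all choices of ours, and reports the smallest and largest degree relative to $d$. As $d$ grows well beyond $\log n$, both ratios move toward 1. Whether they land inside $[0.9, 1.1]$ at this size depends on the unspecified constant $C$, so the sketch makes no claim about that window.

```python
import random

def degree_sequence(n: int, p: float, rng: random.Random) -> list[int]:
    """Sample G(n, p) (each of the n(n-1)/2 pairs joined independently with prob. p); return all degrees."""
    deg = [0] * n
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                deg[i] += 1
                deg[j] += 1
    return deg

rng = random.Random(2024)
n = 1000
results = {}
for d in [5.0, 50.0, 500.0]:
    deg = degree_sequence(n, d / (n - 1), rng)  # p chosen so that (n - 1) p = d
    results[d] = deg
    print(f"d = {d:>5}: min degree / d = {min(deg) / d:.2f}, max degree / d = {max(deg) / d:.2f}")
```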

Sparser graphs, those for which d = o(log n), are no longer almost regular, but

there are still useful bounds on their degrees. The following series of exercises

makes these claims clear. In all of them, we shall assume that the graph size n

grows to infinity, but we don’t assume the connection probability p to be constant

in n.

Exercise 2.4.2 (Bounding the degrees of sparse graphs). K Consider a random

graph G ∼ G(n, p) with expected degrees d = O(log n). Show that with high

probability (say, 0.9), all vertices of G have degrees O(log n).

Hint: Modify the proof of Proposition 2.4.1.

Exercise 2.4.3 (Bounding the degrees of very sparse graphs). KK Consider a random graph $G \sim G(n, p)$ with expected degrees $d = O(1)$. Show that with high probability (say, 0.9), all vertices of $G$ have degrees

$$O\left(\frac{\log n}{\log\log n}\right).$$

Now we pass to the lower bounds. The next exercise shows that Proposition 2.4.1 does not hold for sparse graphs.

Exercise 2.4.4 (Sparse graphs are not almost regular). KKK Consider a random graph $G \sim G(n, p)$ with expected degrees $d = o(\log n)$. Show that with high probability (say, 0.9), $G$ has a vertex with degree $10d$.³

Hint: The principal difficulty is that the degrees $d_i$ are not independent. To fix this, try to replace $d_i$ by some $d_i'$ that are independent. (Try to include not all vertices in the counting.) Then use the Poisson approximation (2.9).

Moreover, very sparse graphs, those for which d = O(1), are even farther from

regular. The next exercise gives a lower bound on the degrees that matches the

upper bound we gave in Exercise 2.4.3.

Exercise 2.4.5 (Very sparse graphs are far from being regular). KK Consider a random graph $G \sim G(n, p)$ with expected degrees $d = O(1)$. Show that with high probability (say, 0.9), $G$ has a vertex with degree

$$\Omega\left(\frac{\log n}{\log\log n}\right).$$

³ We assume here that $10d$ is an integer. There is nothing particular about the factor 10; it can be replaced by any other constant.

2.5 Sub-gaussian distributions

So far, we have studied concentration inequalities that apply only to Bernoulli random variables $X_i$. It would be useful to extend these results to a wider class of distributions. At the very least, we may expect that the normal distribution belongs to this class, since we think of concentration results as quantitative versions of the central limit theorem.

So let us ask: which random variables $X_i$ must obey a concentration inequality like Hoeffding's in Theorem 2.2.5, namely

$$\mathbb{P}\left\{\left|\sum_{i=1}^N a_i X_i\right| \ge t\right\} \le 2\exp\left(-\frac{ct^2}{\|a\|_2^2}\right)?$$

If the sum $\sum_{i=1}^N a_i X_i$ consists of a single term $X_i$, this inequality reads as

$$\mathbb{P}\{|X_i| > t\} \le 2e^{-ct^2}.$$

This gives us an automatic restriction: if we want Hoeffding's inequality to hold, we must assume that the random variables $X_i$ have sub-gaussian tails.

This class of distributions, which we call sub-gaussian, deserves special attention. It is sufficiently wide, as it contains Gaussian, Bernoulli and all bounded distributions. And, as we will see shortly, concentration results like Hoeffding's inequality can indeed be proved for all sub-gaussian distributions. This makes the family of sub-gaussian distributions a natural, and in many cases the canonical, class where one can develop various results in high-dimensional probability theory and its applications.

We now explore several equivalent approaches to sub-gaussian distributions,

examining the behavior of their tails, moments, and moment generating functions.

To pave our way, let us recall how these quantities behave for the standard normal

distribution.

Let $X \sim N(0,1)$. Then using (2.3) and symmetry, we obtain the following tail bound:

$$\mathbb{P}\{|X| \ge t\} \le 2e^{-t^2/2} \quad \text{for all } t \ge 0. \tag{2.10}$$

(Deduce this formally!) In the next exercise, we obtain a bound on the absolute moments and $L^p$ norms of the normal distribution.

Exercise 2.5.1 (Moments of the normal distribution). KK Show that for each $p \ge 1$, the random variable $X \sim N(0,1)$ satisfies

$$\|X\|_{L^p} = (\mathbb{E}|X|^p)^{1/p} = \sqrt{2}\left(\frac{\Gamma((1+p)/2)}{\Gamma(1/2)}\right)^{1/p}.$$

Deduce that

$$\|X\|_{L^p} = O(\sqrt{p}) \quad \text{as } p \to \infty. \tag{2.11}$$
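The formula of Exercise 2.5.1 is easy to evaluate with log-Gamma, which avoids overflow for large $p$. The sketch below checks it against the known values $\|X\|_{L^1} = \sqrt{2/\pi}$, $\|X\|_{L^2} = 1$ and $\|X\|_{L^4} = 3^{1/4}$. It also prints $\|X\|_{L^p}/\sqrt{p}$. By our own Stirling computation, this ratio tends to $e^{-1/2} \approx 0.607$, consistent with (2.11). The function name is hypothetical.

```python
import math

def normal_lp_norm(p: float) -> float:
    """||X||_{L^p} = sqrt(2) * (Gamma((1 + p) / 2) / Gamma(1/2))^(1/p) for X ~ N(0, 1),
    evaluated through log-Gamma so that large p does not overflow."""
    return math.sqrt(2.0) * math.exp((math.lgamma((1.0 + p) / 2.0) - math.lgamma(0.5)) / p)

for p in [1, 2, 4, 10, 100, 10_000]:
    norm = normal_lp_norm(p)
    print(p, norm, norm / math.sqrt(p))  # the ratio tends to e^{-1/2} ~ 0.607
```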

Finally, a classical formula gives the moment generating function of $X \sim N(0,1)$:

$$\mathbb{E}\exp(\lambda X) = e^{\lambda^2/2} \quad \text{for all } \lambda \in \mathbb{R}. \tag{2.12}$$

2.5.1 Sub-gaussian properties

Now let $X$ be a general random variable. The following proposition states that the properties we just considered are equivalent: a sub-gaussian tail decay as in (2.10), the growth of moments as in (2.11), and the growth of the moment generating function as in (2.12). The proof of this result is quite useful; it shows how to transform one type of information about random variables into another.

Proposition 2.5.2 (Sub-gaussian properties). Let $X$ be a random variable. Then the following properties are equivalent; the parameters $K_i > 0$ appearing in these properties differ from each other by at most an absolute constant factor.⁴

(i) There exists $K_1 > 0$ such that the tails of $X$ satisfy

$$\mathbb{P}\{|X| \ge t\} \le 2\exp(-t^2/K_1^2) \quad \text{for all } t \ge 0.$$

(ii) There exists $K_2 > 0$ such that the moments of $X$ satisfy

$$\|X\|_{L^p} = (\mathbb{E}|X|^p)^{1/p} \le K_2\sqrt{p} \quad \text{for all } p \ge 1.$$

(iii) There exists $K_3 > 0$ such that the MGF of $X^2$ satisfies

$$\mathbb{E}\exp(\lambda^2 X^2) \le \exp(K_3^2\lambda^2) \quad \text{for all } \lambda \text{ such that } |\lambda| \le \frac{1}{K_3}.$$

(iv) There exists $K_4 > 0$ such that the MGF of $X^2$ is bounded at some point, namely

$$\mathbb{E}\exp(X^2/K_4^2) \le 2.$$

Moreover, if $\mathbb{E}X = 0$ then properties i–iv are also equivalent to the following one.

(v) There exists $K_5 > 0$ such that the MGF of $X$ satisfies

$$\mathbb{E}\exp(\lambda X) \le \exp(K_5^2\lambda^2) \quad \text{for all } \lambda \in \mathbb{R}.$$

⁴ The precise meaning of this equivalence is the following. There exists an absolute constant $C$ such that property $i$ implies property $j$ with parameter $K_j \le CK_i$ for any two properties $i, j = 1, \dots, 5$.


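Proof i ⇒ ii. Assume property i holds. By homogeneity, and rescaling $X$ to $X/K_1$, we can assume that $K_1 = 1$. Applying the integral identity (Lemma 1.2.1) for $|X|^p$, we obtain

$$\begin{aligned}
\mathbb{E}|X|^p &= \int_0^\infty \mathbb{P}\{|X|^p \ge u\}\,du \\
&= \int_0^\infty \mathbb{P}\{|X| \ge t\}\,pt^{p-1}\,dt && \text{(by change of variables } u = t^p\text{)} \\
&\le \int_0^\infty 2e^{-t^2}\,pt^{p-1}\,dt && \text{(by property i)} \\
&= p\,\Gamma(p/2) && \text{(set } t^2 = s \text{ and use the definition of the Gamma function)} \\
&\le 3p\,(p/2)^{p/2} && \text{(since } \Gamma(x) \le 3x^x \text{ for all } x \ge 1/2\text{)}.
\end{aligned}$$

Taking the $p$-th root yields property ii with $K_2 \le 3$.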

ii ⇒ iii. Assume property ii holds. As before, by homogeneity we may assume that $K_2 = 1$. Recalling the Taylor series expansion of the exponential function, we obtain

$$\mathbb{E}\exp(\lambda^2X^2) = \mathbb{E}\left[1 + \sum_{p=1}^\infty \frac{(\lambda^2X^2)^p}{p!}\right] = 1 + \sum_{p=1}^\infty \frac{\lambda^{2p}\,\mathbb{E}[X^{2p}]}{p!}.$$

Property ii guarantees that $\mathbb{E}[X^{2p}] \le (2p)^p$, while Stirling's approximation yields $p! \ge (p/e)^p$. Substituting these two bounds, we get

$$\mathbb{E}\exp(\lambda^2X^2) \le 1 + \sum_{p=1}^\infty \frac{(2\lambda^2p)^p}{(p/e)^p} = \sum_{p=0}^\infty (2e\lambda^2)^p = \frac{1}{1 - 2e\lambda^2}$$

provided that $2e\lambda^2 < 1$, in which case the geometric series above converges. To bound this quantity further, we can use the numeric inequality $1/(1 - x) \le e^{2x}$, which is valid for $x \in [0, 1/2]$. It follows that

$$\mathbb{E}\exp(\lambda^2X^2) \le \exp(4e\lambda^2) \quad \text{for all } \lambda \text{ satisfying } |\lambda| \le \frac{1}{2\sqrt{e}}.$$

This yields property iii with $K_3 = 2\sqrt{e}$.

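iii ⇒ iv is immediate: applying property iii with $\lambda = \sqrt{\ln 2}/K_3$, which satisfies $|\lambda| \le 1/K_3$, gives $\mathbb{E}\exp(X^2\ln 2/K_3^2) \le \exp(\ln 2) = 2$. This is property iv with $K_4 = K_3/\sqrt{\ln 2}$.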

iv ⇒ i. Assume property iv holds. As before, we may assume that $K_4 = 1$. Then

$$\begin{aligned}
\mathbb{P}\{|X| \ge t\} &= \mathbb{P}\{e^{X^2} \ge e^{t^2}\} \\
&\le e^{-t^2}\,\mathbb{E}e^{X^2} && \text{(by Markov's inequality, Proposition 1.2.4)} \\
&\le 2e^{-t^2} && \text{(by property iv).}
\end{aligned}$$

This proves property i with $K_1 = 1$.

To prove the second part of the proposition, we show that iii ⇒ v and v ⇒ i.


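iii ⇒ v. Assume that property iii holds; as before we can assume that $K_3 = 1$. Let us use the numeric inequality $e^x \le x + e^{x^2}$, which is valid for all $x \in \mathbb{R}$. Then

$$\begin{aligned}
\mathbb{E}e^{\lambda X} &\le \mathbb{E}\left[\lambda X + e^{\lambda^2X^2}\right] \\
&= \mathbb{E}e^{\lambda^2X^2} && \text{(since } \mathbb{E}X = 0 \text{ by assumption)} \\
&\le e^{\lambda^2} && \text{if } |\lambda| \le 1,
\end{aligned}$$

where in the last line we used property iii. Thus we have proved property v in the range $|\lambda| \le 1$. Now assume that $|\lambda| \ge 1$. Here we can use the numeric inequality $2\lambda x \le \lambda^2 + x^2$, which is valid for all $\lambda$ and $x$. It follows that

$$\begin{aligned}
\mathbb{E}e^{\lambda X} &\le e^{\lambda^2/2}\,\mathbb{E}e^{X^2/2} \\
&\le e^{\lambda^2/2} \cdot \exp(1/2) && \text{(by property iii)} \\
&\le e^{\lambda^2} && \text{(since } |\lambda| \ge 1\text{)}.
\end{aligned}$$

This proves property v with $K_5 = 1$.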

v ⇒ i. Assume property v holds; we can assume that $K_5 = 1$. We will use some ideas from the proof of Hoeffding's inequality (Theorem 2.2.2). Let $\lambda > 0$ be a parameter to be chosen later. Then

$$\begin{aligned}
\mathbb{P}\{X \ge t\} &= \mathbb{P}\{e^{\lambda X} \ge e^{\lambda t}\} \\
&\le e^{-\lambda t}\,\mathbb{E}e^{\lambda X} && \text{(by Markov's inequality)} \\
&\le e^{-\lambda t}e^{\lambda^2} && \text{(by property v)} \\
&= e^{-\lambda t + \lambda^2}.
\end{aligned}$$

Optimizing in $\lambda$ and thus choosing $\lambda = t/2$, we conclude that

$$\mathbb{P}\{X \ge t\} \le e^{-t^2/4}.$$

Repeating this argument for $-X$, we also obtain $\mathbb{P}\{X \le -t\} \le e^{-t^2/4}$. Combining these two bounds we conclude that

$$\mathbb{P}\{|X| \ge t\} \le 2e^{-t^2/4}.$$

Thus property i holds with $K_1 = 2$. The proposition is proved.
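The proof of Proposition 2.5.2 relies on several elementary numeric inequalities. A grid check is no proof, but it is a quick way to catch a misremembered constant. The sketch below tests each inequality on a finite grid. The grids and the tolerance for rounding, needed where the two sides touch, are our own choices.

```python
import math

def grid(lo: float, hi: float, steps: int) -> list[float]:
    """Evenly spaced points lo, ..., hi (inclusive)."""
    return [lo + (hi - lo) * k / steps for k in range(steps + 1)]

TOL = 1e-12  # slack for floating-point rounding where the two sides touch (e.g. at x = 0)

# Used in ii => iii: 1 / (1 - x) <= e^{2x} for x in [0, 1/2].
geometric_ok = all(1.0 / (1.0 - x) <= math.exp(2.0 * x) + TOL for x in grid(0.0, 0.5, 10_000))

# Used in iii => v: e^x <= x + e^{x^2} for all real x (checked on [-5, 5]).
exp_ok = all(math.exp(x) <= x + math.exp(x * x) + TOL for x in grid(-5.0, 5.0, 100_000))

# Used in iii => v: 2 lambda x <= lambda^2 + x^2, i.e. (lambda - x)^2 >= 0.
amgm_ok = all(2 * l * x <= l * l + x * x + TOL for l in grid(-3, 3, 60) for x in grid(-3, 3, 60))

# Used in i => ii: Gamma(x) <= 3 x^x for x >= 1/2 (checked on [1/2, 100] in log form).
gamma_ok = all(math.lgamma(x) <= math.log(3.0) + x * math.log(x) + TOL
               for x in grid(0.5, 100.0, 100_000))

print(geometric_ok, exp_ok, amgm_ok, gamma_ok)
```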

Remark 2.5.3. The constant 2 that appears in some properties in Proposition 2.5.2 does not have any special meaning; it can be replaced by any other absolute constant that is larger than 1. (Check!)

Exercise 2.5.4. KK Show that the condition $\mathbb{E}X = 0$ is necessary for property v to hold.

Exercise 2.5.5 (On property iii in Proposition 2.5.2). KK

(a) Show that if $X \sim N(0,1)$, the function $\lambda \mapsto \mathbb{E}\exp(\lambda^2X^2)$ is only finite in some bounded neighborhood of zero.

(b) Suppose that some random variable $X$ satisfies $\mathbb{E}\exp(\lambda^2X^2) \le \exp(K\lambda^2)$ for all $\lambda \in \mathbb{R}$ and some constant $K$. Show that $X$ is a bounded random variable, i.e. $\|X\|_\infty < \infty$.


2.5.2 Definition and examples of sub-gaussian distributions

Definition 2.5.6 (Sub-gaussian random variables). A random variable $X$ that satisfies one of the equivalent properties i–iv in Proposition 2.5.2 is called a sub-gaussian random variable. The sub-gaussian norm of $X$, denoted $\|X\|_{\psi_2}$, is defined to be the smallest $K_4$ in property iv. In other words, we define

$$\|X\|_{\psi_2} = \inf\left\{t > 0 : \mathbb{E}\exp(X^2/t^2) \le 2\right\}. \tag{2.13}$$

Exercise 2.5.7. KK Check that $\|\cdot\|_{\psi_2}$ is indeed a norm on the space of sub-gaussian random variables.

Let us restate Proposition 2.5.2 in terms of the sub-gaussian norm. It states that every sub-gaussian random variable $X$ satisfies the following bounds:

$$\mathbb{P}\{|X| \ge t\} \le 2\exp(-ct^2/\|X\|_{\psi_2}^2) \quad \text{for all } t \ge 0; \tag{2.14}$$

$$\|X\|_{L^p} \le C\|X\|_{\psi_2}\sqrt{p} \quad \text{for all } p \ge 1; \tag{2.15}$$

$$\mathbb{E}\exp(X^2/\|X\|_{\psi_2}^2) \le 2;$$

$$\text{if } \mathbb{E}X = 0 \text{ then } \mathbb{E}\exp(\lambda X) \le \exp(C\lambda^2\|X\|_{\psi_2}^2) \quad \text{for all } \lambda \in \mathbb{R}. \tag{2.16}$$

Here $C, c > 0$ are absolute constants. Moreover, up to absolute constant factors, $\|X\|_{\psi_2}$ is the smallest possible number that makes each of these inequalities valid.

Example 2.5.8. Here are some classical examples of sub-gaussian distributions.

(a) (Gaussian): As we already noted, $X \sim N(0,1)$ is a sub-gaussian random variable with $\|X\|_{\psi_2} \le C$, where $C$ is an absolute constant. More generally, if $X \sim N(0, \sigma^2)$ then $X$ is sub-gaussian with

$$\|X\|_{\psi_2} \le C\sigma.$$

(Why?)

(b) (Bernoulli): Let $X$ be a random variable with symmetric Bernoulli distribution (recall Definition 2.2.1). Since $|X| = 1$, it follows that $X$ is a sub-gaussian random variable with

$$\|X\|_{\psi_2} = \frac{1}{\sqrt{\ln 2}}.$$

(c) (Bounded): More generally, any bounded random variable $X$ is sub-gaussian with

$$\|X\|_{\psi_2} \le C\|X\|_\infty, \tag{2.17}$$

where $C = 1/\sqrt{\ln 2}$.
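Definition (2.13) can be evaluated numerically whenever $s \mapsto \mathbb{E}\exp(sX^2)$ is available. The map $t \mapsto \mathbb{E}\exp(X^2/t^2)$ is non-increasing, so a bisection on $t$ finds the infimum. The sketch below assumes $X$ is not almost surely zero and uses helper names of our own. It reproduces Example 2.5.8(b) and the bound (2.17). For $X \sim N(0, \sigma^2)$ it uses the identity $\mathbb{E}e^{sX^2} = (1 - 2s\sigma^2)^{-1/2}$, which by our own computation gives $\|X\|_{\psi_2} = \sigma\sqrt{8/3}$, consistent with Example 2.5.8(a).

```python
import math
from typing import Callable, Sequence

def psi2_norm(mgf_of_square: Callable[[float], float], rel_tol: float = 1e-12) -> float:
    """Sub-gaussian norm (2.13): inf{t > 0 : E exp(X^2 / t^2) <= 2}.

    mgf_of_square(s) must return E exp(s X^2) for s > 0 (math.inf where it diverges).
    Assumes X is not almost surely zero, so that the infimum is positive.
    """
    def ok(t: float) -> bool:
        return mgf_of_square(1.0 / (t * t)) <= 2.0

    hi = 1.0
    while not ok(hi):  # E exp(X^2/t^2) decreases in t: enlarge t until the condition holds
        hi *= 2.0
    lo = hi / 2.0
    while ok(lo):      # shrink t until the condition fails
        lo /= 2.0
    while hi - lo > rel_tol * hi:  # invariant: ok(hi) is True and ok(lo) is False
        mid = 0.5 * (lo + hi)
        if ok(mid):
            hi = mid
        else:
            lo = mid
    return hi

def discrete_mgf_of_square(values: Sequence[float], probs: Sequence[float]) -> Callable[[float], float]:
    """E exp(s X^2) for a finitely supported X taking values[i] with probability probs[i]."""
    def mgf(s: float) -> float:
        try:
            return sum(p * math.exp(s * v * v) for v, p in zip(values, probs))
        except OverflowError:
            return math.inf
    return mgf

def gaussian_mgf_of_square(sigma: float) -> Callable[[float], float]:
    """E exp(s X^2) for X ~ N(0, sigma^2): 1 / sqrt(1 - 2 s sigma^2) if 2 s sigma^2 < 1, else infinite."""
    def mgf(s: float) -> float:
        a = 2.0 * s * sigma * sigma
        return 1.0 / math.sqrt(1.0 - a) if a < 1.0 else math.inf
    return mgf

print(psi2_norm(discrete_mgf_of_square([-1.0, 1.0], [0.5, 0.5])), 1 / math.sqrt(math.log(2)))
print(psi2_norm(gaussian_mgf_of_square(1.0)), math.sqrt(8 / 3))
```

Bisection is used because (2.13) has no closed form in general, while monotonicity in $t$ is guaranteed for any $X$.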

Exercise 2.5.9. K Check that Poisson, exponential, Pareto and Cauchy distributions are not sub-gaussian.


Exercise 2.5.10 (Maximum of sub-gaussians). KKK Let $X_1, X_2, \dots$ be a sequence of sub-gaussian random variables, which are not necessarily independent. Show that

$$\mathbb{E}\max_i \frac{|X_i|}{\sqrt{1 + \log i}} \le CK,$$

where $K = \max_i \|X_i\|_{\psi_2}$. Deduce that for every $N \ge 2$ we have

$$\mathbb{E}\max_{i \le N} |X_i| \le CK\sqrt{\log N}.$$

Hint: Denote $Y_i := X_i/(CK\sqrt{1 + \log i})$ with absolute constant $C$ chosen sufficiently large. Use the sub-gaussian tail bound (2.14) and then a union bound to conclude that $\mathbb{P}\{\exists i : |Y_i| \ge t\} \lesssim e^{-t^2}$ for any $t \ge 1$. Use the integrated tail formula (Lemma 1.2.1), breaking the integral into two integrals: one over $[0, 1]$ (whose value should be trivial to bound) and the other over $[1, \infty)$ (where you can use the tail bound obtained before).

Exercise 2.5.11 (Lower bound). KK Show that the bound in Exercise 2.5.10 is sharp. Let $X_1, X_2, \dots, X_N$ be independent $N(0,1)$ random variables. Prove that

$$\mathbb{E}\max_{i \le N} X_i \ge c\sqrt{\log N}.$$
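A Monte Carlo experiment shows the $\sqrt{\log N}$ growth from Exercises 2.5.10 and 2.5.11 for i.i.d. standard normal variables. The sketch also prints $\sqrt{2\log N}$, the classical upper bound $\mathbb{E}\max_{i\le N} X_i \le \sqrt{2\log N}$. That bound is a standard fact and is not proved in this section. The seed and the repetition counts are arbitrary choices of ours.

```python
import math
import random

def mean_max_gaussians(n: int, reps: int, rng: random.Random) -> float:
    """Monte Carlo estimate of E max_{i <= n} X_i for i.i.d. X_i ~ N(0, 1)."""
    return sum(max(rng.gauss(0.0, 1.0) for _ in range(n)) for _ in range(reps)) / reps

rng = random.Random(3)
for n in [10, 100, 1000]:
    est = mean_max_gaussians(n, 300, rng)
    print(f"N={n}: E max ~ {est:.3f}, sqrt(log N) = {math.sqrt(math.log(n)):.3f}, "
          f"sqrt(2 log N) = {math.sqrt(2 * math.log(n)):.3f}")
```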

2.6 General Hoeffding’s and Khintchine’s inequalities

After all the work we did characterizing sub-gaussian distributions in the previous section, we can now easily extend Hoeffding's inequality (Theorem 2.2.2) to general sub-gaussian distributions. But before we do this, let us deduce an important rotation invariance property of sums of independent sub-gaussians.

In the first probability course, we learned that a sum of independent normal random variables $X_i$ is normal. Indeed, if $X_i \sim N(0, \sigma_i^2)$ are independent then

$$\sum_{i=1}^N X_i \sim N\left(0, \sum_{i=1}^N \sigma_i^2\right). \tag{2.18}$$